Are citations the best way to assess a scientific researcher’s worth? David Laband argues that although citation counts are easy to quantify and broadly indicative, they ultimately provide limited information and should only be used with a healthy dose of caution and common sense. At stake is the distribution of enormously important scientific resources, both public and private.

Let me start with a confession. I like and use citations. Does this really make me a bad person? I don’t think so, for reasons that are fundamentally economic and that are reflected in a conversation I had three decades ago with Tom Borcherding, the former longtime editor of Economic Inquiry. Tom observed that people pay relatively little attention to your first paper published in a top-tier journal, but that they pay a lot of attention to everything you publish once your second paper has appeared in a top-tier journal. His common-sense reasoning was that one paper in the very prestigious Journal of Political Economy could be accidental, but the probability of an individual publishing two papers in the Journal of Political Economy by accident is vanishingly small. The lesson is that sometimes just a bit more information about an individual’s publication success significantly enhances an observer’s inferential accuracy. Context matters.

Citations and ‘impact’

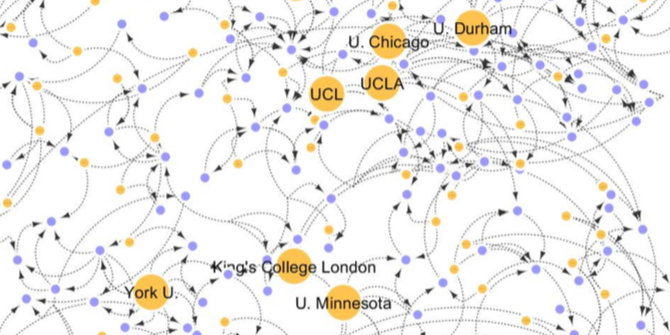

For a variety of reasons, some cost-based, others use-based, the use of citations as a measure of the ‘impact’ of a scientific paper, scholar, academic unit/programme, or institution has expanded enormously within the scientific community during the past 30 years. This fact notwithstanding, a number of scholars have suggested, rightly so in my opinion, that there are problems with citations. In perhaps the most compelling contribution to this literature, Hirsch (2005) argued that how we interpret citations is critically dependent on context.

The importance of context

Hirsch’s general insight about the importance of context with respect to the amount of information derived from citation counts motivated my recent paper. I argue that while rankings of economics journals that are based on mean or median citations per article provide some useful information, additional information with respect to the number of articles published by each journal and the distribution of citations across those articles dramatically increases what one plausibly can infer from the citation information.

To illustrate, consider two separate journals, each of which published 40 articles in 2005 that have since garnered a total of 4,000 citations. Left at that, these journals look identical, with average citations per article equal to 100. But what if the first journal’s citation count resulted from one article with 3,961 citations and 39 articles with one citation each, while the other’s citation count resulted from 40 articles that each attracted 100 citations? The distribution of citations across articles conveys much more useful information than does mean or median citations per article. If you are interested in using a citations-based journal ranking to forecast the relative impact of a recently published paper (that, obviously, does not yet have an established record of citations), such a forecast surely would be influenced by the presence of distributional information.
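The arithmetic behind the two-journal example can be sketched in a few lines of Python. This is only an illustration, not a method taken from the paper: the names `journal_a`, `journal_b`, and `summarize` are hypothetical, and the "top share" figure (the fraction of a journal's citations held by its single most-cited article) is an assumed stand-in for distributional information in general.

```python
from statistics import mean, median

# Hypothetical journals from the example: 40 articles and 4,000 citations each.
journal_a = [3961] + [1] * 39   # one heavily cited article, 39 with one citation each
journal_b = [100] * 40          # every article cited 100 times


def summarize(citations):
    """Return simple summary figures for a list of per-article citation counts."""
    total = sum(citations)
    return {
        "articles": len(citations),
        "total": total,
        "mean": mean(citations),
        "median": median(citations),
        # Illustrative concentration measure (an assumption, not from the source):
        # share of all citations held by the single most-cited article.
        "top_share": max(citations) / total,
    }


for name, journal in (("A", journal_a), ("B", journal_b)):
    print(name, summarize(journal))
```

Both journals report 40 articles, 4,000 citations, and a mean of 100, yet the most-cited article accounts for about 99% of the first journal's citations and only 2.5% of the second's. In this particular example the median (1 versus 100) already hints at the difference, but a single summary number still says little about how citations are spread across articles.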

Drilling down further, the inferences one makes and/or conclusions one draws from an individual’s citation count plausibly will be influenced by such factors as the number of his/her publications, the distribution of citations across papers, the extent of self-citation, how long each paper has been accumulating citations, the size of the relevant community of scholars from which citations are drawn (which varies across scientific disciplines), and whether the citations are critical or laudatory.

Citation counts provide limited information

Where does this brief overview leave us, with respect to policy? My simple answer is that citation counts (and other measures of scientific productivity) provide limited information. This recognition, coupled with common sense, suggests that citation counts be used with caution.

A simple example serves to illustrate. During the course of my 32-year career as an academic economist, the field of the history of economic thought has been slowly, but surely, dying off. Papers written by historians of economic thought rarely, if ever, are published in top economics journals and draw relatively few citations as compared to papers written on currently fashionable subjects such as the economics of happiness or network economics. Does the fact that a historian of economic thought has a much lower citation count since 2000 than a network economist imply that the latter is a ‘better’ economist than the former?

The answer to this question depends entirely on how one defines ‘better,’ and in turn, on why the one is being compared against the other. But the fact is such comparisons are being made constantly now, in a wide variety of academic and institutional settings, all over the world. Some of these comparisons are made at the level of the individual scholar. Increasingly, the comparisons are being made at the department, school, and institution levels. At stake is the distribution of enormously important scientific resources, both public and private.

From an economic perspective, competition for resources is a good thing. But the arbiters of this competition might find it helpful, if not essential, to reflect carefully on what objectives motivate the comparisons being made and the implicit competition being incentivised. With that information established, the usefulness of the arbitration depends critically on both the metrics chosen by the arbiters and how those metrics are used. All this dependency is worrisome from both scientific and policy perspectives.

By all means, use citations – they are a relatively cheap source of information. But the information conveyed by citations is limited. So be cautious. Be careful. Use common sense. Seek the insights provided by context.

This column first appeared on Vox and is reposted with permission.

Note: This article gives the views of the author, and not the position of the Impact of Social Science blog, nor of the London School of Economics.

David Laband is Chair of the School of Economics at Georgia Institute of Technology.
