In this post, which was originally published at the end of last year, Anne Collier considers the major challenges of and recent innovations in the monitoring and moderation of live-streamed content on social media platforms. The issue has since come to the fore, when the tragic terrorist attacks in New Zealand in March were live-streamed on social media. Anne Collier is founder and executive director of The Net Safety Collaborative, home of the US’s social media helpline for schools. She has been blogging about youth and digital media at NetFamilyNews.org since before there were blogs, and advising tech companies since 2009. [Header image credit: D. Vespoli, CC BY-ND 2.0]

Who could’ve predicted that “content moderation” would become such a hot topic? It’s everywhere this year – from hearings on Capitol Hill to news leaks, books, podcasts and conferences. It’s clear that everybody – the companies themselves, users, and certainly policymakers – is struggling to understand how social media companies protect users and free speech at the same time. The struggle is real. Moderating social media to everybody’s satisfaction is like trying to get the entire planet to agree on the best flavour of ice cream.

But even as the extreme challenge of this is dawning on all of us, there’s some real innovation going on. It’s not what you’d normally think, and it’s happening where you might least expect it: live-streamed video, projected to be more than a $70 billion market in two years but already mainstream social media for teens and young adults, according to Pew Research (parents may’ve noticed this!).

Two distinct examples are Twitch, the 800-pound gorilla of streaming platforms based in San Francisco, and Yubo, a fast-growing startup based in Paris. Both are innovating in really interesting ways, blending both human norms and tech tools. Both…

- Innovate around user safety because it’s “good business”

- Have live video in their favour as they work on user safety

- Empower their users to help.

To explain, here’s what they’re up to….

Let’s start with the startup

Yubo, an app that now has more than 15 million users in more than a dozen countries, reached its first million within 10 months without any marketing (it was then called Yellow). The app’s all about communication – chat via live video-streaming. Somehow, the focus of early news coverage was on one Tinder-like product feature, even though Yubo’s safety features have long included a “firewall” that keeps 13- to 17-year-old users separate from adults. Virtually all news coverage has been alarmist, especially in Europe, encouraging parents and child advocates to expect the worst.

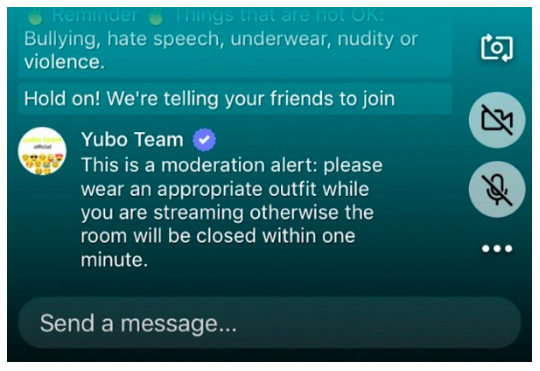

So they doubled down, with a mix of high- and low-tech “tools.” One of them is surprisingly unusual, and it definitely involves an element of surprise: nearly real-time intervention when a user violates a community rule (the rules are no bullying, hate speech, underwear, nudity or violence).

‘Please wear an appropriate outfit’

When the violation happens – maybe a user joins a chat in their underwear – the user almost immediately gets a message like the one in this screenshot:

What’s so unusual about that? you might ask. But this is rare in social media. It’s one of those sudden disruptors that stops the conversation flow in real time, changing the dynamic and helping chatters learn the community standards by seeing them enforced right when things are happening. Yubo can do that because it takes a screenshot of all chat sessions every 10 seconds; so between users’ own reports of annoying behaviour and algorithms running in the background that detect and escalate it, the app can respond very quickly. If the violation doesn’t stop, moderators will either disable the chat mid-stream or suspend the user’s account.
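
To make that flow concrete, here is a minimal sketch of how periodic screenshots, user reports and an algorithm's score might combine into a warning followed by escalation. It is not Yubo's actual system: the `Snapshot` structure, the thresholds and the action names are all assumptions made up for illustration. Only the 10-second interval and the warn-then-escalate order come from the description above.

```python
from dataclasses import dataclass

SCREENSHOT_INTERVAL_SECONDS = 10  # from the post: one screenshot every 10 seconds


@dataclass
class Snapshot:
    """One periodic screenshot of a live chat (hypothetical structure)."""
    seconds_into_chat: int
    classifier_score: float  # assumed 0.0-1.0 likelihood of a rule violation
    user_reports: int        # reports filed by other chatters since the last snapshot


def is_flagged(snapshot, score_threshold=0.9, report_threshold=1):
    """Flag a snapshot if either the algorithm or the users raise a concern.

    Both thresholds are invented for this sketch.
    """
    return (snapshot.classifier_score >= score_threshold
            or snapshot.user_reports >= report_threshold)


def moderate(snapshots):
    """Return (time, action) pairs: warn on the first flag, escalate on the next."""
    actions = []
    warned = False
    for snap in snapshots:
        if not is_flagged(snap):
            continue
        if not warned:
            # First flag: the user gets the near-real-time message.
            actions.append((snap.seconds_into_chat, "warn_user"))
            warned = True
        else:
            # Flagged again after a warning: hand over to a human moderator,
            # who can disable the chat or suspend the account.
            actions.append((snap.seconds_into_chat, "escalate_to_moderator"))
            break
    return actions


# A made-up chat sampled at 0, 10, 20 and 30 seconds.
chat = [
    Snapshot(0, 0.05, 0),
    Snapshot(10, 0.95, 0),   # algorithm spots a likely violation
    Snapshot(20, 0.20, 2),   # two chatters report the user
    Snapshot(30, 0.10, 0),
]
print(moderate(chat))  # [(10, 'warn_user'), (20, 'escalate_to_moderator')]
```

The sketch treats any second flag as "the violation didn't stop", which is a simplification; a real service would presumably weigh how much time has passed and what kind of rule was broken.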

You wouldn’t think an app communicating with users in real time is that unusual, but Yubo says it’s the only social video app that intervenes with young users in this way, and I believe it. In more than 20 years of writing about kids and digital media, I haven’t seen another service that does.

Teaching the algorithms

The other innovative part of this is the algorithms, and the way Yubo uses them to detect problems proactively for faster response (Twitch doesn’t even have algorithms running proactively in the background, they told me). Proactive is unusual. For various reasons – including the way platforms are used – content moderation has always been about 99% reactive, dependent on users reporting problems and never even close to real-time. Bad stuff comes down later – sometimes without explanation.

Knowing how hard it is for algorithms to detect nudity and underwear, I asked Yubo COO Marc-Antoine Durand how that works on his app. “We spent nearly a year labelling every reported piece of content to create classifiers. Every time we had a report, a dedicated team had to classify the content into multiple categories (ex: underwear, nudity, drug abuse, etc.). With this, our algorithms can learn and find patterns.”
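
As a rough illustration of that labelling step, the sketch below groups human-reviewed reports by category so that each category ends up with its own pile of training examples. The image IDs, the `build_training_set` helper and the "other" catch-all category are hypothetical; only the three named categories come from the quote.

```python
from collections import defaultdict

# Categories named in the quote; "other" is an assumed catch-all.
CATEGORIES = {"underwear", "nudity", "drug abuse", "other"}


def build_training_set(labelled_reports):
    """Group reviewed reports by the category a human labeller assigned."""
    by_category = defaultdict(list)
    for image_id, category in labelled_reports:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category!r}")
        by_category[category].append(image_id)
    return dict(by_category)


# Made-up reports, each already labelled by a (hypothetical) review team.
reports = [
    ("img-001", "underwear"),
    ("img-002", "nudity"),
    ("img-003", "underwear"),
    ("img-004", "drug abuse"),
]
training_set = build_training_set(reports)
print({category: len(ids) for category, ids in training_set.items()})
# {'underwear': 2, 'nudity': 1, 'drug abuse': 1}
```

The more labelled examples each category collects, the more an algorithm has to learn from, which is why a year of labelling every report matters.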

It takes a whole lot of data – a lot of images – to “teach” algorithms what they’re “looking for.” An app that’s all live-streamed video, all the time, very quickly amasses a ton of data for an algorithm to chew on. And Yubo has reached 95% accuracy in the nudity category (its highest priority) with a dedicated data scientist feeding (and tweaking) the algorithms full-time, he said. “We had to make a distinction between underwear and swimsuits,” he added. “We trained our algorithms to know the difference by detecting the presence of sand, water, a swimming pool, etc.”
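
Two ideas in that paragraph can be sketched in a few lines: using context cues to tell swimsuits from underwear, and measuring accuracy against human labels. The hand-written rule below merely stands in for what Yubo's algorithms learn from images; the "minimal clothing" tag, the function names and the sample scenes are assumptions for illustration, and the resulting number has nothing to do with Yubo's reported 95%.

```python
# Context cues named in the quote.
BEACH_OR_POOL_CUES = {"sand", "water", "swimming pool"}


def label_outfit(detected_objects):
    """Toy rule: minimal clothing plus a beach or pool cue counts as swimwear."""
    objects = set(detected_objects)
    if "minimal clothing" not in objects:
        return "ok"
    return "swimsuit" if objects & BEACH_OR_POOL_CUES else "underwear"


def accuracy(predictions, true_labels):
    """Share of predictions that match the human-assigned labels."""
    if len(predictions) != len(true_labels):
        raise ValueError("predictions and labels must be the same length")
    correct = sum(p == t for p, t in zip(predictions, true_labels))
    return correct / len(true_labels)


# Made-up scenes (objects "seen" in a screenshot) and made-up human labels.
scenes = [
    ["minimal clothing", "sand"],
    ["minimal clothing", "bedroom"],
    ["t-shirt"],
    ["minimal clothing", "swimming pool"],
]
human_labels = ["swimsuit", "underwear", "ok", "underwear"]

predictions = [label_outfit(scene) for scene in scenes]
print(predictions)                          # ['swimsuit', 'underwear', 'ok', 'swimsuit']
print(accuracy(predictions, human_labels))  # 0.75
```

The last scene shows why context cues are only a heuristic: a pool in the background nudges the rule towards "swimsuit" even when a human reviewer disagrees.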

Nudity isn’t a huge problem on the app – for example, only 0.1-0.2% of users upload suggestive content as profile photos on any given day – but Yubo says it prioritises nudity and underwear all the same.

Social norms for safety

But those aren’t the only tools in the toolbox. Probably because live-streamed video hasn’t come up much on Capitol Hill or in news about “content moderation” (yet), we don’t hear much about how live video itself – and the social norms of the people using it – are safety factors too. But it’s not hard for anyone who uses Skype or Google Hangouts to understand that socialising in video chat is almost exactly like socialising in person, where it’s extremely unusual for people to show up in their underwear, right? If someone decides to in Yubo, and others in the chat have a problem with that, the person typically gets reported because it’s just plain annoying, and moderators can suspend or delete their account. The every-10-second screenshots are backup so moderators can act quickly on anything not reported. On top of that, Yubo provides moderation tools to the users who open chats: the “streamer” of each “live.”

Then there’s Twitch

Twitch leverages social norms too. It empowers streamers, or broadcasters, with tools to maintain safety in chat during their live streams. With 44 billion minutes streamed per month by 3.1 million “streamers” (according to TwitchTracker.com) the company really has to harness the safety power of social norms in millions of communities.

“We know through statistics and user studies that, when you have a lot of toxicity or bad behaviour online, you lose users,” said Twitch Associate General Counsel Shirin Keen at the CoMo Summit in Washington, D.C., last spring, one of the first conferences on the subject. “Managing your community is core to success on Twitch.” So because streamer success is Twitch’s bread and butter, the platform has to help streamers help their viewers feel safe.

Twitch’s phenomenal growth has been mostly about video gamers streaming their videogame play. But the platform’s branching out – to the vastness of IRL (for “In Real Life”), its biggest new non-gaming channel. And just like in real real life, communities develop their own social norms. The Twitch people know that. The platform lets streamers shape their own norms and rules. Streamers appoint their own content moderators, and Twitch provides them with moderation tools for maintaining their own community’s standards.

So can you see how online safety innovation is as much about humans as it is about tech – what we find acceptable or feel comfortable with in the moment and in a certain social setting? Take “emotes,” for example. They’re a way users can express their emotions in otherwise dry, impersonal text chat. They too are part of channel culture and community-building at Twitch. So are “bits,” colourful little animated graphics that viewers can buy to cheer a streamer on from the chat window. And these graphical elements help moderators see – at a glance, in a fast-moving chat stream – when things are good or going south. Last summer Twitch launched “Creator Camp” for training streamers and their “mods” (channel moderators) on how to create their community and its unique culture, “all without bug spray, sunburns, and cheesy sing-alongs (ok maybe a few sing-alongs),” Twitch tells its streamers.

So two vastly different live video companies are experimenting with something we’re all beginning to see, that…

- Live video makes social media a lot like in-person socialising

- Social media, like social anything, is affected by social norms

- Social norms help make socialising safer

- People stick around and have more fun when they feel safe.

The bottom line being that safety – in the extremely participatory business of social media – is good business.

The transparency factor

That’s the kind of innovating happening on the inside of industry. Then there’s the kind happening out here in society. If it could be boiled down to a single word, that would be “transparency.” It may never be a glass box, but the black box is slowly becoming less opaque.

While social media companies have to keep innovating around safety, they also have to innovate around how to make the challenge of, and users’ role in, content moderation more transparent. It’s good we out here are pushing for that, but we have to innovate too. As participants in the public discussion about user safety, we all need to understand a couple of things: that effective content moderation is a balance that companies have to strike between protecting users and protecting their freedom of expression, and that, if the platforms are too transparent, their systems can be gamed or hacked more easily, which makes everybody less safe.

So even as we demand free speech, more protection and greater transparency, the public discussion has to keep innovating too. Safety is good business and works from the inside out and the outside in.

Notes

Disclosure: I am an Internet safety adviser to Yubo and other social media services, including Facebook, Twitter and Snapchat, but not including Twitch.

This post originally appeared on the Medium website and it has been reposted here with permission.

This post gives the views of the authors and does not represent the position of the LSE Parenting for a Digital Future blog, nor of the London School of Economics and Political Science.