The quality of scientific evidence in government heavily depends upon the independent assessment of research. Pressure from those commissioning the research may pose a threat to scientific integrity and rigorous policy-making. Edward Page reports that whilst there is strong evidence of government leaning, this leaning appears to have little systematic impact on the nature of the conclusions that researchers reach due to the presence of disincentives within academia and research administrators within government.

Do governments lean too much on the researchers who evaluate their policies? One can think of one reason why they would: to get good publicity. And one can think of a reason why researchers would give in to such pressure: to get contracts. But there are also plausible reasons to expect government not to lean on researchers: whether government genuinely wants to draw lessons from research or just wants good PR, the research needs to be rigorous rather than obsequious. Moreover, researchers might not be expected to give in to pressure, since their reputations are built on independence and can be destroyed by evidence or suspicions that their professional opinions are for sale.

The issue is an important one even though the signs are that the Coalition government is not as keen as New Labour was on securing evaluation evidence by commissioning research. If evaluation research is generated under pressure from sponsors intending to produce results that suit them, then the nature of this pressure and the responses of those facing it are at the very least relevant to our assessment of the quality of that evidence. To examine government leaning and researcher buckling we conducted a study, based on a web survey of 204 academics who had done research work for government since 2005, supplemented by interviews with 22 researchers.

The strongest evidence of government influence can be found in the very initial stages of the research: setting up the research design. Some of our respondents offered comments along these lines:

… the real place where research is politically managed is in the selection of topics/areas to be researched and then in the detailed specification. It is there that they control the kinds of questions that are to be asked. This gives plenty of opportunity to avoid difficult results.

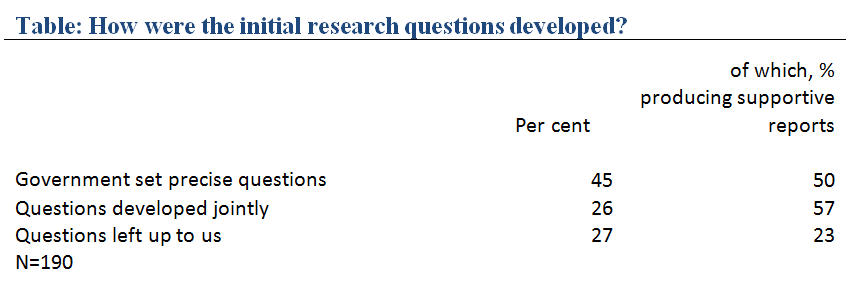

When asked, nearly half of our respondents (45 per cent) said that “the government organisation(s) had a clear idea of the precise questions the research should examine”. A further 27 per cent said that “the government organisation(s) had a broad idea of what the research should examine and left the definition of the precise research questions to me/us”, 26 per cent indicated that the development of the research questions was a joint effort with government, and 2 per cent did not know.

Whether government intervened in the research design or not appears to have a significant influence on whether the research produced was supportive of government policy or not, as reported by our respondents. Of those who were left to develop the research questions, only 23 per cent produced a report broadly supportive of government policy compared with 50 per cent of those writing reports. When government was involved in developing research questions, whether alone or in conjunction with researchers, their reports were substantially more likely to be supportive.

At no other stage in the research did government pressure have an impact on how supportive the final report turned out to be. This was not for want of trying. For instance, 52 per cent reported they were asked to make significant changes to their draft reports (i.e. affecting the interpretation of findings or the weight given to them). And some of our respondents elaborated along these lines: “There was a lot of dialogue back and forth at the end between us and the Department … before it was published to ensure they did not look bad. They wanted certain wording changed so that it was most beneficial from a PR and marketing point of view; and they wanted certain things emphasised and certain things omitted”. Yet such pressure seems to have had little effect on whether the end result was a favourable or critical report. If anything, those asked to make changes were more likely to produce critical reports (though this finding does not approach statistical significance).

We must be careful about what we mean by “government” influence. There are at least four distinct groups within a ministry, each with a different relationship with researchers. First, there are the officials who are responsible for research, possibly because they are researchers themselves. Where mentioned, these seem to have the best relationships with researchers and appear most likely to share a belief in the importance of programme evaluation objectives in research. A second group is made up of “policy people”: officials with the task of looking after policy within the department, whether to amend, defend, expand or contract it. One respondent concisely summarised her view of the difference between these two groups: “the research manager places a lot of emphasis on research integrity, whereas the policy teams may have their own ideological or policy motives”.

The third group is the ministerial political leadership. Our survey evidence suggests, unsurprisingly, that in the view of our respondents they are highly likely to downvalue the programme evaluation objectives of research: only 4 per cent of respondents (N=182) agreed with the proposition that “Ministers are prepared to act on evidence provided by social research even when it runs counter to their views”. The professionals and service providers in the programmes being evaluated make up a fourth group. Their views might be sought on an ad hoc basis as the research develops, or they might be part of “stakeholder” or “expert” steering groups. Several respondents and interviewees mentioned the role of service providers as a source of constraint on their research findings. One argued:

We met regularly with the Head of Research in the [Department] and also occasionally with their policymaking colleagues. One difficulty with these meetings was that they insisted representatives of the [organisation running programme being evaluated] attended. This made it quite difficult to discuss the report openly because these people’s livelihoods depended on the scheme. My part of the report was critical of the [programme] and I thought it inappropriate for the [department] to invite these people along. I felt it hindered honest and open discussion.

Overall there is sufficient evidence here to suggest that governments do lean on researchers. However, for the most part this leaning appears to have little systematic impact on the nature of the conclusions that researchers reach. The most effective constraint appears to be found when government specifies the nature of the research to be done at the outset. No other form of constraint has as powerful an effect on the degree to which the overall conclusions the researchers reach support government policy.

Our findings suggest that there are two main forces that reduce the impact of government “leaning” on the character and quality of the research reports. The first is the persistence of disincentives within academic career structures to compromise scientific integrity for the sake of securing government contracts. Our findings point to this, but we also have to note the shortcomings of our evidence base in this respect: it is based on academics reporting on their own behaviour. The second is the existence within government of a body of research administrators given significant responsibility for developing and managing research and coming in between policy officials and politicians on the one hand and the researchers on the other. In the absence of such a body of research administrators, the pressures on researchers to produce politically congenial research would likely be far stronger. Without the disincentives to compromise scientific integrity, one would have to have serious concern for the value of commissioned research.

This research by the LSE GV314 Group was recently published in the journal Public Administration. GV314 Empirical Research in Government is a final year undergraduate course in the Government Department at LSE. With a group of up to 15 students, Edward Page conducts a separate research project each year. More details on the project can be found here.

This article was originally published on LSE’s Impact of Social Sciences blog.

Note: This article gives the views of the author, and not the position of the Impact of Social Science blog, nor of the London School of Economics.

Professor Edward Page FBA is the Sidney and Beatrice Webb Professor of Public Policy in the Department of Government at LSE. His most recent books are Changing government relations in Europe (London: Routledge, 2010), co-edited with Mike Goldsmith; and Policy Bureaucracy: Government with a cast of thousands (Oxford: Oxford University Press, 2005), co-authored with B. Jenkins.

Ed – in an era of REF Impact and ever greater pressures to ‘chase the money’ – I think you’ve identified an increasingly salient issue.

One element to pick up on though is your musing that – ‘the Coalition government is not as keen as New Labour was on securing evaluation evidence by commissioning research.’

Across the whole of Whitehall that might be true – but they did of course set up the Contestable Policy Fund [https://www.gov.uk/contestable-policy-fund#about-the-fund] – which has itself drawn controversy, as a previous LSE blog by myself and Martin Smith touched on – https://blogs.lse.ac.uk/politicsandpolicy/archives/34544

The rolling out of the CPF also drew expressions of concern from Bernard Jenkin’s PASC Ctte in their recent ‘Truth to Power’ report [http://www.publications.parliament.uk/pa/cm201314/cmselect/cmpubadm/74/74.pdf].

The Report observed:

“43. Questions have been posed about the use of the Contestable Policy-Making Fund in this situation. The Minister stated that the decision was taken to commission this research to “get an outside perspective and some much more detailed research”, following concerns from the Minister that the preparation undertaken by officials for the Civil Service Reform Plan did not provide “very much insight” into the history of Civil Service reform, or international examples. The Minister added that the Cabinet Office “did not have all that many bids” to carry out the work, with several academics and think tanks choosing not to tender for the contract as they did not wish to carry out sponsored research.

44. We welcome the Minister’s publication of the Institute of Public Policy Research report on Civil Service accountability systems. This publication establishes the important precedent that research commissioned by the Contestable Policy Fund should be published and should not be treated in confidence as “advice to ministers”.

45. There is a close correlation between the Institute of Public Policy Research (IPPR) report and the Minister’s thinking, as expressed in his Policy Exchange speech. This does raise questions about how objective research commissioned by ministers in this way might be. It should not be a means of simply validating the opinions of ministers.”

Food for thought when reflecting on the relationship between pressures on independent research and directly government funded [as opposed to RC funded] research.

Do you really mean scientific evidence and the interpretation of textual answers to open questions? Take a look at the 2011/2012 answers, interpretation and outcomes of the HS2 public consultation to see how wrong the parties were.

Transport north and south in England is carried mainly by the motorways, not the railways, for intercity travel, longer commutes and deliveries. Speeds above 70mph are not possible under the speed limit and other statutory regulations.

However, in the future a faster motorway would be possible for cars.

A combined road and rail scheme would cost less to build than a road and a separate railway such as HS2.

Demand from a larger population, advancing towards 80 million, requires a new north-south trunk corridor.

Where was the research contribution to provide the evidence for tomorrow’s infrastructure, please? Wanted and wanting. Yes, please advise, but with some vision that meets reality, not a single person’s or a few people’s view of tomorrow’s world called HS2.