Image credit: CC0 Public Domain.

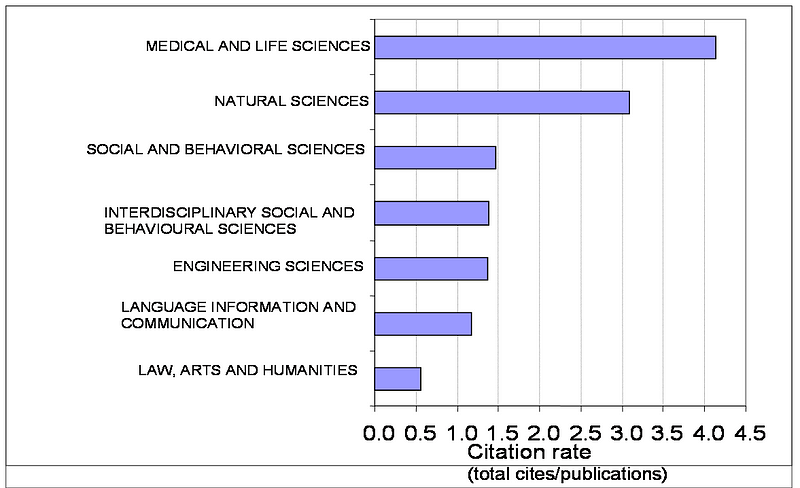

There is a huge gulf between many STEM scientists (in science, technology, engineering and mathematics) and scholars in other disciplines in how often they cite other people’s research in explaining their own findings and arguments. My first chart shows that the citation rate (defined as total citations divided by the number of publications) is eight times greater in medical and life sciences than in the humanities (including law and ‘art’ subjects here). In the ‘natural’ sciences (i.e. the STEM disciplines minus life sciences) the citation rate is six times greater than in the humanities.

Figure 1: Differences in the average aggregate citation rates between major groups of disciplines (that is, total citations divided by number of publications) in 2006

Source: Centre for Science and Technology Studies (2007).

The social sciences as a discipline group are in somewhat better shape than the humanities, with citation rates just beating engineering, the subjects in the large overlap areas between STEM and social science, and information/language studies. Nonetheless the citation rate for natural sciences is twice that in the social sciences, and for life sciences more than 2.5 times as great.

When we turn to looking at academic journals, the gulf in citation practices is even bigger. Traditionally social scientists and even more humanities scholars have tended to dismiss journal-citation results derived from the proprietary bibliographic databases like the Web of Science and Scopus, and they had legitimate grounds for doing so. Until very recently Web of Science completely ignored academic books as sources of citations, although they are very important across the humanities and ‘soft’ social sciences. Its coverage of journals also varied sharply across discipline groups, from 90%+ in particle physics or astronomy (a strong base for analysis) down to 35–40% for the social sciences (a poor basis for any analysis) and below 20% for the humanities (a hopeless basis). Scopus was a bit better in including books, but its inclusion basis was similarly restricted outside the STEM disciplines. Both these old-established, science-biased databases have recently begun trying to get better at covering the humanities and social sciences (with WoS trying to cover books for instance), but they have a huge amount of ground to make up still.

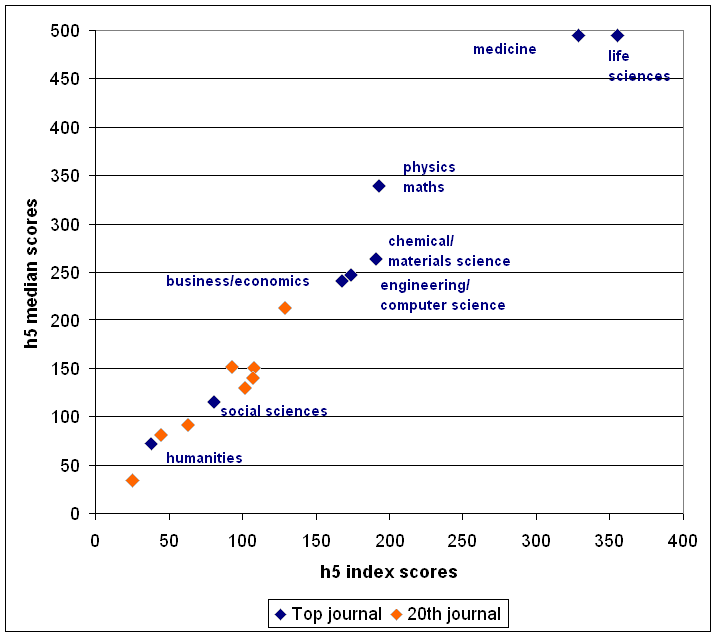

However, the “we can’t be compared with STEM” defense has crumbled away from another angle, with the rapid development of Google Scholar Metrics. These measures of citations look across all sources very evenly (covering journal articles, books, research reports and conference papers) and their cite counts are very inclusive of journals across all the discipline groups. Google also uses excellent indicators of journals’ average citing rates, giving the h score for each journal for the last five years (the h5 index), where an h5 score of 20 shows that 20 articles have each been cited at least 20 times. They also include the number of cites in the last five years for the median article included in the journal’s h5 score, a measure which is called the h5 median. Google’s h5 index and h5 median are robust and meaningful average indicators, and they contrast starkly with the thoroughly discredited ‘journal impact factor (JIF)’, still trumpeted by some mathematically ignorant journal publishers.
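To make the two definitions concrete, here is a minimal Python sketch of how an h5 index and h5 median could be computed from a list of per-article citation counts. The function names and the sample numbers are illustrative assumptions of mine, not Google's code or real journal data.

```python
from statistics import median


def h5_index(citations):
    """Largest h such that h articles each have at least h citations.

    `citations` is assumed to hold one citation count per article
    published in the journal over the last five years.
    """
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


def h5_median(citations):
    """Median citation count among the articles that make up the h5 index."""
    ranked = sorted(citations, reverse=True)
    core = ranked[:h5_index(ranked)]
    return median(core) if core else 0


# Hypothetical journal with eight articles (made-up counts).
sample = [50, 30, 22, 8, 6, 5, 1, 0]
print(h5_index(sample), h5_median(sample))
```

Because the index depends on how many articles clear a rising threshold, one or two runaway papers cannot lift it much, which is why these scores are hard to distort.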

My second chart shows these Google metrics for the topmost journals in each discipline group, and (because this may be an exceptional score) for the journals that are ranked at number 20 in each group. There are huge differences here, with the top scoring medical and life sciences journals getting h5 scores that are nine times larger than top humanities journals, four times those of other social sciences, and twice those of business and economics.

Figure 2: How Google’s metrics vary across discipline groups, for the top journal (blue) and the 20th ranked journal (orange)

At the 20th ranked journal in each discipline group the absolute range of these scores narrows, but the picture stays pretty much the same, as Figure 2 shows. The medical journal ranked 20th still has five times the h5 score of its humanities counterpart, and two and a half times that of its social sciences counterpart. And remember that h5 scores are very resilient indicators anyway, not easily distorted. So the gulf charted here isn’t the product of just a few science super-journals.

And it doesn’t get better when you look at the details. For example:

- the top scoring journals in English literature and literary studies generally have h5 scores of around 10

- in history only two journals make it to h5 scores of 20

- and in political science again only two journals achieve h5 scores of 50.

What should or can be done?

I’ve participated in a great many discussions among social scientists about low citation rates. Typically most people in these conversations treat the issue as of minor significance, indicative only of the myriad external academic cultures that form such a mystery to us, enmeshed as we all are within our single-silo perspectives. The greater volume of STEM science publications is cited as if it explains things. It doesn’t: most individual STEM science papers are not often cited. And thorough or comprehensive referencing is usually pictured as a kind of compulsive behavior by scientists, a hoop-jumping formality often carried to excess. It is rare indeed to hear any social scientist recognize the extent or importance of the search work that STEM researchers must undertake every week, just to keep abreast of their field and a rapidly evolving literature.

I have had fewer discussions with humanities scholars, although senior figures there seem to take a similar stance. The sheer rarity of cross-citing and the general absence of cumulation especially galls some young academics, but there is no developed sense that anything different could or should ever happen. Indeed some despairing observers have concluded that in fields like literary studies we should abandon the current emphasis upon undertaking research altogether, since most of this work will never be referenced or seen as of any provable value by other scholars in the field.

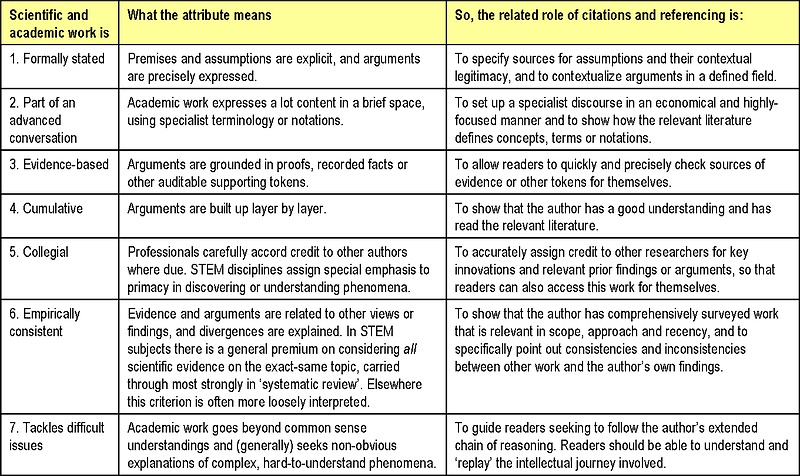

Yet citation and referencing patterns are not trivial things. The patterns charted above are not just a filigree of external appearances that dangles around disciplines without any connection to the seriousness and purposefulness of their fundamental activities. On the contrary, Figure 3 below shows that how we cite and reference other work relates back closely and integrally to how we practice science and scholarship. Full and accurate citations are not a ritualistic or optional extra that we can dispense with at will, nice to have if you’ve got time or a research assistant on your side, but a bit of a chore otherwise. They are instead solid and important indications of the presence of the seven primary academic virtues listed in the leftmost column of Figure 3.

Figure 3: How seven main features of scientific and scholarly work are reflected in referencing and citations

Source: Patrick Dunleavy and Jane Tinkler, Improving the Impacts of University Research, Palgrave, forthcoming.

It follows that those social science and humanities academics who go through life anxious to prove themselves a ‘source’ (of citations) but not a ‘hub’ (of cites to others) are not practicing the reputation-building skills that they fondly imagine. Similarly a scholar who cites other people’s work only if it agrees with theirs, or who sifts the literature in an ideologically-limited or discipline-siloed way, ignoring other views, perspectives and contra-indications from the evidence, is just a poorer researcher. Their practice harms not just themselves, but all who read their works or follow after them as students at any level. Others who perhaps accept such attitudes without practicing them themselves (for example, as departmental colleagues or journal reviewers) are also failing in their scholarly obligations, albeit in a minor way.

To break past such attitudes requires a collective effort in the social sciences and humanities, by all of us, to get better at understanding and summarizing the existing literatures on the myriad topics that we cover. There are now numerous tools for doing so. The now-continuous aggregation and re-aggregation of scientific and scholarly knowledge via social media, especially academic blogging, is one key area of innovation, allowing all of us to keep in scope a wider and more up to date range of debates than ever before. Technical developments in search, most recently Google Scholar Updates and Metrics, have made keeping in touch with specialist areas far easier.

There are also now some very specific and increasingly influential methods for re-aggregating and re-understanding what whole literatures tell us. ‘Systematic review’ is an especially key approach now across the social sciences, spreading in from medicine and the health sciences. It starts by the reviewer clearly delineating (i) the subject, focus and boundaries of the review; and (ii) explicit criteria to be applied in evaluating sources and texts as being high value, medium value or low value. The review begins by considering the full pool of sources, leaving nothing out. The reviewer then systematically applies the criteria already set out, filtering down the studies progressively to focus on the higher value materials. Within the high value studies alone, those with the best evidence or methods employed, the systematic review considers exactly how strong any evidential connections are, and tries to reconcile as far as possible any divergences in estimating effects across the high value studies. Finally the conclusions (a) sum up the central findings; (b) make clear and assess the level of evidence and weight of the materials underpinning the findings; and (c) give a sensitivity analysis of how far the conclusions might differ if the assessment criteria used for focusing down on key studies had been different.
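The filtering and sensitivity-analysis steps can be sketched in a few lines of Python. This is only a toy illustration under loud assumptions: the `Study` fields, the 0 to 1 quality score, the thresholds and the use of a simple mean to pool effects are all hypothetical choices of mine, and a real systematic review would use far richer criteria and reconciliation methods.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Study:
    title: str
    quality: float  # hypothetical 0-1 score from the review's explicit criteria
    effect: float   # hypothetical effect estimate reported by the study


def high_value(studies, threshold):
    """Keep only studies whose quality score meets the stated threshold."""
    return [s for s in studies if s.quality >= threshold]


def pooled_effect(studies):
    """Crude stand-in for reconciling estimates: the mean effect, or None."""
    return mean(s.effect for s in studies) if studies else None


def sensitivity(studies, thresholds):
    """Show how the pooled finding shifts if the inclusion criterion changes."""
    return {t: pooled_effect(high_value(studies, t)) for t in thresholds}


# Start from the full pool of sources (made-up entries), leaving nothing out.
pool = [
    Study("A", 0.9, 0.40),
    Study("B", 0.8, 0.30),
    Study("C", 0.5, 0.10),
    Study("D", 0.2, -0.20),
]

for threshold, effect in sensitivity(pool, [0.75, 0.4, 0.0]).items():
    print(threshold, effect)
```

The point of the last step is that the criteria are explicit and adjustable, so a reader can see how much the conclusion depends on where the reviewer drew the line.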

Compared to systematic review, many current literature reviews undertaken in the social sciences and humanities are just plainly inadequate: they make little or no effort to be comprehensive; they operate on implicit, obscure, varying or inconsistently applied criteria; and they culminate in judgements that can often seem personal to the point of idiosyncrasy. None of this is to say that we should pick up methods unadapted or in formulaic fashion from the STEM sciences. We need to adapt systematic review (and other approaches) to the social sciences and humanities, especially to handle qualitative research and analyses. But some progress has already been made here, and far more can be anticipated as we move into a new era of more cumulation and re-aggregation of academic research.

By improving our currently poor to very poor citation and referencing practices in the social sciences and humanities we can begin to reverse the collective self-harm inherent in the status quo. But far larger gains will also follow as better citation practices allow us to strengthen each of the seven distinctive features of academic knowledge considered in Figure 3 above.

Some related information for the social sciences (and some overlap areas with the humanities, included above) can be found in: Simon Bastow, Patrick Dunleavy and Jane Tinkler, ‘The Impact of the Social Sciences’ (Sage, 2014) or the Kindle edition. You can also read the first chapter for free and other free materials are here.

To follow up relevant new materials see also my stream on Twitter @Write4Research and the LSE Impact blog.