With growing concerns over children’s online privacy and the commercial uses of their data, it is vital that children’s understandings of the digital environment, their digital skills and their capacity to consent are taken into account in designing services, regulation and policy. Our research project set out to ask children directly, which was not a straightforward task.

Focus groups were a key part of the project’s overall methodology. They allow children’s voices and experiences to be expressed in a way that is meaningful to them, and permit children to act as agents in shaping their digital rights, civic participation, and need for support. Drawing on the relevant research literature, our aim was to use real-life scenarios and exemplar digital experiences to facilitate the focus group discussions and to keep children clearly focused on the opportunities, risks, and practical dilemmas posed by the online environment.

Our initial pilot research revealed some challenges of researching children’s privacy online. Children found it hard to discuss institutional and commercial privacy, as well as data profiling, because they seemed to have substantial gaps in their understanding of these areas. They also did not think of their online activities as data, or as a form of sharing personal information. This made it hard to establish how important privacy was for them and what digital skills they had.

To address this, the team took a step-by-step approach. We started with children’s unprompted perceptions of privacy and their practices (for example, in relation to selecting apps, checking age restrictions, reading terms and conditions, changing privacy settings, getting advice about new apps from others) and a quick test of their familiarity with relevant terminology (e.g. cookies, privacy settings, algorithms, facial recognition). We then moved on to more complex areas, such as the types of data they share and with whom, gradually building up a picture of children’s online behaviour and enabling discussion of less familiar areas such as data harvesting and profiling.

An activity that worked really well as an introduction was asking children about the apps and websites they had used over the past week. This very quickly produced a comprehensive picture of their recent activities and the platforms they engage with. The apps they named then offered an opportunity to ask about their practices in a more contextualised and familiar setting, rather than talking about the internet in general, which children found harder.

To discover children’s familiarity with privacy-related terminology, we asked, simply, “Have you heard this word? Can you tell me what it means?” This proved very effective in enabling children to talk about their experiences on their own terms before we gradually introduced more complex privacy issues, such as data sharing.

Another challenge was to help children describe what they share online and their understanding of how additional information might be collected in the background by the apps and used to create a digital profile. The initial idea to ask children to brainstorm about all the data they might have shared, with prompts from the team, was not very effective.

The team therefore re-structured the activities, making them more visual and interactive. This enabled children to engage better with the different dimensions of privacy online and relate their experiences to the discussed issues.

Children were given four sharing options – share with online contacts (interpersonal privacy), share with my school, GP, future employer (institutional privacy), share with companies (commercial privacy), and keep to myself (desire not to share something). After discussion with the UnBias project, we identified 13 types of data that might be shared digitally and reduced these to the nine most relevant categories: personal information, biometric data, preferences, internet searches, location, social networks, school records, health, and confidential information (for more, see here).

Children were asked to say whether they share each type of data with anyone, why they might share it and what the implications might be. This worked really well as it allowed children to relate the examples to the applications they use and to some practical situations (e.g. sharing information about your address with a delivery app).

Interestingly, this exercise demonstrated that children often choose ‘keep to myself’ spontaneously but, as the discussion progressed, they acknowledged that they are sometimes asked for this data and feel obliged to provide it.

Still, thinking about the possible future implications remained a challenge. The discussion often drifted to general internet safety, with children reciting familiar messages about ‘stranger danger’ and password sharing but failing to grapple with how their data might become available online and how it might be used for unintended purposes. A lot of prompting was needed to elicit greater detail about this.

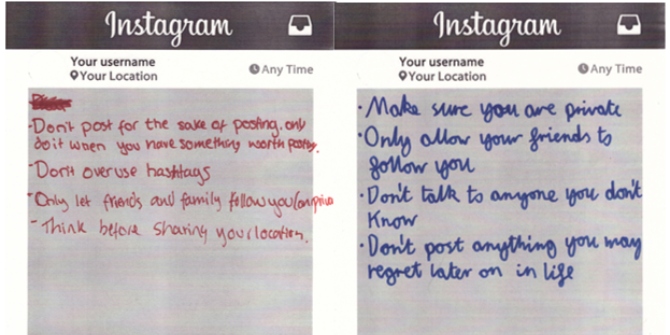

To facilitate children’s thinking about the future and about child development, we designed an activity, ‘Advice to a younger sibling’, in which children wrote a message to an imaginary younger sibling telling them what they need to learn about privacy online. This exercise worked well, but children still struggled to express what effective strategies might be.

Finally, because we felt that some questions might have remained unanswered, we gave children the opportunity to ask any questions they still had and to make suggestions for changes in the future. This worked well, and we will be using this input in the design of the online toolkit for children we’re now developing.

♣♣♣

Notes:

- This blog post was originally published on LSE Media Policy Project. You can read about some research findings here or on the project website, which is updated each month.

- The post gives the views of its author, not the position of LSE Business Review or the London School of Economics.

- Featured image by the authors. Not under Creative Commons. All rights reserved.

Mariya Stoilova is a post-doctoral research officer at LSE and an associate lecturer in psychosocial studies at Birkbeck, University of London. With a strong focus on multi-method analyses, psychosocial research approaches, and policy and practice development, Mariya’s research covers the areas of digital technologies, well-being, and family support; social change and transformations of intimate life; and citizenship and social inequalities.

Rishita Nandagiri is a PhD candidate at LSE’s department of social policy (demography and population studies) and an external graduate associate member of the Centre for Cultures of Reproduction, Technologies and Health, the University of Sussex.

Sonia Livingstone, OBE, is Professor of Social Psychology in the Department of Media and Communications at LSE. She teaches master’s courses in media and communications theory, methods, and audiences and supervises doctoral students researching questions of audiences, publics and youth in the changing digital media landscape. She is author or editor of nineteen books and many academic articles and chapters. She has been visiting professor at the Universities of Bergen, Copenhagen, Harvard, Illinois, Milan, Oslo, Paris II, and Stockholm, and is on the editorial board of several leading journals. She is a fellow of the British Psychological Society, the Royal Society for the Arts, and fellow and past President of the International Communication Association, ICA. Sonia has received honorary doctorates from the University of Montreal and the Erasmus University of Rotterdam. She was awarded the title of Officer of the Order of the British Empire (OBE) in 2014 ‘for services to children and child internet safety.’
