The general view is that the 2020–21 pandemic has already been accelerating the adoption of automation and the move towards digital business. There is strong evidence for this, and for the trend continuing across the 2021–2025 period. Indeed, the case for these technologies has been well made by our experiences in 2020. The technology worked. It provided alternative ways of working in a crisis. It allowed a high degree of virtuality, with all its upsides, in a world rendered physically semi-paralysed, albeit temporarily. It could also provide a foundation for building resilience in the face of increasingly uncertain business environments and future crises. But when we talk of ‘the technology’, there are in fact many emerging digital technologies. Which to bet on?

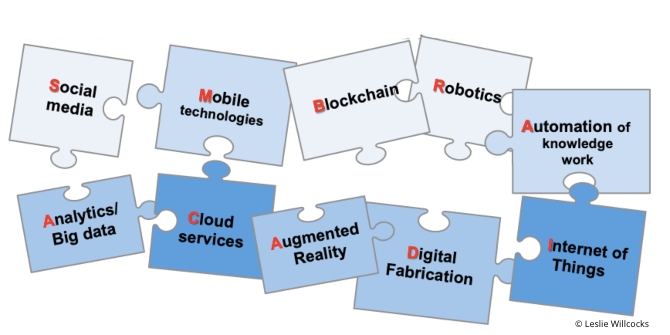

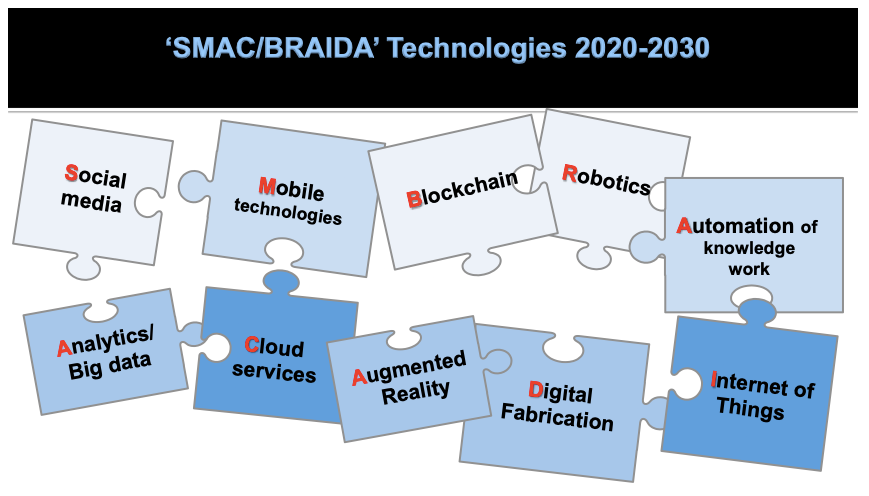

My own view is that ten technologies, which I call SMAC/BRAIDA (social media, mobile, analytics and cloud; blockchain, robotics, automation of knowledge work, internet of things, digital fabrication and augmented reality), are the most obvious candidates, and that, used combinatorially, they represent the basis for most businesses in advanced economies becoming digital by 2030. That long? I hear you ask. Well, yes, because digital transformation is highly challenging – the failure rate has been between 70% and 87%, depending on which study you read and how failure is measured. Why is this? I will discuss the complex factors in a later article, but the first reason becomes obvious when you look at the formidable range of emerging technologies that can be harnessed. Just bringing one of these into an organisation is highly problematic, as we are finding in our work on automation. With digital transformation, organisations are trying to harness, eventually, all ten. To understand the potential and the challenges, we need to look more carefully at the technologies shown in Figure 1.

Figure 1. SMAC/BRAIDA technologies

Let’s start with social media. These are interactive computer-mediated technologies that facilitate the creation and sharing of information, ideas, career interests and other forms of expression via virtual communities and networks. Social media are interactive Web 2.0 Internet-based applications. User-generated content, or user-shared content, such as text posts or comments, digital photos or videos, and data generated through all online interactions, is the lifeblood of social media. Users create service-specific profiles and identities for the website or app that is designed and maintained by the social media organisation. Users usually access social media services via web-based technologies on desktops and laptops, or download apps that offer social media functionality to their mobile devices (e.g., smartphones and tablets). As users engage with these electronic services, they create highly interactive platforms through which individuals, communities and organisations can share, co-create, discuss, participate in and modify user-generated or self-curated content posted online.

Mobile technology is the technology used for cellular communication, and it has evolved rapidly. Since the start of this millennium, a standard mobile device has gone from being no more than a simple two-way pager to being a mobile phone, GPS navigation device, embedded web browser, instant messaging client and handheld gaming console. Many experts believe that the future of computer technology rests in mobile computing with wireless networking. Mobile computing by way of tablet computers is becoming more popular, and tablets are available for 3G, 4G and 5G networks.

Next we have analytics, dealing with the discovery, interpretation, and communication of meaningful patterns in data, and applying data patterns towards effective decision-making. Especially valuable in areas rich with recorded information, analytics relies on the simultaneous application of statistics, computer programming and operations research to quantify performance. Organisations apply analytics to business data to describe, predict, and improve business performance. Specifically, areas within analytics include predictive analytics, prescriptive analytics, enterprise decision management, descriptive analytics, cognitive analytics, big data analytics, retail analytics, supply chain analytics, store assortment and stock-keeping unit optimisation, marketing optimisation and marketing mix modelling, web analytics, call analytics, speech analytics, sales force sizing and optimisation, price and promotion modelling, predictive science, credit risk analysis, and fraud analytics. The algorithms and software used for analytics harness the most current methods in computer science, statistics, and mathematics.
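To make the difference between describing and predicting business performance concrete, here is a minimal Python sketch. The monthly sales figures are hypothetical, and the ordinary least-squares trend line is just one simple predictive technique chosen for illustration, not a method the article prescribes.

```python
from statistics import mean

def describe(values: list[float]) -> dict[str, float]:
    """Descriptive analytics: summarise what has already happened."""
    return {"mean": mean(values), "min": min(values), "max": max(values)}

def fit_trend(values: list[float]) -> tuple[float, float]:
    """Fit an ordinary least-squares line y = a + b*t, where t = 0, 1, 2, ...
    Needs at least two data points."""
    ts = range(len(values))
    t_bar, y_bar = mean(ts), mean(values)
    num = sum((t - t_bar) * (y - y_bar) for t, y in zip(ts, values))
    den = sum((t - t_bar) ** 2 for t in ts)
    slope = num / den
    return y_bar - slope * t_bar, slope

def predict(values: list[float], steps_ahead: int = 1) -> float:
    """Predictive analytics: extrapolate the fitted trend into future periods."""
    intercept, slope = fit_trend(values)
    return intercept + slope * (len(values) - 1 + steps_ahead)

# Hypothetical monthly sales figures, for illustration only.
sales = [100.0, 110.0, 120.0, 130.0]
print(describe(sales))  # {'mean': 115.0, 'min': 100.0, 'max': 130.0}
print(predict(sales))   # 140.0
```

Real predictive analytics would use richer models and check how well they fit; a straight-line trend simply shows the step from summarising past data to projecting it forward.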

A foundational technology for the others is cloud computing – the on-demand availability of computer system resources, especially data storage and computing power, without direct management by the user. The term is generally used to describe data centres made available to many users over the Internet. Cloud computing is usually bought on a pay-per-use basis from a cloud vendor, for example Amazon Web Services. Today’s large clouds often have functions distributed from central servers over multiple locations. Clouds may be limited to a single organisation (enterprise or private clouds) or be available to many organisations (public clouds). Cloud computing relies on the sharing of resources to achieve coherence and economies of scale. The advantages of cloud computing are said to be that companies avoid or minimise up-front IT infrastructure costs; enterprises can get their applications up and running faster; manageability improves and less maintenance is needed; and IT teams can adjust resources more rapidly to meet fluctuating and unpredictable demand. On the downside, people cite perceived insecurity, especially with the public cloud, regulatory compliance problems, difficulties integrating with existing IT and business processes, in-house skills shortages, sometimes inflexible contracting and pricing, and lock-in to suppliers.
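The pay-per-use argument, and the point about fluctuating demand, can be illustrated with some simple arithmetic. In this minimal Python sketch the prices and demand profiles are hypothetical, and it ignores real-world factors such as staffing, data-transfer charges, discounts and contract terms.

```python
# All prices and demand figures are hypothetical, chosen only to illustrate the trade-off.
OWNED_COST_PER_SERVER_HOUR = 0.25   # assumed amortised cost of one owned server for one hour
CLOUD_PRICE_PER_SERVER_HOUR = 0.50  # assumed pay-per-use price for one server-hour

def owned_cost(hourly_demand: list[int]) -> float:
    """Owned capacity must be sized for the peak hour and is paid for in every hour."""
    return max(hourly_demand) * len(hourly_demand) * OWNED_COST_PER_SERVER_HOUR

def pay_per_use_cost(hourly_demand: list[int]) -> float:
    """Cloud capacity tracks demand hour by hour, so only servers actually used are billed."""
    return sum(hourly_demand) * CLOUD_PRICE_PER_SERVER_HOUR

spiky = [2, 2, 2, 10, 2, 2]  # servers needed per hour, with one demand spike
steady = [10] * 6            # constant high demand

print(owned_cost(spiky), pay_per_use_cost(spiky))    # 15.0 10.0
print(owned_cost(steady), pay_per_use_cost(steady))  # 15.0 30.0
```

Under these assumptions, pay-per-use is cheaper when demand is spiky, while owned capacity can be cheaper when demand is high and steady, which is one reason the cost case for cloud varies between organisations.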

There has been much excitement about blockchain, mainly because of the high-profile debates about cryptocurrencies. A blockchain consists of a growing list of records, called blocks, which are linked using cryptography. Each block contains a cryptographic hash of the previous block, a timestamp and transaction data. By design, a blockchain is resistant to modification of its data. It has been described as an open, distributed ledger that can record transactions between two parties efficiently and in a verifiable and permanent way. For use as a distributed ledger, a blockchain is typically managed by a peer-to-peer network collectively adhering to a protocol for inter-node communication and for validating new blocks. Blockchain technology has already been used as the basis for many cryptocurrencies and for smart contracts, as well as in supply chains and financial services, for example distributed ledgers and settlement systems in banking. Proponents suggest it has massive business potential, but also that it will take time to have large-scale impacts.
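The hash-linking described above can be sketched in a few lines of Python. This is a minimal, single-machine illustration, assuming SHA-256 over a JSON encoding of each block; it deliberately leaves out the peer-to-peer network, consensus protocol and block-validation rules that real blockchains rely on, and the transaction fields are hypothetical.

```python
import hashlib
import json
import time

def block_hash(block: dict) -> str:
    """SHA-256 over a canonical (key-sorted) JSON encoding of the block's contents."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def make_block(prev_hash: str, transactions: list[dict]) -> dict:
    """A block holds the previous block's hash, a timestamp and transaction data."""
    return {"prev_hash": prev_hash, "timestamp": time.time(), "transactions": transactions}

def is_valid(chain: list[dict]) -> bool:
    """Every block must store the current hash of the block before it."""
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1]) for i in range(1, len(chain)))

genesis = make_block("0" * 64, [])
b1 = make_block(block_hash(genesis), [{"from": "alice", "to": "bob", "amount": 5}])
b2 = make_block(block_hash(b1), [{"from": "bob", "to": "carol", "amount": 2}])
chain = [genesis, b1, b2]
print(is_valid(chain))  # True

chain[1]["transactions"][0]["amount"] = 500  # tamper with a recorded transaction
print(is_valid(chain))  # False: b2's stored prev_hash no longer matches b1
```

Changing any recorded transaction changes that block's hash, which breaks the link stored in the next block; this is what makes tampering detectable.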

Then there is robotics, which is an interdisciplinary branch of engineering and science that includes mechanical engineering, electronic engineering, information engineering, and computer science. Robotics deals with the design, construction, operation, and use of robots, as well as computer systems for their control, sensory feedback, and information processing. These technologies are used to develop machines that can substitute for humans and replicate human actions. Robots can be used in many situations and for lots of purposes, including in dangerous environments, manufacturing processes, and increasingly in service settings, e.g. healthcare.

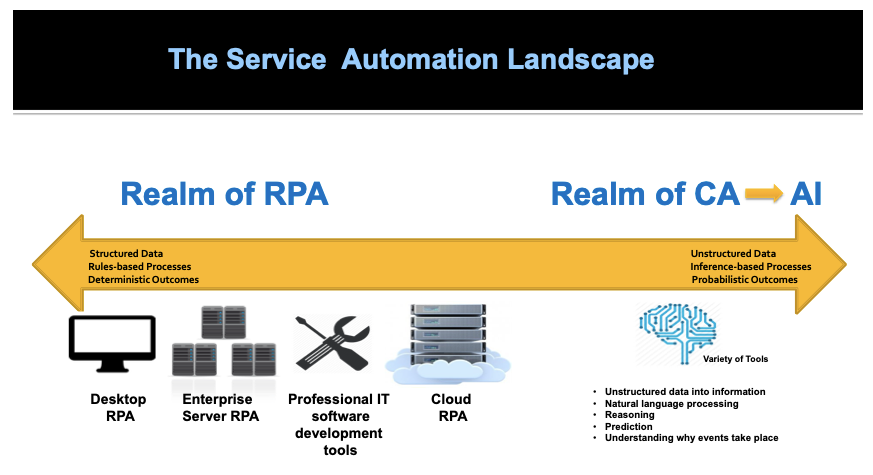

My primary research ground has been the automation of knowledge work. This involves software called robotic process automation (RPA), which automates simple, repetitive processes by taking structured data and applying preconfigured rules to produce a deterministic outcome, i.e. a correct answer. It is generically applicable to most business processes. Its limitations mean that it is increasingly complemented and augmented by cognitive automation (CA) software, which can process unstructured as well as structured data through algorithms (pre-built from training data) to arrive at probabilistic outcomes (see Figure 2). For example, in one deployment Zurich Insurance used cognitive technology to check accident claims, examining the medical records supplied by the claimant and pictures of injuries to establish the claim and how much to pay out. The process used to take a human some 59 minutes but subsequently took less than six seconds, dramatically reducing cost and time while improving accuracy. Artificial intelligence is an umbrella term used to cover all automation technologies, though the vast majority in use as of 2021 did not match the strong definition of AI as ‘getting computers to do the sorts of things human minds can do’.

Figure 2. The service automation landscape
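The contrast between the deterministic outcomes of RPA and the probabilistic outcomes of cognitive automation can be sketched in Python using a hypothetical claims example. The rule thresholds, the `damage_score` feature (standing in for what a cognitive tool might extract from photos or medical records) and the logistic-model weights are all assumptions for illustration; they are not drawn from the Zurich deployment or from any particular RPA or cognitive automation product.

```python
import math
from dataclasses import dataclass

@dataclass
class Claim:
    amount: float        # structured field
    policy_active: bool  # structured field
    damage_score: float  # hypothetical 0..1 feature extracted from unstructured data

def rpa_route(claim: Claim) -> str:
    """RPA-style: preconfigured rules on structured data give a deterministic outcome."""
    if not claim.policy_active:
        return "reject"
    if claim.amount <= 1000:  # assumed auto-approval threshold
        return "auto-approve"
    return "refer to human"

# Placeholder weights standing in for a model trained on historical claims.
WEIGHTS = {"bias": -2.0, "damage_score": 4.0}

def ca_approval_probability(claim: Claim) -> float:
    """Cognitive-automation-style: a logistic model score gives a probabilistic outcome."""
    z = WEIGHTS["bias"] + WEIGHTS["damage_score"] * claim.damage_score
    return 1.0 / (1.0 + math.exp(-z))

claim = Claim(amount=800.0, policy_active=True, damage_score=0.5)
print(rpa_route(claim))                # auto-approve
print(ca_approval_probability(claim))  # 0.5
```

The RPA function always returns the same answer for the same structured input, while the cognitive function returns a probability that a business would still need to turn into a decision, for instance by setting a threshold or referring borderline cases to a person.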

Another foundational technology, because it collects data, is the internet of things. IoT is a system of interrelated computing devices and mechanical and digital machines, including sensors, that are provided with unique identifiers (UIDs) and the ability to transfer data over a network without requiring human-to-human or human-to-computer interaction. The internet of things has evolved through the convergence of multiple technologies, including real-time analytics, machine learning, commodity sensors and embedded systems. In the consumer market, IoT is used in so-called ‘smart homes’, but it has many other uses for collecting data through sensors, for example traffic and people monitoring, creating customer intelligence, and building data for real-time business analytics. There are serious concerns about the growth of IoT, especially in the areas of privacy and security, and industry and governments have consequently been taking steps to address them.
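The core IoT pattern, devices with unique identifiers sending data that is analysed as it arrives, can be sketched in Python. This is a hypothetical, in-process illustration: the `Sensor` and `Collector` classes are invented for the example, a random UUID stands in for the device UID, and the network transport between device and collector is omitted entirely.

```python
import uuid
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class Sensor:
    """A hypothetical connected device with its own unique identifier (UID)."""
    location: str
    uid: str = field(default_factory=lambda: str(uuid.uuid4()))

    def reading(self, value: float) -> dict:
        # A self-describing message the device could send with no human involvement.
        return {"uid": self.uid, "location": self.location, "value": value}

class Collector:
    """Aggregates incoming readings per device as they arrive (a running average)."""

    def __init__(self) -> None:
        self.totals = defaultdict(float)
        self.counts = defaultdict(int)

    def ingest(self, message: dict) -> None:
        self.totals[message["uid"]] += message["value"]
        self.counts[message["uid"]] += 1

    def average(self, uid: str) -> float:
        return self.totals[uid] / self.counts[uid]

entrance = Sensor("store entrance")  # e.g. a footfall counter
collector = Collector()
for people_per_minute in [10.0, 20.0, 30.0]:  # hypothetical readings
    collector.ingest(entrance.reading(people_per_minute))
print(collector.average(entrance.uid))  # 20.0
```

Keying everything on the device UID is what lets readings from many sensors be told apart and combined for people monitoring or customer intelligence without anyone entering the data by hand.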

We move on to digital fabrication and modelling, which is a design and production process that combines 3D modelling or computer-aided design (CAD) with additive and subtractive manufacturing. Additive manufacturing is also known as 3D printing, while subtractive manufacturing may also be referred to as machining. Many other technologies can be exploited to physically produce the designed objects.

Finally, there is augmented reality. AR is an interactive experience of a real-world environment in which objects that reside in the real world are enhanced by computer-generated perceptual information, sometimes across multiple sensory modalities. The overlaid sensory information can be constructive (i.e. additive to the natural environment) or destructive (i.e. masking the natural environment). This experience is seamlessly interwoven with the physical world, such that it is perceived as an immersive aspect of the real environment. The primary value of augmented reality lies in the way components of the digital world blend into a person’s perception of the real world, not as a simple display of data, but through the integration of immersive sensations that are perceived as natural parts of an environment.

We have deliberately focused here on generic digital technologies that can be used across sectors. Clearly, impactful biotechnologies are also being developed, and interesting progress is being made in computing, for example quantum computing. Looking across the impressive technologies we have highlighted, you will quickly get a sense of their potential to be applied in organisations and drive business value, especially when they begin to be used, as some already are, in combination. McKinsey Global Institute has estimated that applying these technologies could add $13 trillion to global GDP by 2030. But historically there is a long and challenging road to implementing a major technology, let alone exploiting it. On past experience, it can take from eight to 26 years for one such technology to be deployed by 90% of organisations across sectors. Certainly, that is how it has been playing out so far with cloud computing, robotics, automation of knowledge work, internet of things and analytics, with social media and mobile, of course, adopted more quickly, and blockchain, digital fabrication and augmented reality deployed more slowly. Such technologies are not silver bullets, fire-and-forget missiles, or plug-and-play – whatever metaphor we might use to mislead ourselves. Based on our most recent research, we will look at the challenges and routes forward in a later article.

♣♣♣

Notes:

- This blog post is based on the forthcoming book Willcocks, L. (2021) Global Business: Management, available from SB Publishing from January 2021.

- The post expresses the views of its author(s), not the position of LSE Business Review or the London School of Economics.

- Featured image by Leslie Willcocks © All Rights Reserved, NOT under Creative Commons.

Leslie Willcocks is a professor of technology, work and globalisation at LSE’s department of management.