As academics work to illustrate the true value of their research to the public, some might struggle to make a seemingly esoteric project seem relevant, accessible and interesting to a wider audience. Alastair Dunning believes that the advancement of online crowdsourcing may hold some answers.

In the push to make clear and unquestionable links between research and its effects on society, academics with seemingly esoteric projects might struggle to make their work accessible and interesting to the public. But projects centring on Scots language dictionaries, tattered Greek papyri and Bentham’s philosophy of utilitarianism have all made the jump through innovative use of crowdsourcing. A growing number of projects, such as Ancient Lives, Transcribe Bentham, Old Weather and Scots Words and Places, are making sophisticated use of the web to actively engage the general public as contributors to their research.

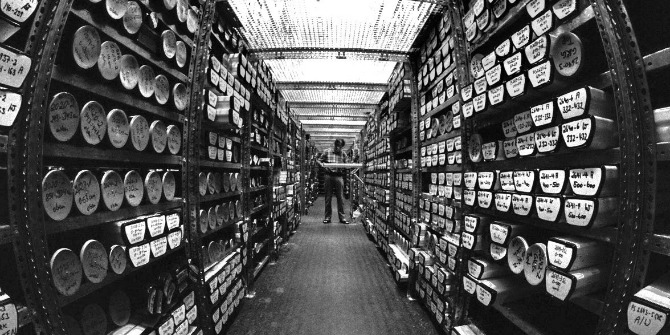

Old Weather, for example, invites the general public to transcribe naval logs, thus providing crucial meteorological data for climate scientists, as well as opening up sources for the history of the British navy. Transcribe Bentham works with a range of groups, in particular schools, to decipher the numerous papers of Jeremy Bentham. For such projects, securing user contributions is about much more than impact. They provide a venue for communities outside academia to play a meaningful role within university research, providing insight and knowledge, saving time, and facilitating the route towards high-quality outputs.

It is worth remembering that crowdsourcing predates the digital era; the Oxford English Dictionary was initially built on contributions from volunteers and there is a long tradition of active contributions from the public within many fields of the social sciences.

But the development of crowdsourcing on the internet has rapidly accelerated the sophistication of its methodologies. Recent projects have been particularly adept at using social media, developing refined mechanisms for ensuring that contributions are quality assured, working with large data sets, and creating interfaces that interact in a way that reduces complexity and confusion.

These developments mean that there are suddenly novel prospects for future projects to interact with much larger audiences than previously, and to do so in a much more effective manner.

Of course, there are plenty of research projects that do not lend themselves to this kind of public engagement whatsoever. That’s fine. But for other projects, even those that could seem recondite in nature, there are opportunities to explore.

So as crowdsourcing advances, a vital factor will be the sensitivity with which the needs and motivations of those taking part are understood. If the research community engages the public in a utilitarian sense, as just cogs in a larger research wheel, then the whole methodology will become imperilled. Understanding what moves an inhabitant of a specific community, a child in the schoolroom, or the ‘silver surfer’ with a new internet connection, and making sure their input is suitably recognised is crucial.

Engagement, as Chris Batt pointed out in his report on the topic, must be a two-way conversation: “knowledge co-creation and exchange rather than simply knowledge transfer: a dialogue which enriches knowledge for mutual benefit.”

The task of the University of Oxford’s RunCoCo team was to develop guidelines for projects wishing to develop digitised collections by asking the public to upload their own content or add information to existing resources, as happened with the highly successful Great War Archive. Equally, the Citizen Science Alliance is working according to firm principles on how to interact with its users, as articulated in Arfon Smith’s podcast on the success of the Galaxy Zoo project. Indeed, the Alliance is now looking for other researchers with whom to work and is requesting proposals for ideas.

If crowdsourcing is to continue to be embedded in research, then it is the principles and thinking drawn from RunCoCo or the Citizen Science Alliance that need to be adopted, adapted and implemented. There is a wealth of UK research that can be enhanced by the involvement of an engaged, knowledgeable and passionate UK public.

Stuff like http://dailycrowdsource.com/2011/01/11/technology/new-game-taps-crowdsourcing-to-help-scientists/ has led to crowdsourced data being referenced in academic papers, though it might be harder to make games for the social sciences.

Thank you for this; a very insightful post. It’s very good to see incredible research projects highlighted. I also enjoyed your recent post on crowdsourcing for the Guardian Higher Education Network, by the way.

I’m glad you’ve addressed an essential point, which is the importance of engagement and a sensitivity towards contributors. I’m an enthusiast of crowdsourcing and its research potential, but in practice I miss more awareness of what Chris Batt alludes to in his report. This “two-way conversation” needs to take place within clear guidelines and terms of reference. I am worried about how crowdsourcing fits within a current devaluation or lack of appreciation of intellectual work (in terms of its symbolic and financial recognition, not its quality).

In academic research, the stimulus seems to be both funding (the money that makes the project possible) and credit (reputation, impact, authority). Someone benefits from the funding and someone benefits from signing the publications. When “the crowd” is essential for this work, what systems are implemented to ensure that the work relationship remains ethical and fair? When can crowdsourcing be seen as a cheeky exploitation of free intellectual labour and a remedial means to “make do” in the context of budget cuts, and how can we ensure it is not?

Crowdsourcing is openly seen as an effective way of saving time and money, but the level of time, expertise and money employed in the engagement strategy that a large project would require with its voluntary contributors should not be underestimated. If “the crowd” will not be paid for their time, expertise and work, the least they can get is public acknowledgement and credit… or at the very least, depending on the case, polite thanks. Implementing good practice strategies to ensure ethical and fair collaboration with ‘external’ contributors seems to me something that should no longer be postponed.

Article on the ABC Science website on citizen science: http://www.abc.net.au/science/articles/2010/04/15/2872493.htm.