Being able to find, assess and place new research within a field of knowledge is integral to any research project. For social scientists, this process is increasingly likely to take place on Google Scholar, closely followed by traditional scholarly databases. In this post, Alberto Martín-Martín, Enrique Orduna-Malea, Mike Thelwall and Emilio Delgado-López-Cózar analyse the relative coverage of the three main research databases (Google Scholar, Web of Science and Scopus), find significant divergences in the social sciences and humanities, and suggest that researchers face a trade-off when choosing between databases: more comprehensive but disorderly systems, or orderly but limited ones.

Researchers routinely use databases such as Google Scholar, Web of Science, and Scopus to search for scholarly information and to consult bibliometric indicators such as citation counts. Understanding the basic characteristics of these services is needed both for effective literature searches and for deciding whether their indicators are appropriate for use in research evaluations, yet the differences between these databases in coverage and data reliability are still not widely known.

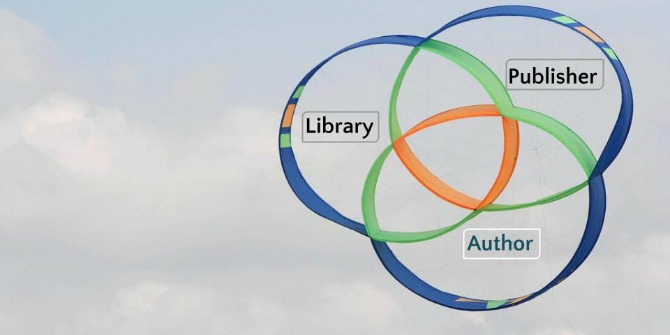

A crucial difference between these services is their approach to document inclusion. Web of Science and Scopus rely on a set of source selection criteria, applied by expert editors, to decide which journals, conference proceedings, and books the database should index. Conversely, Google Scholar follows an inclusive and automated approach, indexing any (apparently) scholarly document that its robot crawlers are able to find on the academic web.

Each approach has its pros and cons. The selective approach of Web of Science and Scopus produces a curated collection of documents, but is sensitive to biases in the selection criteria. Indeed, evidence has shown that these databases have limited coverage of the Social Sciences and Humanities, of literature written in languages other than English, and of scholarly documents other than journal articles. For its part, Google Scholar's inclusive and unsupervised approach maximises coverage, giving each article "the chance to rise on its own merit". However, it also introduces technical errors into the platform, such as duplicate entries that refer to the same document, incorrect or incomplete bibliographic information, and the inclusion of non-scholarly materials.

We recently tested how coverage differs among these three data sources across subject categories. For a sample of over 2,500 very highly cited documents in 252 subject categories, released by Google Scholar in 2017, we checked whether the documents were also covered by Web of Science and Scopus. This comparison favours Google Scholar, since it is the original source of the documents, but it is nevertheless a reasonable test, since any scholarly database ought to have quite comprehensive coverage of highly cited documents. The results showed that, even within this highly selective set of documents (all published in English), a significant proportion of those in the Social Sciences and Humanities were not covered by the selective databases. In most cases, the cause was that the database did not cover the journal at the time the article was published.

We then decided to dig deeper into this issue. For every highly cited document in the sample, we collected the complete list of citations that each of the three databases provided, and identified the overlapping and unique citations. This new sample, which amounted to just under 2.5 million citations, gave us a more detailed picture of the relative differences in coverage across the three databases, not only at the level of broad areas, but also for each of the 252 subject categories.
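Once citations have been matched across databases, separating overlapping from unique citations is a matter of set operations. The following is a minimal Python sketch, assuming each citing document already carries a shared identifier (for example a DOI or a cleaned title) in all three databases; the function name `classify_citations` and the toy data are hypothetical, not the authors' actual pipeline. In practice, the matching step itself is the hard part, given the duplicates and incomplete metadata mentioned above.

```python
def classify_citations(found_by):
    """Split matched citing-document IDs into unique and overlapping sets.

    found_by maps a database name to the set of citing-document IDs it
    reported. Assumes IDs were already matched across databases.
    """
    all_ids = set().union(*found_by.values())

    # Citations that exactly one database found, keyed by that database.
    unique = {}
    for db, ids in found_by.items():
        others = set().union(*(s for name, s in found_by.items() if name != db))
        unique[db] = ids - others

    # Citations found by two or more databases.
    overlapping = {
        cid for cid in all_ids
        if sum(cid in ids for ids in found_by.values()) >= 2
    }
    return unique, overlapping


# Hypothetical toy data: letters stand in for matched citing documents.
found_by = {
    "Google Scholar": {"a", "b", "c", "d", "e"},
    "Web of Science": {"a", "b"},
    "Scopus": {"a", "b", "c", "f"},
}
unique, overlapping = classify_citations(found_by)
print({db: sorted(ids) for db, ids in unique.items()})
# {'Google Scholar': ['d', 'e'], 'Web of Science': [], 'Scopus': ['f']}
print(sorted(overlapping))
# ['a', 'b', 'c']
```

Every pooled citation lands in exactly one bucket: unique to one database, or overlapping.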

The results by broad areas showed that Google Scholar was able to find most of the citations to Social Sciences articles (94%), while Web of Science and Scopus found 35% and 43%, respectively. Moreover, Google Scholar appeared to be a superset of Web of Science and Scopus, as it was able to find 93% of the citations found by Web of Science, and 89% of the citations found by Scopus. Last but not least, over 50% of all the citations to Social Science articles were only found by Google Scholar. The same analysis was applied to the 252 specific subject categories, and can be viewed in this interactive web application.
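The percentages above are ratios over matched citation sets. As a hedged sketch, assuming each database's coverage is measured against the pooled set of citations found by any of the three (our assumption about the denominator, not a detail stated in this post), they could be computed as follows; `coverage_stats` and the toy data are hypothetical.

```python
def coverage_stats(found_by, reference="Google Scholar"):
    """Return coverage shares, containment shares and the reference-only share.

    Assumption: coverage is measured against the pooled set of citations
    found by any database, not against an external ground truth.
    """
    pooled = set().union(*found_by.values())
    ref_ids = found_by[reference]

    # Share of all pooled citations that each database finds.
    coverage = {db: len(ids) / len(pooled) for db, ids in found_by.items()}

    # Share of each other database's citations that the reference also finds.
    contained = {
        db: len(ids & ref_ids) / len(ids)
        for db, ids in found_by.items()
        if db != reference and ids
    }

    # Share of pooled citations that only the reference database finds.
    others = set().union(*(ids for db, ids in found_by.items() if db != reference))
    only_ref = len(ref_ids - others) / len(pooled)
    return coverage, contained, only_ref


found_by = {  # hypothetical toy data
    "Google Scholar": {"a", "b", "c", "d", "e"},
    "Web of Science": {"a", "b"},
    "Scopus": {"a", "b", "c", "f"},
}
coverage, contained, only_gs = coverage_stats(found_by)
for db, share in coverage.items():
    print(f"{db}: {share:.0%} of pooled citations")
print({db: f"{s:.0%}" for db, s in contained.items()})
print(f"Only in Google Scholar: {only_gs:.0%}")
```

The "superset" statement corresponds to the `contained` values being close to 100%, while the "only found by Google Scholar" figure is `only_ref`.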

The large proportion of citations that are only found by Google Scholar, especially in the Social Sciences, the Humanities, and Business, Economics & Management, raises the question of which types of sources Google Scholar covers that the other databases do not. To provide an answer, we identified the document types and the languages of the citations in our sample, and compared the proportions of document types and languages of citations only found by Google Scholar on one side (unique citations in Google Scholar), and citations found by two or more databases on the other (overlapping citations). The results were aggregated at the level of broad areas.
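Comparing document types (or languages) between unique and overlapping citations amounts to comparing two categorical distributions. A minimal sketch, assuming each citation has already been labelled with a document type; the labels, counts, and function names below are hypothetical.

```python
from collections import Counter


def proportions(labels):
    """Map each label to its share of the list."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def compare(unique_labels, overlapping_labels):
    """Pair up proportions for unique vs overlapping citations, per label."""
    p_unique = proportions(unique_labels)
    p_overlap = proportions(overlapping_labels)
    labels = sorted(set(p_unique) | set(p_overlap))
    return {lab: (p_unique.get(lab, 0.0), p_overlap.get(lab, 0.0)) for lab in labels}


# Hypothetical document-type labels; the same code works for languages.
unique_gs = ["thesis", "book", "journal", "preprint", "journal"]
overlapping = ["journal", "journal", "journal", "conference"]

result = compare(unique_gs, overlapping)
for doc_type, (u, o) in result.items():
    print(f"{doc_type:<11} unique GS {u:.0%}   overlapping {o:.0%}")
```

Labels absent from one group are reported as 0% rather than dropped, so types that appear only among Google Scholar's unique citations remain visible.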

The majority (~60%) of the citations found only by Google Scholar come from non-journal sources: among these we find theses and dissertations, books and book chapters, not-formally-published papers such as preprints and working papers (especially important in Business and Economics), and conference papers. Nevertheless, a large proportion of citations to Social Sciences and Humanities articles still come from journals that are not indexed in Web of Science or Scopus. A significant minority of the citations to Social Sciences and Humanities articles that only Google Scholar can find also come from documents published in languages other than English, which the selective databases do not cover.

Interestingly, despite the significant differences in coverage, and despite the known errors that may be present in the data from Google Scholar, which we did not attempt to eliminate (e.g. inflated citation counts caused by duplicate entries), Spearman correlations between citation counts are very strong across all areas and databases (in most cases over .90, although sometimes lower in some fields of the Humanities). Thus, using Google Scholar citation counts in research evaluations would be unlikely to produce large changes in the results. Google Scholar data would be particularly useful when there is reason to believe that documents not covered by Web of Science or Scopus are important for an evaluation.
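Spearman's rho is the Pearson correlation computed on ranks, which is why it can be very high even though Google Scholar reports much larger absolute counts: only the ordering of documents matters. A minimal pure-Python sketch, giving tied counts the average of their rank positions; the citation counts in the example are invented.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j over the run of values tied with values[order[i]].
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho as the Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


# Invented counts: the same five documents in two databases.
gs_counts = [120, 45, 300, 12, 80]
wos_counts = [70, 20, 150, 3, 40]
print(round(spearman(gs_counts, wos_counts), 3))  # 1.0: identical ordering
```

On real data, `scipy.stats.spearmanr` computes the same statistic and also assigns average ranks to ties.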

In conclusion, the inclusive paradigm of document indexing popularised by Google Scholar facilitates the discovery not only of the most well-known sources, but also of sectors of scholarly communication that were previously hidden from view. This can be useful in literature searches, as well as for those who need to compile evidence of research impact for a collection of outputs, but at the same time it has created some problems of its own. The question, as our colleague Professor Harzing put it, is whether we are ready to accept a trade-off: going beyond the comfortable and orderly borders of curated databases in exchange for more diverse coverage. Our hope is that these results can help researchers and other stakeholders make informed decisions in this regard.

This post draws on the authors' co-authored article, Google Scholar, Web of Science, and Scopus: a systematic comparison of citations in 252 subject categories, available on SocArXiv.

About the authors

Alberto Martín-Martín is a lecturer in the department of communication and information science at the Universidad de Granada, Spain.

Enrique Orduna-Malea is an assistant professor at the Universitat Politècnica de València, Spain.

Mike Thelwall is a professor of information science at the University of Wolverhampton, UK.

Emilio Delgado-López-Cózar is Professor of Research Methods at the Universidad de Granada, Spain.

Note: This article gives the views of the authors, and not the position of the LSE Impact Blog, nor of the London School of Economics. Please review our comments policy if you have any concerns on posting a comment below.

Featured image credit: adapted from Fernand de Canne via Unsplash (licensed under a CC0 1.0 licence).

Google Scholar’s database is more likely than other databases to contain sources referenced by sources in the Google Scholar database. I can’t see how this is not a biased experiment and a trivial result.

Hi Paul,

The comparison in this study is among citations to documents that are covered by the three databases: Google Scholar, Web of Science, and Scopus. Yes, the initial seed sample of cited documents was selected from Google Scholar, which could give it an unfair advantage. But we think this advantage is not substantial, as the comparison of citation counts of the citing documents also shows that Google Scholar systematically finds more citations than Web of Science and Scopus. This is nothing new. All studies in the related literature find this effect. The novelty of this study is that it applies the analysis to a larger sample, and provides results for many subject categories.

Dear Alberto, thanks for your response. I think it would be interesting to repeat your experiment drawing the initial seed samples from Web of Science and Scopus. I think it may be found that each database will favour citations to documents which it contains. The databases are possibly beginning to diverge as separate citation "communities" form within each one. It would be interesting to also add time-dependence to the analysis. I think a tool such as network analysis could provide useful information as to the degree of overlap between the databases.

Dear Paul,

Thank you for your suggestion. In the future we may test how different the results are if the seed sample is selected from other sources. However, the data we have observed so far, in our own studies and in studies by other researchers, does not seem to support the hypothesis of divergent citation communities based on data source. This is because these three sources do not tap fundamentally different collections of documents. They are all multidisciplinary scholarly databases, some more selective, some more inclusive. Therefore, there is a significant overlap among all of them. Most of what is covered by Web of Science is also covered by Scopus (which has larger coverage), and most of what is covered by these two is also covered by Google Scholar (which is larger still).

As an example, in a different study where we wanted to analyse Open Access availability in Google Scholar, the initial document selection was done in Web of Science. We selected all articles and reviews in two different publication years (~2.3 million documents). We searched each of these documents in Google Scholar, and we were able to find 97.6% of them. This corroborates the finding in this study that Google Scholar is a superset of Web of Science in terms of document coverage. Since linking this other study might flag this comment as SPAM, I’ll just leave the title: “Evidence of Open Access of scientific publications in Google Scholar: a large-scale analysis”.

Recently, another study has built on this one, but focusing exclusively on the discipline of Operations Management. They use a different seed sample (documents published in a journal covered by Web of Science, Scopus, and Google Scholar). Their citation overlap analysis yields very similar results to ours. This is also significant because they took the time to remove all the duplicate citations and other errors found in the data extracted from Google Scholar. The title of this study is: “An evaluation of Web of Science, Scopus and Google Scholar citations in operations management”.

Yes, I quite agree with the central arguments and findings of this piece. But my take is that the whole debate speaks to a broader issue relating to the political economy of knowledge production, dissemination and consumption. Whether elastic or restricted, all the databases lubricate the wheel of capitalism and reinforce the need to reconsider certain issues in north-south relations in the knowledge industry.

Interesting research, thank you for sharing!

Personally, I would love to see this type of research with the Open Academic Graph https://www.openacademic.ai/oag/, which is starting to become popular with other free-to-search discovery engines.

This study and others doing comparisons are very valuable to research librarians who get this question over and over, especially from those seeking promotion and tenure. Faculty and committees seek to understand what their citation counts mean, what the norms are in terms of their subfields, and how they can understand available metrics provided by all three resources (such as differences in h-index as reported by each). Your research and this paper have been valuable to my work as a science librarian. Thank you.

Content and coverage is important! But how useful without considering search interface, presentation of search results and search methodology? If you can’t retrieve it, what’s the purpose? Probably a big difference between the two databases and the search engine. Purpose must come first, not content or coverage!

OK! Interesting, if you are searching for articles that you already have information about. But then, why search for them again… More interesting: if you didn't know about these highly cited articles at all and started to search the three resources using basic information on the topic etc. (as you normally would), what would the results be in finding the most relevant articles in the three "databases", and how much time would the process take? Then you could decide which is the most relevant and efficient database to use for research.