Large numbers of clinical studies simply replicate findings that have already been confirmed, with notable negative consequences. Caroline Blaine, Klara Brunnhuber and Hans Lund suggest that much of this waste could be averted with a more structured and careful approach to systematic reviews, and propose Evidence-Based Research as a framework for achieving this.

Meta-research (research on research) has shown that many unnecessary studies could have been avoided if a systematic review had been conducted during the planning phase to flag that no new research was needed. Failing to base new scientific studies on earlier results, especially in medical research, exposes participants to unnecessary research. This is not just wasteful; it is unethical and potentially harmful, and it limits funding for important and relevant research.

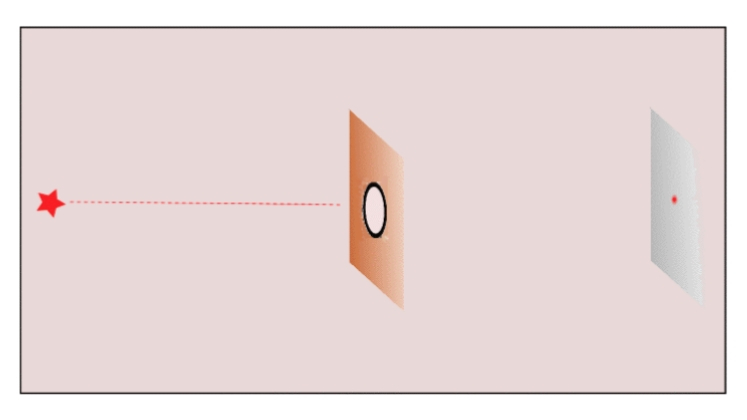

Cumulative meta-analysis, in which studies are added in order of publication date to show the overall result as each new study contributes to the knowledge base, is a research tool that can be used to demonstrate when confirmatory studies are no longer required. These cumulative meta-analyses have over time shown the same picture of waste in many cases. For example:

- In 1992 Lau et al. published a cumulative meta-analysis showing that by 1977 enough studies had been conducted to conclude that intravenous streptokinase preserves left ventricular function in patients with acute myocardial infarction. Nevertheless, from 1977 to 1988 more than 30,000 patients were involved in unnecessary placebo-controlled randomised controlled trials (RCTs) of intravenous streptokinase.

- Fergusson et al. showed in 2005 that by 1994 there were enough studies to conclude that aprotinin diminishes bleeding during cardiac surgery. Still, in the following decade more than 4000 patients were involved in unnecessary RCTs comparing aprotinin with placebo, i.e. at least 2000 patients did not receive a potentially life-saving medication.

- Finally, in 2021 Jia et al. found that since 2008 there have been more than 2000 redundant clinical trials on statins in mainland China, resulting in over 3000 extra major cardiac adverse events and 600 deaths. This is a scale of redundancy that, they argue, points towards “multiple system failures”.
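To make the idea of a cumulative meta-analysis concrete, here is a minimal sketch of how one can be computed. It assumes a simple fixed-effect, inverse-variance model on log odds ratios; the trial data, the `Trial` class and the function names are invented for illustration and are not taken from any of the studies above. Published cumulative meta-analyses often use random-effects models and stricter stopping rules, because re-testing after every new trial inflates the chance of a false positive.

```python
import math
from dataclasses import dataclass


@dataclass
class Trial:
    year: int       # publication year
    log_or: float   # log odds ratio (treatment vs. control)
    se: float       # standard error of the log odds ratio


def cumulative_meta_analysis(trials, z=1.96):
    """Re-pool the evidence after each trial, in order of publication.

    Uses fixed-effect inverse-variance weighting (an assumption of this sketch).
    Returns a list of (year, pooled OR, CI lower bound, CI upper bound).
    """
    results = []
    sum_w = 0.0    # running sum of weights
    sum_wy = 0.0   # running sum of weight * effect
    for t in sorted(trials, key=lambda trial: trial.year):
        w = 1.0 / t.se ** 2
        sum_w += w
        sum_wy += w * t.log_or
        pooled = sum_wy / sum_w
        pooled_se = math.sqrt(1.0 / sum_w)
        lower = pooled - z * pooled_se
        upper = pooled + z * pooled_se
        results.append((t.year, math.exp(pooled), math.exp(lower), math.exp(upper)))
    return results


def first_conclusive_year(results):
    """First year in which the confidence interval excludes an odds ratio of 1."""
    for year, _, lower, upper in results:
        if upper < 1.0 or lower > 1.0:
            return year
    return None


# Hypothetical trials, made up purely for illustration.
trials = [
    Trial(2001, -0.30, 0.40),
    Trial(2003, -0.25, 0.30),
    Trial(2005, -0.35, 0.18),
    Trial(2008, -0.28, 0.15),
    Trial(2011, -0.30, 0.10),
]

results = cumulative_meta_analysis(trials)
for year, pooled_or, lower, upper in results:
    print(f"{year}: OR {pooled_or:.2f} (95% CI {lower:.2f} to {upper:.2f})")
print("Evidence first conclusive in:", first_conclusive_year(results))
```

In this made-up dataset the pooled confidence interval first excludes "no effect" once the 2005 trial is added. Under the sketch's assumptions, the two later placebo-controlled trials only narrow an answer that was already available, which is the pattern the examples above describe.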

Unfortunately, evidence from meta-research shows that a systematic and transparent approach is rarely used in the scientific literature. The examples below all come from clinical health research, often thought to be one of the most transparent and systematic areas of research, and highlight issues at each stage of the process:

1. Designing new studies

A retrospective study evaluated applications for funding to see if a systematic review (SR) was used in the planning and design of new RCTs. Two cohorts of trials were analysed. In the first cohort 42 of 47 trials (89%) referenced a SR, and in the second (37 trials) 100% did. However, in both cohorts fewer than 12% of trials used information from the SR in the design or planning of the new study.

2. Justifying new studies

A study analysing 622 RCTs published in high-impact anaesthesiology journals between 2014 and 2016 found that less than 20% explicitly mentioned a SR as justification for the new study, and 44% did not cite a single SR.

3. Referencing earlier similar trials

Robinson and Goodman, in a seminal work, identified original studies that could have cited between three and 58 previous studies. On average they found that only 21% of earlier similar studies were referred to, and regardless of the number of available references, the median number cited was always 2. Citing only the newest, best, or largest studies cannot be scientific because it is based on strategic considerations rather than the totality of earlier research.

4. Placing new results in the context of existing results from earlier similar trials

In a series of studies, Clarke and Chalmers [1998, 2002, 2007 & 2010] repeatedly showed that RCTs published in the five highest-ranking medical journals in the month of May almost never used a SR to place new results in context.

Evidence-based research (EBR) is the use of prior research in a systematic and transparent way to inform a new study so that it answers research questions that matter in a valid, efficient and accessible manner.

To raise awareness of the issues and to promote an EBR approach, an international group of researchers established the Evidence-Based Research Network (EBRNetwork) in 2014. Building on its work, the 4-year EU-funded COST Action called “EVBRES” is funding activities to raise awareness, promote collaboration, and build capacity around EBR in clinical health research until 2022.

Just promoting the use of systematic reviews is not enough. A 2014 investigation identified 136 new studies which were published after a 2000 SR concluded that a small intravenous dose of lidocaine was the most effective intervention for preventing pain from propofol injection. Of these studies, 73% actually cited the systematic review that had reported no need for further research. The EBR approach therefore provides a framework that emphasises systematic reviews as a generative part of the research process, rather than a simple box-ticking exercise, thereby improving research integrity.

In 1994, Professor Doug Altman expressed the need for less but better research, and in 2009, Chalmers and Glasziou outlined the four stages where waste occurs in the production and reporting of research evidence. The EBR approach addresses many of the issues raised including:

- The need to focus on necessary, relevant clinical questions

- Over 50% of studies being designed without reference to SRs of existing evidence

- The findings of most new research not being interpreted in the context of a systematic assessment of other relevant evidence.

By building on the existing body of evidence during study planning, and presenting results in context, an EBR approach will help:

- Prevent research waste through more relevant, ethical and worthwhile research

- Improve resource allocation

- Reduce the risk and potential harm to study participants from unnecessary research

- Reduce medical reversals

- Restore end user trust in research.

COVID-19 has triggered a huge amount of research, but also a “deluge” of research waste. In the current uncertain times, it is more important than ever to base clinical, policy, and research decisions on the best available evidence. In the longer term, stakeholders (especially clinical researchers) will need to dedicate time and effort to acquiring the knowledge and skills to be evidence-based. In return, Evidence-Based Research not only offers gains in research efficiency, but also limits the potential human costs and negative effects of unnecessary research.

For anyone wanting to find out more about Evidence-Based Research, please see the EVBRES website. You can also read and watch presentations from the 1st EBR Conference, which took place on 16-17 November 2020.

Note: This article gives the views of the authors, and not the position of the Impact of Social Science blog, nor of the London School of Economics. Please review our Comments Policy if you have any concerns on posting a comment below.

Image Credit: Chuttersnap via Unsplash.

I wonder whether the volume of gratuitously repeated research may also reflect on the work of referees of research grants and journal articles.

For completeness it may have been worth reporting that the authors conducted a systematic review before undertaking this research.

Thank you for your interest in our blog. You can find lots more information about EBR and the work we are doing at http://evbres.eu including assessing the impact for funders, journals and ethics committees.