University rankings are imbued with great significance by university staff and leadership teams, and the outcomes of ranking systems can have significant material consequences. Drawing on a curious example from her own institution, Jelena Brankovic argues that taking rankings as proxies for quality or performance in a linear-causal fashion is a fundamentally ill-conceived way of understanding the value of a university, particularly when this view is publicly embraced by none other than scholars themselves.

Earlier this month, QS published its annual World University Rankings by Subject, spurring excitement across academic social media. “Thrilled to be part of the world’s No. 1,” said a faculty member. “Proud alumna and staff member,” wrote another. “So proud to be part of the team. Well done everyone”… And so on. You get the picture.

There’s nothing inherently wrong with people liking their job and enjoying working in a stimulating environment. There is, however, something unsettling about scholars taking a ranking produced by QS, Shanghai, or any other organization as confirmation, or evidence, of how good (or bad, for that matter) they have it compared to everyone else.

One may wonder, do scholars respond to rankings in this way because they are just carried away in the moment? Or because they take rankings seriously? Or is it, perhaps, something else?

To be clear from the start, my intention here is not to criticise rankings. At least not in the way this is usually done. In this sense, this is not a story about rankings’ flawed methodologies or their adverse effects, about how some rankings are produced for making profit, or about how opaque or poorly governed they are. None of that matters here.


What I wish to do, instead, is to draw attention to a highly problematic assumption to which many in academia seem to subscribe: the assumption that there is, or that there could possibly be, a meaningful relationship between a ranking, on the one hand, and what a university is and does in comparison to others, on the other.

I will start by telling a story that involves my own university, and I will conclude with a more general argument about why publicly endorsing rankings does a disservice to academia.

What’s in a rank?

In September 2019, the German magazine Der Spiegel published an article on the then just-released Times Higher Education (THE) World University Rankings. Germany’s status as “the third most represented country in the Top 200,” the article said, was once again confirmed. Another piece of news stood out as “particularly noteworthy,” the article continued: “Bielefeld University jumped from position 250 to 166.”

Although Bielefeld had participated in this particular ranking since its first edition in 2011, it had for the most part been placed somewhere between 201 and 400. Then, in the 2020 edition of the ranking, Bielefeld found itself in the Top 200. The news was well received at home. Bielefeld’s Rector thanked everyone “who has contributed to this splendid outcome.”

However, Bielefeld’s leadership was quite puzzled by the whole thing: they could not put their finger on what exactly they had done to cause this remarkable improvement. They must have done something right, surely, but what? Aiming to get to the bottom of it, they decided to investigate.

It was quite obvious from the start that the “jump” probably had to do with citations. Anyone even remotely familiar with the methodology of this ranking can understand how this would make sense. And in fact, by citations alone, Bielefeld was the 99th university in the world and sixth in Germany.

Our university library confirmed the citations hypothesis. Moreover, when the scores over time were recalculated, it turned out that Bielefeld had improved by more than 120 places in just two ranking cycles. And not only did it “improve” exceptionally, it was also one of the top performers among all universities worldwide in terms of how much it progressed from one year to the next.

Bielefeld, it seemed, was an extreme outlier.

As it turned out, huge leaps in THE rankings had already been linked to a large international collaboration in global health, the Global Burden of Disease study. THE’s method of counting citations is such, another source said, that in some cases it took no more than a single scholar participating in this study to improve a university’s rank, even significantly so, from one year to the next.

We were struck by the finding that ten articles alone brought as much as 20% of Bielefeld’s overall citations in those two years. Each one could be linked to the Global Burden of Disease study. All but one were published in The Lancet and co-signed by hundreds of authors. One of the authors—and one only—came from Bielefeld.

Bielefeld’s rise in the ranking was, our analysis showed, clearly caused by one scholar. This is how meaningful the relation between the “performance” of Bielefeld University—an entire institution—and its rank really was.
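To make the mechanism concrete, here is a minimal sketch contrasting two ways of crediting citations to an institution. It is not THE’s actual method, which this post does not describe: the `Paper` type, the `full_count` and `fractional_count` functions, and all numbers are hypothetical, chosen only so that ten consortium papers make up 20% of a fully counted total, echoing the Bielefeld share.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    """A hypothetical publication record (illustrative fields only)."""
    citations: int
    n_authors: int
    n_local_authors: int  # authors affiliated with the institution being scored


def full_count(papers: list[Paper]) -> float:
    """Credit all citations of any paper that has at least one local author."""
    return sum(p.citations for p in papers if p.n_local_authors > 0)


def fractional_count(papers: list[Paper]) -> float:
    """Credit citations in proportion to the share of local authors."""
    return sum(p.citations * p.n_local_authors / p.n_authors for p in papers)


# Hypothetical portfolio: ten consortium papers (1,000 citations, 500 authors,
# one local author each) plus 2,000 in-house papers (20 citations, 3 local authors).
consortium = [Paper(citations=1000, n_authors=500, n_local_authors=1)] * 10
in_house = [Paper(citations=20, n_authors=3, n_local_authors=3)] * 2000

for name, count in [("full", full_count), ("fractional", fractional_count)]:
    total = count(consortium + in_house)
    share = count(consortium) / total
    print(f"{name}: total={total:,.0f}, consortium share={share:.2%}")
```

With these made-up numbers, full counting attributes 20.00% of a 50,000-citation total to the ten consortium papers, while fractional counting attributes them 0.05% of 40,020. The single local co-author on each paper is the same person in both cases; only the counting rule changes what that person is worth to the institution’s total.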

It is tempting to think that, all things considered, Bielefeld “gamed” this particular ranking—accidentally. It is funny even, especially when one thinks of the inordinate amounts of money some universities put into achieving this kind of result in a ranking.

The logical fallacy

When THE published the ranking, the president of the German Rectors’ Conference said he believed that “Germany’s rise was ‘closely connected’ to the government’s Excellence Initiative.” He certainly wasn’t alone in the effort to explain rankings by resorting to this linear-causal kind of reasoning, as we can see in the same text.


This detail, however, points to what may be the most extraordinary and at the same time the most absurd aspect of it all. Almost intuitively, people tend to explain the position in a ranking by coming up with what may seem like a rational explanation. If the university goes “up,” this must be because it has actually improved. If it goes “down,” it is being punished for underperforming.

Bielefeld’s example challenges the linear-causal thinking about rankings, spectacularly so even. However, in truth, there is nothing exceptional about this story beyond it being a striking example of how arbitrary rankings are.

THE may eventually change its methodology, but changing methodology won’t change the fact that its rankings are artificial zero-sum games. Artificial because they force a strict hierarchy upon universities. Artificial also because it is not realistic that a university can only improve its reputation for performance exclusively at the expense of other universities’ reputations. Finally, artificial because reputation is in and of itself not a scarce resource, but rankings make it look like it is. With this in mind, THE’s rankings are not in any way special, better, or worse than other rankings. They are just a variation on a theme.
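The zero-sum point can be checked with a toy example. The sketch below uses hypothetical universities `A` to `D` with made-up scores and a hypothetical helper `strict_ranks` that turns scores into a strict 1-to-n ranking. Only `D`’s score changes between the two years, yet `B` and `C` each drop a place, and the rank changes sum to zero, because a strict ranking can only reshuffle a fixed set of positions.

```python
def strict_ranks(scores: dict[str, float]) -> dict[str, int]:
    """Map each name to a rank (1 = best) by descending score.

    sorted() is stable even with reverse=True, so tied scores keep their
    input order: the ranking imposes a strict hierarchy even where the
    scores do not.
    """
    ordered = sorted(scores, key=lambda name: scores[name], reverse=True)
    return {name: position for position, name in enumerate(ordered, start=1)}


# Hypothetical scores for two years; only D's score changes.
year_1 = {"A": 90.0, "B": 85.0, "C": 80.0, "D": 75.0}
year_2 = {**year_1, "D": 88.0}

before, after = strict_ranks(year_1), strict_ranks(year_2)
changes = {name: after[name] - before[name] for name in before}  # positive = fell
print(changes)
print(sum(changes.values()))
```

This prints `{'A': 0, 'B': 1, 'C': 1, 'D': -2}` and `0`. The zero sum holds for any pair of strict rankings over the same set of universities, since both assign each of the positions 1 to n exactly once.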

Numbers, calculations, tables and other visual devices, “carefully calibrated” methodologies, and all that are there to convince us that rankings are rooted in logic and quasi-scientific reasoning. And while they may look like works of science, they most definitely are not. However, maintaining the appearance of being factual is crucial for rankings. In this sense, having actual scientists endorse the artificial zero-sum games that rankers produce contributes critically to their legitimacy.

To assume that a rank—in any ranking—could possibly say anything meaningful about the quality of a university relative to other universities, be it as a workplace or as a place to study, is downright absurd. It is, however, precisely this assumption that makes rankings highly consequential, especially when it goes not only unchallenged, but also openly and publicly embraced—by scholars themselves.

The section of this post relating to Bielefeld’s position in the THE rankings appeared in German in a longer form in the Frankfurter Allgemeine Zeitung under the title “So verrückt können Rankings sein” on March 11, 2020.

Note: This review gives the views of the author, and not the position of the LSE Impact Blog, or of the London School of Economics.

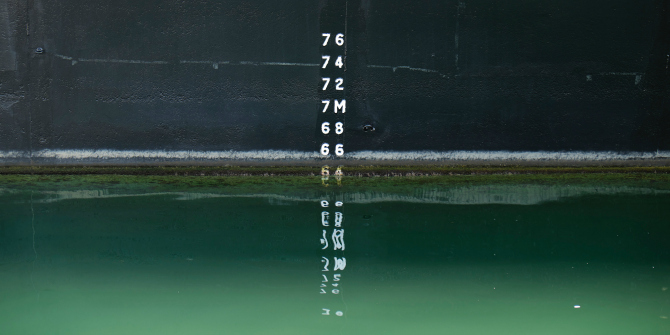

Image Credit: Ian Taylor via Unsplash.

This is one of the best (if not THE best) articles on university rankings I’ve come across. I remember very clearly starting a new job in the UK university sector in 2007 and being utterly perplexed by the rankings. On the one hand, the entire university leadership team seemed in thrall to them and wanted to make analysing the university’s position in them an enormous part of my job (that and gaming the largely spurious input metrics). On the other, my academic colleagues seemed to spend a lot of time criticising (mostly legitimately) the rankings and their methodologies, but only when their university did not achieve the ranking they thought it deserved. I rather naively asked why, if we did not agree with the methodology, we were allowing ourselves to participate at all…

In the UK we are currently in the middle of a so-called ‘Research Excellence Framework’ (REF) exercise, held about every 6-7 years, which is largely metrics-based and in which publication impact plays a large role. The overall score allocated to a research unit or department determines its funding, which totals several billion pounds nationally. Earlier versions of this exercise included moderation by expert panels that corrected for the kind of phenomenon described with the Bielefeld GBD study papers. There is a clear difference between being the 57th author in a list of 250 on a highly cited paper and being the principal author (normally placed last in science papers) on a moderately well-cited paper with three other authors, all from the same research group. Unfortunately, the new metrics-driven approach makes it more difficult for panellists to correct for a possible ‘Bielefeld effect’, thereby leaving the door open to all kinds of distortions and even possible abuse of the system.

Brilliant thinking on the matter. What Jelena discusses here is definitely something scholars discuss privately in the “narrow corridors” of academia, but they like to take advantage of rankings anyway when the opportunity presents itself. There are numerous other examples of a single scholar alone being responsible for a university’s rise or decline in the rankings.

Great article on an important topic. The anti-organic corporatization of universities has been happening for some time now, and I despair at the loss of the ‘organic university’. I attempted, in 2005, to provide a critique of this trend, but I am pleased to see Jelena blow up the institutional ranking process, which was not so well developed in 2005. For some history see: https://www.academia.edu/21482387/Organicism_and_the_Organic_University

Rankings are here to stay and their importance will grow. This discussion reminds me of old debates about Google rank and about gaming the system, purposefully or not, with black-hat SEO. Is anybody not using Google search? There are bad concentration effects at the top, but it is still the main gateway.

Rankings have always existed; their quality and availability just keep increasing. Fifty years ago, the US had the Ivy League, the UK Oxbridge, France les Grandes Écoles, and beyond those there were more detailed word-of-mouth rankings of many kinds.

Methodology will improve and fix outliers, for example by dividing citations by the number of authors or even weighting them by the author’s rank. Additional criteria will be added, such as diversity, student progression, etc.

It is better to spend time educating people on how to use rankings with caution than to try to make them irrelevant; that is just not going to happen.

Criticizing rankings is emotionally and intellectually satisfying, even accurate, but will have no impact whatsoever on their popularity.

I agree with Pierre D. The author rightfully criticizes how rankings can be misused, but does not provide a solution to the problem. The problem is this: as nobody will disagree that some universities are “better” than others, there is a need to measure and visualize this. Prospective students and employees have a right to know what they are signing up for. Merely acknowledging that some universities are objectively “better” than others already amounts to making a ranking. Put differently: it would be downright absurd if what Dr Brankovic intends to say here is that all universities are equal by definition, and that which university you should choose is purely a matter of personal preference.

So, if we are making rankings anyway, we had better make them as scientifically sound as possible. And this brings us to the problem with ANY data processing task. Data processing means trying to extract meaningful information from data. This is tricky business, as how meaningful the extracted information is depends on the quality of the algorithms used, the quality of the data, the completeness of the data, statistical fluctuations in the data, you name it.

Producing university rankings is a scientific data processing procedure like any other. And like any other scientific procedure, it can (and definitely should) always be improved, and there is always the danger of overinterpreting or misinterpreting the results. I won’t deny that this happens a lot. But to conclude that rankings are meaningless because they are often misinterpreted is scientifically incorrect. Nobody will disagree that, for instance, Stanford is overall a better university than any of those ranked 1000+ in THE. Yes, maybe the University of WhatEver is better than Stanford for one particular student or academic and field of study. But there are exceptions to every statistical comparison. That doesn’t make such comparisons completely invalid.

I agree with Frank in general, but there is a “but”, or even a few.

Misinterpretation of rankings is one side of the problem; there are also others, such as data manipulation, including violations of academic integrity by universities. And as in online chess, there is no way to detect all the cheaters and prove it. Yes, in your example of Stanford vs. a 1001+ university, nothing would help the second university win, but do we need the ranking to figure out that Stanford is better than the University of WhatEver?

I’m for the ranking, but at its current stage it is only a supporting tool with many limitations, while the majority use it as the main tool. IMHO, that is what Dr Brankovic’s post is about, not that all universities are equal.

Thanks for the article.

“One of the authors—and one only—came from Bielefeld”.

And it is not an extreme example. Sometimes none of the authors comes from the university that benefited from a research article in the rankings. I mean cases where an author lists the affiliation of a university that he visited once, or has never even visited. And this is becoming an increasingly popular practice.

Good point, especially as affiliations are not always accurate and are open to gaming, e.g. https://blogs.lse.ac.uk/impactofsocialsciences/2019/09/26/whats-in-a-name-how-false-author-affiliations-are-damaging-academic-research/

Good article. The analysis, however, misses the connection between HE and state policy. Universities with ‘low performance’ are castigated by Ministries of Education in the accreditation process and the ‘quality’ measurements. I agree with the author that as academics and researchers we have a great responsibility, not as observers but as proactive actors.

Excellent article. And one need not go much further to conclude that the whole idea of ranking is flawed, by the simple fact that universities are conceived to be different from one another. Not to mention that money is the biggest bias in any ranking.

About five or six years ago I was analysing my university’s performance in one of the international league tables, I forget which. I was puzzled to see that one department, not internally regarded as one of our best, seemed to be the most cited in the world in its subject area. I duly investigated.

It turned out that this was due to just one highly cited publication: a classic textbook (not even a piece of research), originally authored in the 1990s, which had been reissued as an e-book recently enough to be picked up by the algorithm as a “new publication”. Not only was this absurd in itself, but the author was actually at an entirely different university when the textbook was written, and had retired several years before the league table was issued. And for that, the department was accorded the status of “most cited in the world”.

University ranking, as a resource for measuring educational quality, is (or should be) grounded in the quality of a university’s contributions to research and teaching publications, as well as in its educational competencies at the local, national, and international levels. It should not be seen as a matter of personal ego, which we as humans sometimes try to use and push in decision-making, in judgements that are sometimes misguided. It is about how education should be considered in this twenty-first century, extending its reach to the global and the universal. Such is the character of a university, expressed through good management of its educational programmes and the use of the research paradigm of the alma mater being referenced.

Wait till you go to Asia, where rankings are not only taken seriously, they get to define policies and investments. Ministries of education insist on ranking improvements, the public follows them avidly in the newspapers, and losers get no love. The problem is that this obsession with rankings has taken what was once a promising set of universities and made them focus on improving their rankings through what tend to be gimmicks: 1) hiring highly cited researchers in some part-time capacity, 2) inflating the number of students, 3) inflating the number of faculty by redefining what it means to be a professor and who qualifies, 4) dumping fields where citations are naturally low in favor of fields known to attract large numbers of citations, and the like. You get the idea. So, it is not absurd; it is damaging.

Rankings are, and always have been, primarily a means of control. Control of power or (usually the same thing) control of money. They are mostly a waste of everyone’s time, but no university can really dare to say so (and the bean counters now in charge at universities like the overall “business model”, which is personally lucrative), and academics are forced to go along with it or suffer the consequences. By submitting to the ludicrous and complex procedures which are claimed to rank the worth of a university and individual departments, the whole educational system has been degraded.

See https://cacm.acm.org/magazines/2016/9/206263-academic-rankings-considered-harmful/fulltext

Moshe Vardi

University rankings are produced by business organizations with an agenda. Only a true expert can tell a student which university is best for him. We in the USA are fortunate to have three universities, Rockefeller (tiny and specialized, mainly in the biological sciences; formerly the Rockefeller Institute), Caltech (in engineering and the biological sciences), and Cooper Union (in engineering only), which are in a class apart from all global universities. If we use genuine Nobel Prizes from the original source as a benchmark, both Rockefeller (extremely tiny, including Max Theiler) and Caltech (tiny) have generated 20 Nobel Prizes each: https://www.nobelprize.org/prizes/lists/nobel-laureates-and-research-affiliations/ The highest number of Nobel Prizes has been won by the University of California.

There are those academics who add value to their institutions and there are those academics who need the ranking of their institutions and there are those in between. University rankings are gap fillers for the professional uncertainty that some feel.

What about a ranking of the rankings? Which ranking is best, and why?