The contributions of science and research to society are typically made intelligible by measuring direct individual contributions, such as number of journal articles published, journal impact factor, or grant funding acquired. Leo Tiokhin, Karthik Panchanathan, Paul Smaldino and Daniel Lakens argue that this focus on direct individual contributions obscures the significant indirect contributions that scientists make to research as a collective undertaking. In the first of two posts they outline why indirect contributions should be given more weight in research assessment.

The beauty and the tragedy of the modern world is that it eliminates many situations that require people to demonstrate a commitment to the collective good.

Imagine two scientists, Postdoc 1 and Postdoc 2, who have just obtained their PhDs and are entering the job market.

Postdoc 1 has four empirical papers. They are first author on two, including a publication in a prominent journal. Postdoc 1 has 75 citations, with two papers cited 25 times each: not bad for a newly minted PhD in their field. They have also mentored five undergraduate students and have obtained a modest research grant.

Postdoc 2 has seven empirical papers. They are first author on five, including three publications in prominent journals. Postdoc 2 has over 200 citations, with four papers cited more than 40 times each: impressive for a newly minted PhD in their field. They have also mentored five undergraduate students through their theses and have obtained a major research grant.

Suppose you were a member of a search committee, and Postdoc 1 and Postdoc 2 were in the running for your department’s final interview spot. Which candidate would you choose?

Postdoc 2, right? Of course, you know that focusing on proxy measures like publication count, citations, and funding can distort science by incentivizing less rigorous research. But it really does seem like Postdoc 2 is doing better work, at a higher rate of productivity, and with more potential for external support. If you had to select the best individual scientist, Postdoc 2 would seem like the obvious choice.

Is choosing the best scientist that simple?

Now imagine that you talk to colleagues and learn a bit more about each candidate.

You learn that Postdoc 2 is sometimes negligent: they don’t carefully document their experimental procedures, don’t check their code for bugs, and don’t make their materials available and accessible. You also learn that Postdoc 2 engages in questionable research practices to increase their chances of getting statistically significant findings (incentives, right?). As a consequence, some of Postdoc 2’s publications probably contain false positives, which will waste the time of scientists who try to build on their work. Postdoc 2 is so motivated to be successful that they neglect many prosocial aspects of academic work: they rarely perform departmental service, rarely help colleagues who ask for assistance, and write lazy peer reviews. To top it off, Postdoc 2 is a terrible mentor: colleagues have seen Postdoc 2 exploiting students, stealing their ideas without giving proper credit, and withdrawing mentorship from students who were struggling. Postdoc 2 may be productive, but they are a crappy colleague.

In contrast, you learn that Postdoc 1 is exceptionally diligent: they carefully document their experimental procedures, double-check their code for bugs, and make their materials readily accessible to others. Postdoc 1 works hard to avoid questionable research practices and conducts their research methodically. As a consequence, their publications are more likely to contain reliable findings, contributing to the gradual accumulation of scientific knowledge. Postdoc 1 is committed to helping fellow community members: they serve on departmental committees, help colleagues who ask for help, and are a thoughtful, constructive peer reviewer. To top it off, Postdoc 1 is a dedicated mentor: they devote personal time to helping students, credit students for their contributions, and step up when students are struggling. Sure, Postdoc 1 may not be the most ‘productive’ individual, but they are an ideal colleague.

Knowing all of this, would you reconsider your choice? More generally, is it possible to separate scientists’ intellectual contributions from their effects on the productivity and well-being of colleagues and the broader scientific community?

Typical evaluation criteria ignore indirect effects

Of course, Postdoc 1 and 2 are caricatures—real differences between candidates are rarely so clear cut.

Yet, their tale is useful because it illustrates two pathways by which scientists contribute to science: directly and indirectly.

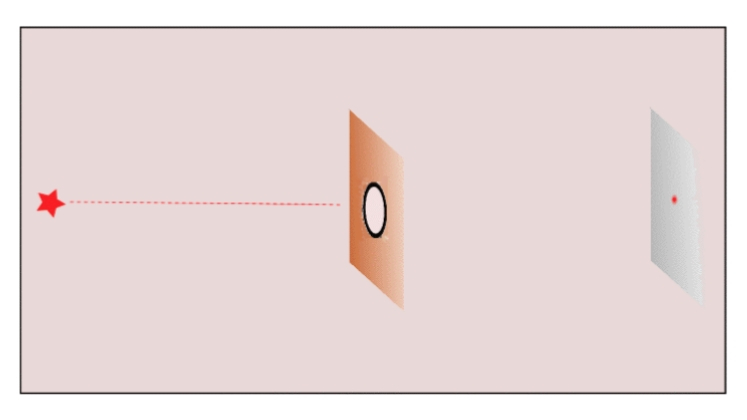

A ‘direct’ effect is one in which the causal path goes straight from a scientist’s efforts to a measurable scientific outcome. An ‘indirect’ effect is one in which the causal path from a scientist’s efforts to a measurable scientific outcome goes through other scientists. In other words, indirect contributions are mediated by their effects on other scientists’ direct contributions. The following image (technically a Directed Acyclic Graph DAG) illustrates these two pathways:

Any scientist can contribute via these two pathways. Thus, without accounting for both direct and indirect contributions, it is impossible to determine a scientist’s total contribution to any scientific outcome.

This should be concerning, given that many indirect effects are left out of standard research metrics. However, maybe this is just a minor issue. After all, no metric can capture all relevant factors, so how harmful is it really if we only measure direct effects? Are there tangible repercussions for the efficiency of science, the well-being of scientists, the spread of good scientific practices, or other dimensions that truly matter?

We see three serious repercussions of prioritizing direct effects while ignoring indirect ones.

Ignoring indirect effects fails to reward scientists who help others and fails to penalize scientists who harm others

First, consider an extreme case in which a scientist generates little direct output, such as producing no first-authored publications. Given current evaluation criteria, such a scientist would struggle to find a research position, get grants, and receive awards. Would this be justified?

Possibly.

The problem is that, if the scientist has large positive indirect effects, then their total contribution may be large enough to warrant being recognized and rewarded, despite the fact that they produce little work themselves. You may know such scientists—they are not exceptionally productive, but they lift up their department and are a joy to have as colleagues. By failing to recognize their indirect contributions, current evaluation criteria don’t give them the recognition that they deserve.

Now consider a scientist who generates substantial direct output, such as having many first-authored publications. Should this scientist be hired, get grants, and receive awards?

Possibly.

If the scientist also has large positive indirect effects, then focusing on direct output would lead to an underestimate of their total contribution. However, if the scientist is productive in part by imposing negative indirect effects on others, then they achieve personal success at others’ expense. In that case, ignoring indirect effects would lead to an overestimate of their total contribution.

Sure, such a scientist might still be worth hiring: a superstar may be so prolific that they are worth keeping around, despite the cost they impose on others. However, it is impossible to know without accounting for indirect effects. Many of us also know of such scientists—they may be productive, but are crappy colleagues and may even be exploitative. By failing to recognize that these individuals indirectly harm science, current evaluation criteria give them more recognition than they deserve.

Ignoring indirect effects increases the intensity of competition between individual scientists

Ignoring indirect effects increases the intensity of individual-level competition by reducing the “stake” that scientists have in the outcomes of other scientists. In biology, this is well established: evolutionary mechanisms that cause individuals to have a stake in each other’s outcomes (such as relatedness) result in a “shared fate,” often reducing individual-level competition and promoting cooperation.

Of course, competition can be useful (promoting innovation, increasing effort, and incentivizing individuals to tackle diverse problems). The problem is that individual-level competition incentivizes scientists to engage only in behaviours that benefit themselves, even though individually beneficial behaviours are a mere subset of the behaviours that benefit science as a whole. So, understandably, scientists end up engaging only rarely in many collectively beneficial behaviours, such as sharing code and well-documented datasets, doing replication research, conducting rigorous peer reviews, and criticizing the work of others.

Competition also incentivizes scientists to harm others in situations where scientists benefit from the failures of their competitors (for example, two labs competing for priority of discovery or two scientists competing for the same grant). In focus-group discussions with scientists at major research universities, Anderson et al. document unnerving examples of what scientists do to succeed in intensely competitive contexts, including strategically withholding and misreporting research findings, delaying peer review of competitors’ papers to “beat them to the punch,” and lying to and exploiting PhD students.

It’s no surprise that competition and the pursuit of self-interest can make everyone worse off, and science is no exception: selecting scientists based on individual productivity, while ignoring indirect effects, generates intense individual-level competition, exacerbating the disconnect between what scientists must do to have successful careers and what is best for science and the well-being of scientists.

Ignoring indirect effects reduces the incentive to specialize in unique skills that complement others

A focus on direct, individual contributions using a narrow set of metrics, such as first-authored papers, creates an additional problem: scientists have fewer incentives to specialize in roles that are not rewarded by the prevailing regime, even if these roles are essential for science.

In psychology, for example, scientists are incentivized to become ‘content specialists’ and develop a unique, identifying brand (“Pat studies evolved fear predispositions; Kim studies working-memory constraints”). By contrast, there is little incentive to become a ‘methodological specialist’ and develop a skill set that complements the skills of others (“Pat is an expert statistician; Kim is a dedicated peer reviewer”). In an empirically dominated discipline like psychology, more so than in disciplines like physics or economics, a scientist would struggle to find a job as a dedicated theorist. So instead of specializing in such complementary roles, scientists spread themselves thin across the competencies that are rewarded. In this way, ignoring indirect effects may hinder the efficient division of labour that is crucial for “large team” science.

Where do we go from here?

Ignoring indirect effects has serious repercussions for science. How can we make progress towards addressing these problems? Are there ways to reduce the disconnect between scientists’ individual and collective interests? In the next post, we draw on insights from non-academic (and even non-human) fields, and outline how current ways of recognizing and rewarding scientists could be modified to produce better outcomes for science and scientists.

A version of this post first appeared as “Why indirect contributions matter for science and scientists” on Medium.

Note: This article gives the views of the authors, and not the position of the LSE Impact Blog, nor of the London School of Economics.

Image Credit: Feature image by Fakurian Design, via Unsplash. DAG adapted from the authors’ own illustration.