Wikipedia has become a focal point in the way information is accessed and communicated within modern societies. In this post, Zachary J. McDowell and Matthew A. Vetter discuss the principles that have enabled Wikipedia to assume this position, and how these governing principles, formulated early in the Wikipedia project, have at the same time embedded particular forms of knowledge production and biases within the platform.

If anything on the Internet needs no introduction, it would be Wikipedia. Now twenty years old, Wikipedia is a veritable geriatric in the grand scheme of Internet history. It has gone from being the butt of jokes to being one of the most valued collections of information in the world. You would be hard pressed to find someone who hasn’t heard of Wikipedia, and nearly as hard pressed to find someone who hasn’t used it within the last month, week, or even day. Despite its flaws, it remains the last best place on the Internet. However, if there is one thing we have learned in our shared 20 years of experience with Wikipedia, it is that it is also one of the most widely misunderstood places on the Internet. This is something we have attempted to shed light on in our recent book, Wikipedia and the Representation of Reality, where we analyse the formal policies of the site to explore the often complex disconnect between policy and actual editorial practice.

For starters, Wikipedia is not just an encyclopedia; it is a community, a movement really, with a grand mission: to make “the sum of all human knowledge available to every person in the world.” But even people who grasp this often don’t understand how it happens, including who writes Wikipedia, why they do so, and what governs it.

Wikipedia has no “firm rules” (this is specified in the Five Pillars), but it does have quite a few policies and guidelines to push this mission forward. These help to ensure that the encyclopedia remains neutral and reliable, which in turn helps to combat disinformation on the encyclopedia (and consequently on the Internet at large, as Wikipedia is one of the largest and most accessed repositories of information). There are numerous policies and guidelines, but we found the most significant to be Reliability, Verifiability, Neutral Point of View (NPOV) and Notability.

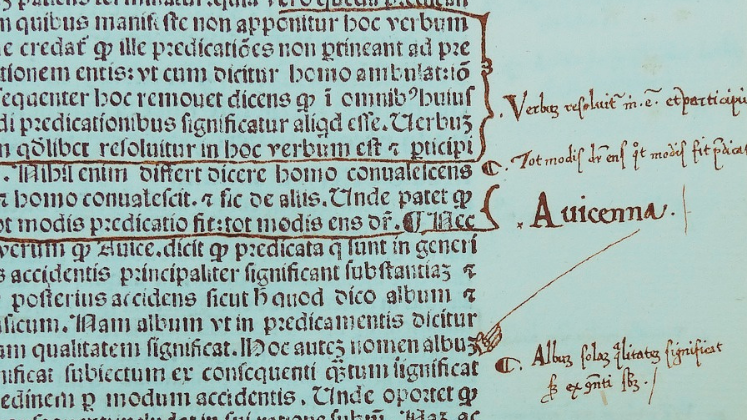

Reliability requires that content be based on reliable, published secondary sources, while Verifiability requires that statements on Wikipedia be backed by citations. Together, these policies define how Wikipedia draws on the authority of reliable secondary sources (instead of appealing to “truth”) to construct its own authoritative voice. As far as “voice” goes, NPOV governs both the language of Wikipedia (descriptive rather than persuasive) and the representation of information within articles. Because information should come from secondary sources, articles must represent what has been published and give each viewpoint only as much space as it receives in reliable sources. In effect, this policy protects articles against over-representation of fringe ideas such as climate change denial. Finally, Notability governs “what counts” for inclusion as a Wikipedia article: a topic warrants its own page only once multiple reliable sources have covered it.

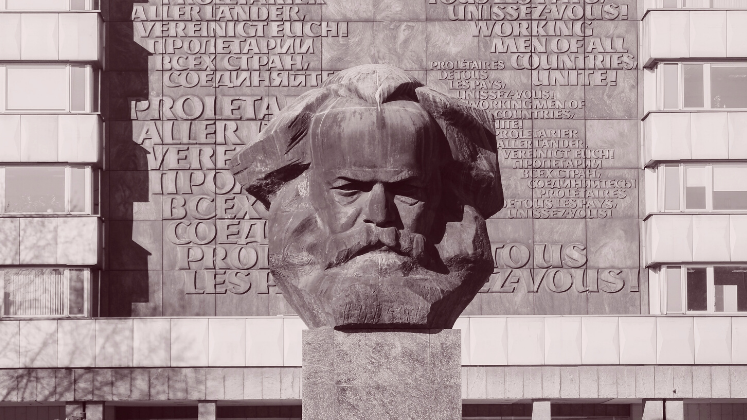

Despite Wikipedia being “the encyclopedia anyone can edit,” its policies are part of a larger history of knowledge production and, in particular, follow the larger (Western) project of encyclopedias in general. Wikipedia’s information is designed to always remain a representation of what is published in secondary sources. This makes it reliable insofar as it represents secondary information, but it also means that it can suffer from the same systemic biases, often in compounded form, that exist both historically and currently in the larger information ecosystem. As Katherine Maher, former CEO of the Wikimedia Foundation, has acknowledged, Wikipedia is a mirror of society’s biases, and nowhere are these biases more visible than in the much-discussed gender gap. Fewer than 20% of Wikipedia editors identify as women. This leads to all kinds of issues related to content gaps, policy biases, community climate and harassment, which we discuss in detail in our book. Notably, these issues broke into the mainstream in the case of Donna Strickland (the first woman to win the Nobel Prize in Physics in 55 years, and only the third ever, after Marie Curie and Maria Goeppert Mayer), who not only did not have a Wikipedia page until about 90 minutes after she won, but, as later emerged, had a previous draft of her article rejected for not meeting notability standards. This and other issues are part of a larger systemic bias (e.g. the lack of reporting on women in science), but they are both reflected in and exacerbated by low levels of diversity within Wikipedia itself.

However, such biases can also be amplified by Wikipedia because of the history of the community itself, especially its origins in a fairly homogeneous and narrow (male) demographic. One of the earliest guidelines on Wikipedia, “Be Bold” (developed in 2001), is especially illustrative of this issue. The “Be Bold” directive can be seen as encouraging, even necessary, advice for new Wikipedia editors. However, as a social norm inscribed by early Wikipedia editors and founders, Larry Sanger in particular, it can and should also be read as intrinsically prohibitive and gendered. Newcomers to Wikipedia, particularly those with marginalised identities, may choose to be bold and still have their edits questioned or reverted. They may choose to be bold and face multiple types of harassment. In short, as minorities in a predominantly male community, their boldness does not necessarily translate into fair and equitable treatment by other editors.

If Wikipedia is going to be truly representative of the world’s knowledge, and indeed to shape the ways in which reality is represented on the Internet (and, by extension, for the world), the community needs new editors who bring more diverse experiences and identities. We also need to be critical of (and even look for alternatives to) early mantras like “Be Bold,” developed during a very different time in the encyclopedia’s history. Unlike most of the Internet, Wikipedia has outlasted many other organisations from the same era and, like all organisations, must continue to evolve and grow, and must identify and come to terms with the preconceptions that shaped its trajectory.

The policies and guidelines that help construct its authority, the same ones that ensure its continuing reliability, can also be divisive and used to exclude important participants and knowledge. As any policy analyst understands, unequal and biased implementation results in inequity and injustice. As in any policy analysis, identifying these inequities and injustices can help to realign and reformulate policies and guidelines to better achieve their stated goals. While we celebrate Wikipedia’s project overall, we never shy away from exploring its many problems and challenges, and we hope that others will take the same approach of loving (and participatory) critique.

Note: This article gives the views of the authors, and not the position of the LSE Impact Blog, nor of the London School of Economics. Please review our comments policy if you have any concerns about posting a comment below.

Image Credit: LSE Impact Blog.

Although Wikipedia has helped democratize knowledge and given a voice to everybody (the good and the bad), it also suffers from a Matthew effect, as people from the Global South are not well represented. That comes from the fact that biases are still there despite the openness Wikipedia promotes.

Absolutely true. We focus here specifically on the representation of women in science, for example, but the biases of Wikipedia are the world’s biases magnified by the editors’ biases. We cover this more in the book. That being said, I know that the Foundation is working hard on increasing representation, inclusion, and participation in the Global South. Personally, I’ve done a lot of work in Ecuador to get people to write about Indigenous knowledge and history.

Almost anything relating to politics on Wikipedia is so often biased to the left that it’s unusable. In an age where formerly trustworthy mainstream news outlets are increasingly resorting to distortion and disinformation for narrative control, references to them are in many cases not reliable, yet Wikipedia doesn’t seem to mind. Some people have realized they have the power to influence popular belief with their version of reality by submitting or revising entries. The problem is that the people inclined to do that are all indoctrinated with the academic, big tech, far-left ideology that’s been trending in recent years.

How can Wikipedia be “one of the most widely misunderstood places on the Internet” and yet still somehow be “one of the most valued collections of information in the world”? Have you not simply made the mistake many commentators seem to make, and just assumed that because Wikipedia is widely used, people must trust it? Does it not make more sense that Wikipedia is popular simply because it is free and easy to access, and that it has always specifically said itself that you cannot trust Wikipedia and need to check the (typically absent) references provided (while still calling itself an encyclopedia rather than a mere structured link farm)?

Thanks for this informative piece.

The demographics of the authors seem to reflect the biased demographics of Wikipedia editors; presumably this irony was mentioned in the book.

Wikipedia needs to decide what its next steps are. It can’t just keep on churning out information without managing the older stuff on there.