Generative AI applications promise efficiency and can benefit the peer review process. But given their shortcomings and our limited knowledge of their inner workings, Mohammad Hosseini and Serge P.J.M. Horbach argue they should not be used independently or indiscriminately across all settings. Focusing on recent developments, they suggest the grant peer review process is among the contexts in which generative AI should be used very carefully, if at all.

In the ever-evolving landscape of academic research and scholarly communication, the advent of generative AI and large language models (LLMs) like OpenAI’s ChatGPT has sparked attention, praise and criticism. The use of generative AI for various academic tasks has been discussed at length, including on this blog (e.g. in education). Among the potential use cases, having generative AI support reviewers and editors in the peer review process seems like a promising option. The peer review system has long faced various challenges, including biased and/or unconstructive reviews, a paucity of expert reviewers and the time-consuming nature of the endeavour.

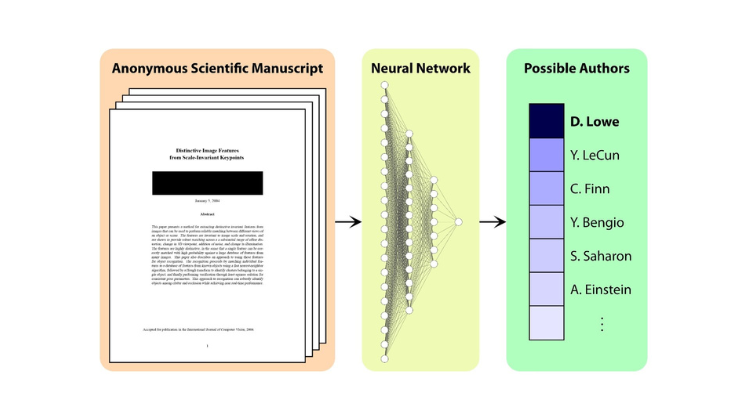

Although using generative AI might mitigate some of these challenges, as with many discussions about integrating new technologies into existing workflows, there are also several legitimate concerns. We recently examined the use of LLMs against the backdrop of five critical themes within the peer review context: the roles of reviewers and editors, the quality and functions of peer reviews, issues of reproducibility, and the broader social and epistemic implications of the peer review process. We concluded that generative AI has the potential to reshape the roles of both peer reviewers and editors, streamlining the process and potentially alleviating issues related to reviewer shortages. However, this potential transformation is not without complexity.

Assisting reviews, but not an independent reviewer

In their current form, generative AI applications are unable to perform peer review independently (i.e., without human supervision), because they still make too many mistakes and their inner workings are unknown and rapidly changing, resulting in unpredictable outcomes. However, generative AI can assist actors in the peer review process in other ways, for example by helping reviewers improve their initial notes to become more constructive and respectful. In addition, generative AI can enable scholars not writing in their native language to contribute to the review process in other languages (e.g., English), or help editors in writing decision letters based on a set of review reports. These use cases could help to broaden the reviewer pool and make the process more efficient and equitable.

Confidentiality, bias and robustness

Despite these potential benefits, and in addition to the limited capacity of generative AI to perform independent review, there are major concerns related to the use of LLMs in review contexts. The first relates to the way in which inputted data are used by the developers of generative AI tools. Especially when submitting data sets, potentially containing personal or sensitive data, the way in which tools’ developers use the inputted content should be transparent, which it currently is not. Second, generative AI tools run the risk of exacerbating some of the existing biases in peer review, as they reproduce content and biases contained in their training data. Third, since these tools evolve rapidly and their output depends heavily on the prompt given (even minor changes can have a great impact on the generated content), their output is not always reproducible. This raises questions about the robustness of reviews generated with AI assistance, and lends credibility to the view that generative AI could only assist in improving the formatting, tone, grammar and readability of reviews that have been written by human reviewers.

Outsourcing the social element of peer review

Another concern relates to the fact that peer review is an inherently social process. Indeed, rather than a mechanistically objective gatekeeping mechanism, peer review is based on interactions between peers about what it means to do good science. Therefore, the process is an important means to discuss and negotiate community norms about what questions should be addressed, what methods are appropriate or acceptable, what ways of communication are most suitable, and many more. Peer review is also a fundamental constitutive component of research integrity and ethical norms. In a recent study, we found that, when it comes to research integrity norms, researchers most prominently value the opinion of their epistemic peers, i.e. those that publish in the same journals or attend the same conferences, rather than, for example, other colleagues working at the same institute. Peer review processes are a prominent site where experts ‘meet’ and where discussions about these topics, implicitly or explicitly, unfold. Outsourcing these processes to automated tools might impoverish these discussions and have wider unforeseen consequences.

Recent developments

In the months since our article was published, several developments have changed the landscape of generative AI and affected its use for academic peer review purposes. Among these are the decisions of some funders, such as the National Institutes of Health (NIH) [Notice number NOT-OD-23-149] and the Australian Research Council [Policy on Use of Generative Artificial Intelligence in the ARC’s grants programs], to ban the use of generative AI in their grant review and assessment processes.

These funders are primarily concerned about confidentiality and hallucination, and rightly so. Grant review is a different ball game to journal review: the former shapes research agendas and access to financial resources, while the latter primarily deals with reports of studies that have already been conducted and are sometimes already published as preprints. In terms of confidentiality, using generative AI to review grant applications might give away novel ideas and solutions. With regard to grants especially, it is important to note that having access to new ideas as early as possible (even before they are funded) could provide major advantages. Funders have a responsibility to protect researchers and their ideas from being scooped, and limiting the use of generative AI in their review processes aligns with this responsibility. Regarding hallucination, generative AI models still make mistakes even at the level of basic facts. Therefore, using them to review grants and subsequently distribute funds could seriously damage the integrity of funders’ workflows and compromise the legitimacy of funding decisions. Furthermore, grant applications sometimes contain detailed information about each member of the project, whose privacy might be compromised if this information is shared with third parties. This is also a main concern when generative AI is used to review manuscripts or data sets, which might contain personal or sensitive information about research participants or technologies.

Applications of generative AI are still in the early phases of development and could, in the near future, benefit the peer review process in many ways. That said, given the limitations of this technology, small-scale experiments and phased adoption should be encouraged. We should also be ready to pull the plug when needed or in cases where the risks outweigh the benefits.

While we support the ban of generative AI applications in some contexts, we also have concerns about this strategy in the long run. Apart from the complicated question of how to enforce such a ban and monitor compliance, one always needs to balance the concerns about generative AI against the efficiency gains it offers, which could free up financial resources (in the case of funders, to fund additional projects). Moving forward, we recommend that different user groups in academia frequently revisit and revise their policies on using generative AI applications based on the circumstances, and adopt risk-mitigating measures that fit their specific context.

This post draws on the authors’ article, Fighting reviewer fatigue or amplifying bias? Considerations and recommendations for use of ChatGPT and other large language models in scholarly peer review, published in Research Integrity and Peer Review.

The content generated on this blog is for information purposes only. This Article gives the views and opinions of the authors and does not reflect the views and opinions of the Impact of Social Science blog (the blog), nor of the London School of Economics and Political Science.

Image Credit: Kristaps Ungurs via Unsplash.