Every now and again a paper is published on the number of errors made in academic articles. These papers document the frequency of conceptual errors, factual errors, errors in abstracts, errors in quotations, and errors in reference lists. James Hartley reports that the data are alarming, but suggests a possible way of reducing such errors. Perhaps in future there might be a single computer program that matches references in the text with correct (pre-stored) references as one writes the text.

Different kinds of error

The highest estimates of error rates come from studies of the accuracy of citations in journal articles, where they range from 25% to 50%. The paper by Luo et al (2014) provides a typical example from the medical literature. The main errors in citations appear to be in authors’ names (and initials), titles, page numbers, and the dates and places of publication.

The estimated percentage of errors in quotations has varied from approximately 10% to 40% in over a dozen studies (e.g., Buijze et al; Luo et al; Davies; Wager & Middleton), with a median of about 20%. Such quotations may be well known or bowdlerised (e.g., ‘To write or not to write – that is the question.’). More often, though, authors are quoting what was said by a previous author (e.g., Author (1960) said that ‘It was unlikely that such results could be obtained without error’ (p. 64)). Whatever the case, it seems that writers often rely on memory and quote without checking (or even citing) original sources. Some authors argue that it is essential to include the page number(s) for any quoted text so that readers can locate the passage and check its accuracy.

Rejoinders to publications, and possibly book reviews, are likely to point out errors in the original texts. Giles (2005), for example, compared errors in Wikipedia and Encyclopaedia Britannica. Wager and Williams (2011) reported that retractions in medical (and other) journals have increased sharply since 1980, but not all of these retractions are due to errors: an increasing number are due to research misconduct.

Errors in abstracts are somewhat different. Here we have to compare what is said in the abstract with what follows in the text, and there are sins of both omission and commission. ‘Informative’ abstracts belie their name by not telling the reader at all about what was found in a study, only about what was done. ‘Structured’ abstracts make it easier to look for errors because each section of the abstract corresponds to a section of the paper (Introduction, Aims, Methods, Results and Discussion). It is probable that most errors of omission and commission occur in traditional abstracts. Some of these might be slips (e.g., p<.001 rather than p<.01); some might be deliberate (e.g., leaving out crucial information, such as that there were only 4 participants in the study).

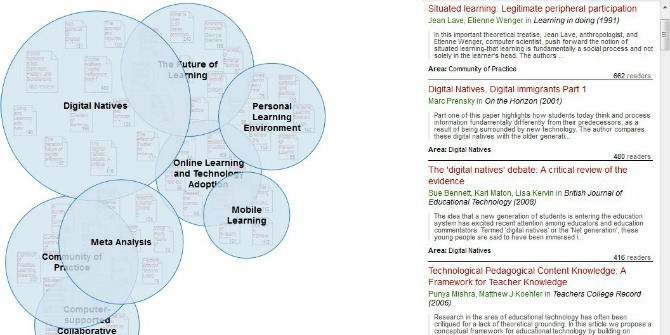

Table 1 shows that most studies of errors can be found in the medical literature. But there are also studies in the social and environmental sciences. It is likely that there are articles on errors of facts and interpretations in arts journals, but since I do not read this literature I have not come across them. (Does this count as an error of omission?)

Table 1. Recent papers on error rates in different fields of enquiry

| Field | Studies |
|---|---|
| Chemistry | Seibers (2001) |
| Environmental Science | Lopresti (2010) |
| Geology | Donovan (2008) |
| Library and Information Science | Davies (2012) |
| Medicine | Seibers (2001); Pitkin, Branagan and Burmeister (2000); Gehanno et al (2005); Mertens & Baethge (2011); Buijze et al; Luo et al (2014) |
| Pharmacy | Ward, Kendrach & Price (2004) |
| Psychology | Hartley (2000); Harris et al (2002) |

Errors in dates of publication, journal volumes, titles and page numbers can, individually or in combination, prevent papers from being retrieved from electronic systems. Lopresti (2010) found that citations in electronic links had fewer errors (20%) than conventional in-text references (25%), but these rates are still high.

Both Mertens & Baethge (2011) and Davies (2012) note that, to date, no one has reported any relationship between error rates and journal impact factors.

What can be done to reduce errors?

So far, the reduction of errors has been left to:

- Editors exhorting authors to check their texts carefully.

- Editors exhorting reviewers to check the details when refereeing.

- Editors changing the rubrics for submitting papers to academic journals.

A study by Pitkin, Branagan and Burmeister (2000) did indicate that changes in the rubrics could be effective. In their study of 100 abstracts in the Journal of the American Medical Association, the authors found 26 deficient abstracts in the pre-intervention period and 10 in the post-intervention period. But this widely cited paper is the only study of changing rubrics that I have been able to find.

Image credit: Brainsnorkel (Flickr, CC BY-NC-SA)

As a reviewer, I find exhortations from editors to check for errors irritating. I assume (obviously incorrectly) that authors have always checked their facts before submission. I thus tend to look over the reference lists for omissions, changes in format and so on, but I do not check in detail the accuracy of the information provided in the article. That, for me, is the author’s (or authors’) job.

Checking the details, however, is sometimes not as easy as it seems, as I have found with this blog! Indeed, I have been surprised by how difficult it can be to check for errors, and I now feel a little humbler than I did when I began working on it.

Future prospects

Perhaps what is needed is a new approach. It should not be beyond the wit of man (or woman) to cross-check the references being cited against the actual references stored on computer databases. Perhaps in future there might be a single computer program that matches references in the text with correct (pre-stored) references as one writes the text. Such a program might also encourage the adoption of a single format for presenting references.
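To make the idea of matching references against pre-stored records a little more concrete, here is a minimal Python sketch. It is not an existing tool: it assumes a hypothetical, hand-built database (`DATABASE`) of correct records keyed by a citation key the writer chooses, and it compares each field of a reference as typed against the stored record, ignoring differences in case and spacing. The `Reference` fields and the names `check_reference` and `check_citation` are illustrative assumptions, and the sample record is the placeholder ‘Author (1960)’ from the discussion of quotations, not a real publication.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Reference:
    """One bibliographic record; this choice of fields is an illustrative assumption."""
    authors: tuple[str, ...]  # e.g. ("Author, A.",)
    year: int
    title: str
    journal: str
    volume: str
    pages: str


def _normalise(value):
    """Ignore case and spacing differences so that only substantive errors are flagged."""
    if isinstance(value, str):
        return " ".join(value.lower().split())
    if isinstance(value, tuple):
        return tuple(_normalise(v) for v in value)
    return value


def check_reference(cited: Reference, stored: Reference) -> list[str]:
    """Return the names of the fields in which the cited reference differs from the stored one."""
    return [
        f.name
        for f in fields(Reference)
        if _normalise(getattr(cited, f.name)) != _normalise(getattr(stored, f.name))
    ]


# Hypothetical pre-stored database of correct references (placeholder data only).
DATABASE = {
    "author1960": Reference(
        authors=("Author, A.",),
        year=1960,
        title="An example title",
        journal="Journal of Examples",
        volume="12",
        pages="60-70",
    ),
}


def check_citation(key: str, cited: Reference) -> list[str]:
    """Look up the stored record for `key` and report any mismatching fields."""
    stored = DATABASE.get(key)
    if stored is None:
        return ["not in database"]
    return check_reference(cited, stored)


# A reference as a writer might type it, with two slips.
typed = Reference(
    authors=("Author, A.",),
    year=1961,                   # wrong date of publication
    title="An Example  Title",   # case and spacing differences are ignored
    journal="Journal of Examples",
    volume="12",
    pages="60-71",               # wrong page range
)
print(check_citation("author1960", typed))  # ['year', 'pages']
```

A working tool would also need to find the right stored record when the citation key itself is mistyped, and to draw on a real bibliographic database rather than a small dictionary; both are left out of this sketch.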

Featured image credit: Brainsnorkel (Flickr, CC BY-NC-SA)

Note: This article gives the views of the authors, and not the position of the Impact of Social Science blog, nor of the London School of Economics.

James Hartley is Emeritus Professor in the School of Psychology at Keele University, UK. (E-mail: j.hartley@keele.ac.uk)