Proving research ‘impact’ can be tricky and impact itself can be hard to define, despite expectations from research donors and university evaluations. LSE’s Duncan Green has written a series documenting the impact of research at the LSE Centre for Public Authority and International Development – so what has he learnt about creating impact and how it should be reported?

This post is part of a series evaluating the real-world impact of research on public authority at the LSE Centre for Public Authority and International Development (CPAID) at the Firoz Lalji Institute for Africa.

Being asked to write impact case studies for the research programme at the Centre for Public Authority and International Development (full list of links at the end of this post) was a lot of fun, and a bit unusual. When discussing impact, whether through the REF or the wider demands of self-promotion, institutions normally pick the research that has had the biggest impact, then try and work out why/how. Of course, this results in a massive selection bias. This set of case studies has a couple of those (such as those on hunger courts in South Sudan and Ebola in Liberia) but other research projects don’t claim to have had world-changing impact. In that latter group, it was fascinating to see what influence ‘normal’ research has and why.

Here I’ll try to pull together the ‘learnings’ (vomit emoji) from the six case studies. Let’s start with a famous quote from Milton Friedman, an academic who knew a thing or two about impact.

‘Only a crisis – actual or perceived – produces real change. When that crisis occurs, the actions that are taken depend on the ideas that are lying around. That, I believe, is our basic function: to develop alternatives to existing policies, to keep them alive and available until the politically impossible becomes the politically inevitable.’

These CPAID case studies confirm Friedman’s view on the importance of crises in opening policy windows for researchers, but they also add a lot of detail on what else can help beyond having your ideas ‘lying around’.

Firstly, they highlight a string of necessary-but-not-sufficient conditions, or at least factors that improve your chances of having impact with your research:

Relationships: A lot of the impact came from researchers using their networks – a glass of wine with UN types in Juba, or national researchers embedded in local decision-making systems.

Track Record: The longer you have been in a place, the more credibility and contacts you accumulate. This may not be intentional – you go somewhere to research your PhD without any thought of influence, but years later the people you interviewed can open the door when you return with ideas.

Authentic, high-quality research: Phew – yes, it still matters.

Social Media: If you are on social media, it is much easier to grab one of those windows of opportunity.

Brand: It may well be unfair, even colonial, but it helps if you have LSE after your name.

Findings that are surprising/unexpected: Research that confirms what everyone already thinks or does is understandably unlikely to attract much interest. The academic equivalent of a journalistic ‘man bites dog’ story (such as ‘Ebola death rates were lower in community-run treatment centres than in the official ones’) piques interest and opens doors.

Secondly, you need luck, but not just luck. As Louis Pasteur is often quoted as saying, ‘fortune favours the prepared mind’. Crises can be helpful – scandals, shocks and general meltdowns have policymakers scrambling for solutions with suddenly open minds. But what makes them listen to your research rather than someone else’s?

Often, it comes back to relationships. If you’ve met them, know them, even just interviewed them for your research, decision-makers are more likely to pick up the phone or accept the meeting. But an entrepreneurial mindset also helps – someone who’s willing to go beyond their academic comfort zone, talk to a scary international body, and maybe open doors for more junior research staff, as Tim Allen did for Holly Porter at the ICC.

Finally, how should we attribute impact to a piece of research? The short answer is – with great difficulty.

For a start, it’s a lot easier to claim impact for a given researcher than a specific research output. The REF case studies are full of senior academics who have been invited onto advisory groups or ended up as de facto consultants for decision-makers. But as I found in the case of Melissa Parker’s work on Ebola, which seems to have landed her on the UK COVID advisory committee, those kinds of invitations are often based on reputation/a lifetime’s work rather than a specific piece of research. Pity the poor junior researcher just starting out, who has yet to gain that track record but is still required to ‘prove’ impact.

Digging into that consultant point, consultants come armed with solutions, however specious. Many academics do not; they highlight problems, things that aren’t working and unwarranted assumptions underpinning policy-making. If they succeed in getting a government or international body to think again, they won’t be able to point to their magic bullet/toolkit to prove impact. Tricky.

My overall impression? Impact is important, messy and unpredictable, but there are things you can do to greatly improve your chances of achieving it.

Catch up on the case studies here:

- Supporting early warning systems for famine in South Sudan

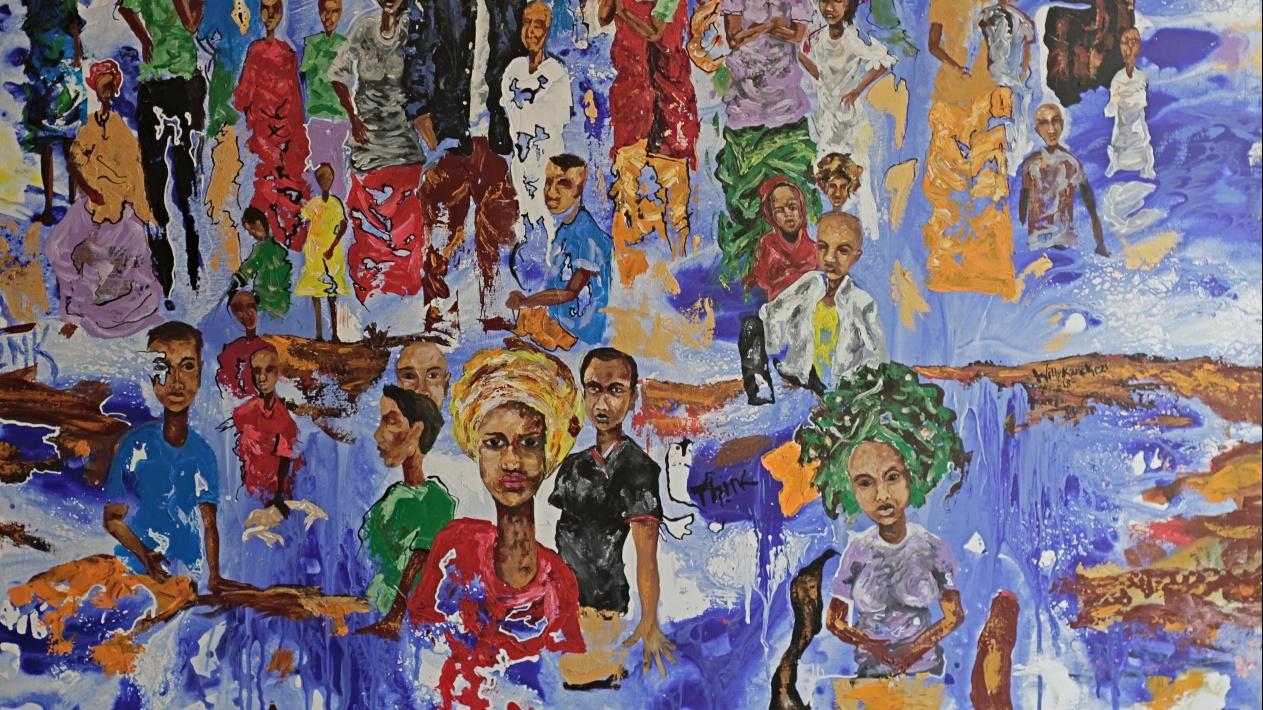

- How an arts project created real-world impact for refugees and formerly displaced persons

- How research into Ebola secured a seat at the table of COVID-19 policymaking

- How research impacted the reform of Ugandan refugee camp aid systems

- ‘New’ issues in development policy drive research impact on Somali state-building

- How research into sexual wronging changed the course of the landmark trial at the ICC

Photo: UNMISS Police Commissioner Fred Yiga visits police posts in Juba. Credit: UNMISS, Licensed under CC BY-NC-ND 2.0.