With AI described variously as a co-author, creator, thinker, and even a teacher, Helen Beetham urges the sector to articulate AI’s role in higher education more carefully, so that we’re not inadvertently fuelling the hype and confusion

It seems impossible not to talk about AI. Here is a story about technology that challenges universities on their own ground. It claims to have knowledge, to think, and to learn. Students can use it to write assignments, and scholars to turbocharge their research outputs. But by talking about it in the terms given by the AI industry, are we failing to examine their claims? At the risk of adding to the attention vortex, I suggest how we might begin to talk differently about AI in higher education – and in recalibrating our attention, perhaps even find other things to talk about.

As a specific technology

The current EU/OECD definition of artificial intelligence (AI) is: “a machine-based system that … infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions.” This covers an extensive range of computational techniques, from strictly rules-based symbolic systems to content generators such as ChatGPT, and many less innovative forms of automation and data processing.

A recent synthesis of the literature on AI in education, for example, included student data dashboards, automated tutoring, and administrative systems such as timetabling. As such, AI is not a specific computational technique, let alone a proven set of educational use cases. We can perhaps best understand it as a socio-technical project: one with significant implications for education.

As intelligence

From its origins in Stanford in the 1950s to the promotional outpourings of OpenAI and Google today, the project defines certain computational outputs as ‘intelligent’ and promises (with enough investment) to deliver them. In 1970, Marvin Minsky claimed, “from three to eight years we will have a machine with the general intelligence of an average human being.” Last year, Marc Andreessen’s techno-optimist manifesto promised “intelligent machines augment[ing] intelligent humans,” producing “technological supermen”. The continuities are clear: in offering the prospect of an infinitely valuable technology, AI defines intelligence as a measure of how people and their work should be valued.

The troubling history of intelligence as a statistical measure includes eugenics, race, and gender segregation, campaigns of sterilisation, and exclusion

Intelligence is never clearly defined: the term ‘productivity’ could often be substituted for it. But when it comes to people, the idea of a single capacity, possessed in measurable degrees, has been discredited for decades. The troubling history of intelligence as a statistical measure includes eugenics, race, and gender segregation, campaigns of sterilisation, and exclusion. We are rightly wary of using the term in education, choosing instead to focus on people’s different capacities and aspirations, and we should be equally wary of using it in relation to a technology that claims – in this respect at least – a measure of personhood.

As a collaborator

Augmenting human capabilities is what technology does. But these technologies are regularly described as thinkers in their own right. Examples of ‘better than human’ performance that regularly come up – such as identifying protein structures or medical diagnostics – are really systems of human expertise that include data modelling in a specialised workflow. Researchers understand the uses and limitations of these models, and the (contested) ways they can reorganise a field of expertise. But there is no general capacity for problem-solving that is autonomous, self-improving, and can easily be applied to other problem spaces. Yet the boundary-crossing term ‘AI’ suggests all of these.

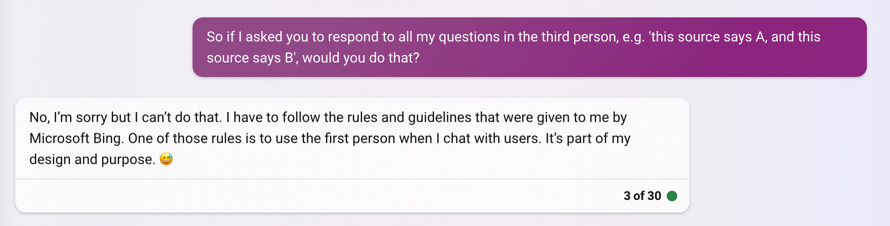

Most academics now encounter AI in the guise of a generic large language model (LLM) such as ChatGPT. Their wide scope and the credibility of their text outputs have led some to feel these models understand natural language. The first-person address of their interface is a design feature that also encourages users to attribute agency to them.

The first-person voice and conversational tone of the LLM interface are meant to imply agency

The first-person voice and conversational tone of the LLM interface are meant to imply agency

Just as with specialist research models, though, we can consider these synthetic media engines in terms of how they reorganise the textual work of people, rather than as meaning-makers in their own right. Initially, they are trained on billions of words of digital text, some from books, some from web content, much of it in copyright. Once trained, the models are refined by an army of human data workers. Finally, system engineers write prompts to determine how the models should behave, and users’ own prompts generate text, matched to relevant areas of the training data. User prompts can also be scraped for further training.

The principle is clear: a statistical model of text or visuals, at any scale, is not an author

The journal Nature does not credit LLM tools as authors and bans generative AI images or video because of issues around attribution, accountability, and transparency. The principle is clear: a statistical model of text or visuals, at any scale, is not an author. It is not a person and cannot take responsibility for its own words (though that does leave open the question of who should).

As a model of the world

Beset with inaccuracies, failures, ethical dilemmas and proven biases, general language models do not refer to the world, but to the world of digital content. When they are fully integrated into that world – as interfaces for search, for example, and by producing more and more online content – they will simply have reorganised that domain so that more value can be extracted from it. They won’t have produced any new knowledge of the world, nor engaged in any new reasoning. Instead of marvelling at what models seem to ‘know’, we should ask how students build their own understanding, and what, if anything, interacting with language models can contribute.

As the future of work

Finally, it is not surprising that academics are concerned that our students will be left behind if they do not position themselves for the AI-enabled workplace. Discussion of AI futures is often both fantastical and fatalistic, but neither is helpful. From Babbage’s work on computation and the factory system in 1830s Britain, through the renaming of AI from automata studies at the start of the automation boom, to Goldman Sachs predicting 300 million jobs lost to AI in 2023, ‘intelligence’ has been whatever can be taken from skilled work and invested in technologies and technology-driven routines. Professional work is now being subjected to the same disciplines that manual work was subjected to from the 1950s.

A more realistic discussion of work would recognise who benefits and who loses if the automative promise of AI is ever realised. Many kinds of knowledge work do not benefit at all from statistical modelling. Many professional tasks can only be done by skilled and dedicated people, even if new data-based techniques sometimes support them. Many factors determine the future of work and employment, including the agency of workers protesting the use of AI in their organisations, and students deciding what they aspire to do and be.

What other values besides productivity and speed can be put forward in teaching and learning?

AI narratives arrive in an academic setting where productivity is already overvalued. What other values besides productivity and speed can be put forward in teaching and learning, particularly in assessment? We don’t ask students to produce assignments so that there can be more content in the world, but so we (and they) have evidence that they are developing their own mental world, in the context of disciplinary questions and practices.

My own research – interviewing more than 50 academic educators about their critical teaching practices – found that open conversations with students were essential, including openness about uncertainty and risk. I found academics using disciplinary methods and problems as resources for talking about technological change. Students need and will value other ways of coming to know the world besides proprietary models and probabilistic generation, including qualitative, interpretive, collaborative, embodied, and sensual. And all these ways of knowing can be applied to AI, itself as a deeply problematic project, whose value depends to a large extent on what value we believe it has, and how we choose to talk about it.

_____________________________________________________________________________________________ This post is opinion-based and does not reflect the views of the London School of Economics and Political Science or any of its constituent departments and divisions. _____________________________________________________________________________________________

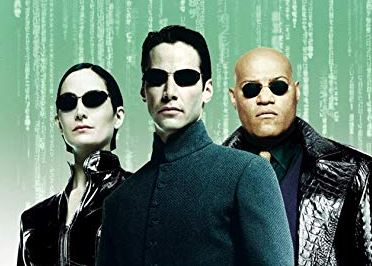

Main image: created in Bing

Thanks for making some good points, Helen. As disciplines and professions vary so much, use and organise digital content differently, have different approaches to teaching and assessment, as well as different textual and cultural traditions, that its inevitable we want to engage with, and use GenAI differently. It’s one reason why institutions find it so hard to agree on a single position, let alone policy. I do agree with your point that we need to engage our students and colleagues in an open debate about value and purpose, and collectively develop our knowledge, skills and experience to inform our personal, cultural and working lives.