Non-academics with extensive experience of particular sectors and industries can provide unique insights into the potential pathways to impact for new research projects. Drawing on a quasi-natural experiment comparing assessment panels with and without non-academic experts, Junwen Luo discusses how these skills were perceived by academics and how the inclusion of non-academics might benefit from clearer definitions of impact in applied research grants.

Research funding agencies are increasingly using mixed review panels to facilitate broader discussions on the prospective impact of research proposals. Such mixed panels include traditional peer reviewers from the scholarly community, but also non-academic reviewers. Non-academic reviewers are stakeholders with relevant expertise, experiences, and perspectives in the proposed research areas and come from industry, government, public agencies etc. Research in organisations, juries, and other settings has repeatedly shown that a diversity of backgrounds (discipline, gender, race, and other characteristics) enhances collective decision-making. In short, diverse groups bring different experiences to bear on a problem, with positive effects for legitimacy and creativity.

Perhaps the most studied example of mixed panels is the UK’s REF 2014, which involved over 250 non-academics (accounting for 23% of panel members) in impact panels to discuss retrospectively the use and influence of scientific findings in the real world. In this instance, researchers have found that REF’s academic and non-academic reviewers’ interpretations of the impact criterion varied widely, especially their perceptions of what constituted excellence in impact and good evidencing of impact.

There are also examples of non-academics’ involvement in ex ante grant evaluations (those that occur prior to research grants being made), many in health research fields and clinical practices. Examples include the United States’ Congressionally Directed Medical Research Program, the Dutch Heart Foundation, and a provincial health care provider in Canada. The Canadian agency, for instance, incorporated ‘user evaluators’ who had experience representing the community, had been diagnosed with the relevant condition themselves, and/or had been a caregiver of someone diagnosed with the condition. Some programmes targeted at industrial innovation have also involved industrial experts in their review panels, such as the ‘Industrial Leadership’ pillar of the Horizon 2020 Framework Programme and ‘Innovation Projects for the Industrial Sector’ at the Research Council of Norway. Several scholars have suggested that education and public outreach professionals and other user representatives be included in U.S. National Science Foundation panels.

In a study I undertook with Lai Ma and Kalpana Shankar, we compared two types of panels for impact assessment at Science Foundation Ireland (SFI). In 2014 and 2015, SFI organised two separate panels for its Investigators Programme (IvP): a science panel involving only academic peers to evaluate scientific excellence and an impact panel mixing academic peers and non-academic stakeholders. In 2016, both the science and impact sections of proposals were reviewed by a comprehensive panel consisting exclusively of academics. Academic reviewers for SFI all come from outside of Ireland to avoid conflicts of interest in this small country, while most of the non-academic reviewers are from local regions. This structure created a “natural experiment” that allowed us to explore the contribution of non-academic reviewers in grant proposal impact analysis. To that end, we analysed the review reports, written by individual reviewers at the end of their panel discussion, conducted a survey of SFI reviewers (N=310), and interviewed some of them (N=16).

We found that the inclusion of non-academic reviewers enriched and deepened the impact panel discussions with broader perspectives and distinctive insights, especially on the topics of national and local beneficiaries and contextual pathways of impact. Compared with purely academic panels, mixed panels’ comments on impact focused more on characteristics of applicants (e.g. performance and resources of the applicant team) and research process (e.g. training, education, collaboration, and public outreach activities), rather than scientific achievements (e.g. publications). Notably, industrial reviewers provided a valuable ‘reality check’ for use-oriented applied research projects, as one academic interviewee put it:

‘They [industrial reviewers] certainly look for different things and I quite like them to sit into these panels because they have a completely different take on a biological problem…there are in a way also knowledge for us [academic reviewers]…I do not know [how to] develop a new therapeutic approach for disease excellence. Of course, I write [about] it but I do not really have any clue on how to translate from the bench to the bedside. These people do because it is what they have worked all their lives…They really have the feeling on what will work and what will not work’.

At the same time, academic reviewers shared some concerns about the inclusion of non-academic reviewers. They were concerned that non-academics may not be able to completely understand the research and review processes and thus be overly cautious about seemingly unrealistic, risky, and surprising ideas. Some academics thought that their fellow academics could more accurately assess the potential impact of their colleagues’ work. Especially for basic science research proposals with more distance from end users, some opponents believed that the agendas of industry-based reviewers (regardless of vetting for conflicts of interest) could threaten the funding of excellent basic research since such projects tended to have more uncertain and unpredictable pathways to impact and need longer timeframes to realise that impact.

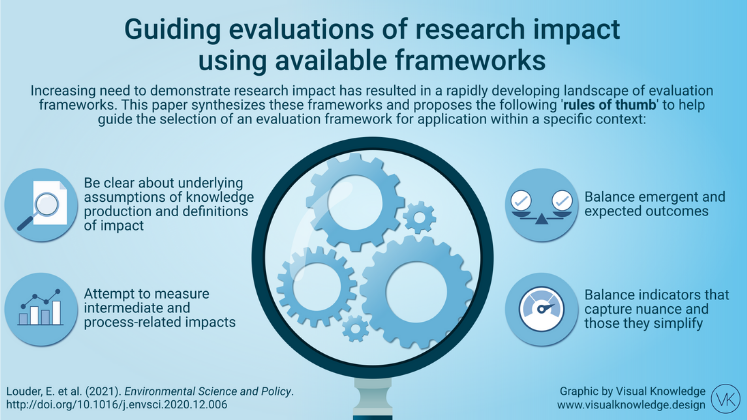

Our study suggests that without clear frameworks, including what ‘impactful research’ means, for whom, and according to what criteria, it can be easy for non-academic evaluators to be misused in grant review panels. To promote the alignment between the value added by non-academics and the context in which they are being used, the objectives, targeted research fields and orientations of the funding programme should guide clearly what types of impact are possible, what criteria are to be used to assess the impact pathways, and who should evaluate them. The ambition to achieve a full list of all types of impact from differently oriented research projects can be the biggest challenge for panels when recommending funding allocation.

The study suggests it is beneficial to involve non-academic reviewers for narrowly defined, impact-targeted grants (e.g. grants oriented towards industrial application or problem solving) where user perspectives and experiences are explicitly and widely valued. To take advantage of this, funding agencies could further categorise the impact types targeted by their funding programmes, especially for large and complex programmes that fund various research fields and orientations. This could help agencies refine their impact criteria and guidance for reviewers and decide how and when involving non-academics is most beneficial.

This post draws on the author’s co-authored paper, Does the inclusion of non-academic reviewers make any difference for grant impact panels?, published in Science and Public Policy.

Note: This article gives the views of the authors, and not the position of the LSE Impact Blog, nor of the London School of Economics.

Image Credit: Adapted from Vinicius “amnx” Amano via Unsplash.
