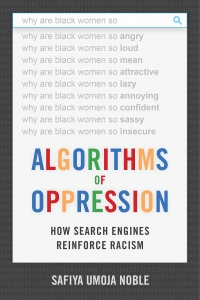

In Algorithms of Oppression: How Search Engines Reinforce Racism, Safiya Umoja Noble draws on her research into algorithms and bias to show that online search results are far from neutral: instead, they replicate and reinforce racist and sexist beliefs that reverberate in the societies in which search engines operate. This timely and important book sheds light on the ways that search engines impact on our modes of understanding, knowing and relating, writes Helen Kara.

This book review has been translated into Mandarin by Chloe Yige Xiao 肖怡格 (LN814, teacher Dr Lijing Shi) as part of the LSE Reviews in Translation project, a collaboration between LSE Language Centre and LSE Review of Books. Please scroll down to read this translation.

Algorithms of Oppression: How Search Engines Reinforce Racism. Safiya Umoja Noble. New York University Press. 2018.

Find this book (affiliate link):

Google search results are racist and sexist. Perhaps you know this already or maybe it comes as a surprise. Either way, Algorithms of Oppression: How Search Engines Reinforce Racism by Safiya Umoja Noble will have something to offer. Noble is an associate professor at UCLA in the US, and her book is based on six years of research into algorithms and bias.

As the book’s subtitle suggests, algorithmic bias is not unique to Google. However, Google is the dominant search engine, such that its name is now in common use as a verb. Pretty much everyone with internet access uses search engines, and most of us use Google. Many people regard Google as neutral, like a library (which, of course, wouldn’t be neutral either, though that is a different discussion). However, Google is not neutral: it is a huge commercial corporation which is motivated by profit. The ranking system used by Google leads people to believe that the top sites are the most popular, trustworthy and credible. In fact, they may be those owned by the people most willing to pay, or by people who have effectively gamed the system through search engine optimisation (SEO).

Ten years ago, a friend of Noble’s suggested that she google ‘black girls’. She did, and was horrified to discover that all the top results led to porn sites. By 2011 she thought her own engagement with Black feminist texts, videos and books online would have changed the kinds of results she would get from Google – but it had not. The top-ranked information provided by Google about ‘black girls’ was that they were commodities for consumption en route to sexual gratification.

When problems such as those that Noble experienced are pointed out to Google representatives, they usually say either that it’s the computer’s fault or that it’s an anomaly they can’t control. This reinforces the misconception that algorithms are neutral. In fact, algorithms are created by people, and we all carry biases and prejudices which we write into the algorithms we create.

Interestingly, Noble reports that in an article written while they were doctoral students at Stanford, Google’s founders, Sergey Brin and Larry Page, recognised that commercial agendas had the potential to skew search results. At that very early stage, they suggested that it was in the public interest not to have search engines influenced by advertising or other forms of commercialism.

Porn makes money. If you have money, and you pay Google, you can generate more hits for your website. The internet has a reputation as a democratic space, yet the commercial interests that influence what we can find online are largely invisible. The internet is far from being a space that represents democracy, and what is more, people are now using the internet to affect offline democracy.

Image Credit: (Pixabay CC0)

After the book’s first two long chapters about searching online in general, and the results of Noble’s internet search for ‘black girls’, there is a short and vivid chapter in which Noble forensically implicates Google in the radicalisation of Dylann Roof. Roof is a young white American who carried out a terrorist attack on African Americans who were worshipping at their Christian church, killing nine people. Noble is careful in this chapter to use the word ‘alleged’: Roof allegedly searched for information to help him understand the killing of Black teenager Trayvon Martin by a white neighbourhood watch volunteer who was acquitted of murder. Allegedly, using the term ‘black on white crimes’, he found conservative, white-run websites preaching white nationalism for the US and Europe, and encouraging racial hatred, particularly of Black and Jewish people. Noble used the same term and found similar results under different research conditions. She notes that Roof’s alleged search term did not lead to FBI statistics which show that violent crime in the US is primarily an intra-racial, rather than an inter-racial, problem. Presumably white nationalist sites are willing to pay Google more, and/or put more time into SEO, than the FBI. Noble concludes that search engines ‘oversimplify complex phenomena’ and that ‘algorithms that rank and prioritize for profits compromise our ability to engage with complicated ideas’ (118).

There are three more chapters: ‘searching for protections from search engines’, ‘the future of knowledge in the public’ and ‘the future of information culture’. Towards the end of the middle chapter of the three is an impactful statement:

Search does not merely present pages but structures knowledge, and the results retrieved in a commercial search engine create their own particular material reality. Ranking is itself information that also reflects the political, social, and cultural values of the society that search engines operate within… (148)

Technology and the internet don’t simply inform or reflect society back to us in a perfect facsimile as if the screen were a mirror. They change how we understand ourselves and our cultures and societies. One particularly complicated idea, which Noble expresses succinctly, is that ‘we are the product that Google sells to advertisers’ (162). Also, as we interact with technology and the internet, they change us and our networks and surroundings. This applies to journalism too; yet while there is plenty of room to critique journalism for ‘fake news’ and bias and so on, there are also journalistic codes of ethics against which journalists can be held to account. There is no equivalent for those who write algorithms, create apps or produce software or hardware.

As Noble underscores, Google is so massive that it’s hard to comprehend. It set up its parent company, Alphabet, in 2015, apparently to ‘improve transparency’, among other things. Alphabet is growing rapidly. At the end of 2017 it employed 80,110 people and one year later it had 98,771 staff. Its revenue in 2018 was $136.8 billion. Its users account for around half of the world’s internet users. Google’s stated aim is to ‘organize the world’s information’. It seems a little sinister, then, at least to me, that Alphabet has recently expanded into drone technology and behavioural surveillance technologies such as Nest and Google Glass. There appears to be an almost complete lack of any moral or ethical dimension for the organisation of information to which Google aspires. The BBC recently reported that online platforms use algorithms that are built to reward anything that goes viral, which means the more outrageous the content, the more revenue is generated. For example, I recently saw in several places on social media a link to a website with a woman’s skirt for sale bearing a printed photographic image of the gas chambers at Auschwitz, which was later reported in the mainstream media. This is the kind of phenomenon we invite when we allow organisations to become monopolies driven by commercial rather than public interests.

Noble doesn’t try to offer an exhaustive account of all the ways in which the internet affects inequalities. That would certainly require a much longer book and would probably be impossible. She does address a number of power imbalances that intersect with her core focus on race and gender, such as antisemitism, poverty, unemployment, social class and radicalisation. She writes that her ‘goal is […] to uncover new ways of thinking about search results and the power that such results have on our ways of knowing and relating’ (71). I would judge that she has achieved her aim in this important and timely book.

Note: This review gives the views of the author, and not the position of the LSE Review of Books blog, or of the London School of Economics and Political Science. The LSE RB blog may receive a small commission if you choose to make a purchase through the above Amazon affiliate link. This is entirely independent of the coverage of the book on LSE Review of Books.

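Google’s search engine is racist and sexist. Perhaps you knew this already, or perhaps it comes as a surprise. Either way, Safiya Umoja Noble’s Algorithms of Oppression: How Search Engines Reinforce Racism has some insights to offer. Noble is an associate professor at UCLA, and the book is based on her six years of research into algorithms and bias.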

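As the book’s subtitle suggests, algorithmic bias is not only Google’s problem. However, Google is the largest search engine, so much so that its name is now commonly used as a verb. Almost everyone with internet access uses search engines, and most people use Google. Many people regard Google as neutral, like a library (though that does not mean a library is necessarily neutral either; that is another matter). Yet Google is not neutral: it is a huge commercial corporation driven by profit. Google’s ranking system leads people to believe that the sites at the top are the most popular, reliable and credible. In fact, the operators of these sites may simply be those most willing to pay, or those who have gamed the system through search engine optimisation (SEO).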

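Ten years ago, a friend of Noble’s suggested that she search Google for ‘black girls’. She did, and was horrified to find that the top results were all porn sites. By 2011 she assumed that her own engagement with Black feminist texts, videos and books online would have changed the results Google gave her, but it had not. The top-ranked information Google provided presented ‘black girls’ as commodities for sexual gratification.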

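When problems like those Noble experienced are pointed out, Google spokespeople either claim that it is the computer’s fault or say that it is an anomaly they cannot control. Such statements reinforce the misconception that algorithms are neutral. In fact, algorithms are designed by people; we all have our own biases and prejudices, and these are written into the algorithms we create.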

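Interestingly, Noble points out that in an article written while they were doctoral students at Stanford, Google’s founders, Sergey Brin and Larry Page, acknowledged that commercial motives could skew search results. At that early stage, they suggested that, in the public interest, search engines should not be influenced by advertising or other forms of commercialism.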

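Porn makes money. If you have money and pay Google, you can increase the hits on your website. The internet has a reputation as a ‘democratic space’, yet the influence of commercial interests on what we find when we search online goes largely unnoticed. The internet is far from being a space that represents democracy; what is more, people now use the internet to affect offline democracy.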

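After two long chapters on searching online in general and on the results of Noble’s internet search for ‘black girls’, a short and vivid chapter follows in which Noble implicates Google in the radicalisation of Dylann Roof. Roof is a young white American who carried out a terrorist attack on African Americans worshipping at a Christian church. Noble is careful in this chapter to use the word ‘alleged’: Roof allegedly searched for information to help him understand the killing of Black teenager Trayvon Martin by a white neighbourhood watch volunteer. Allegedly, using the search term ‘black on white crimes’, he found conservative, white-run websites that preached white nationalism for the US and Europe and encouraged racial hatred, particularly of Black and Jewish people.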

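Noble used the same search term and found similar results under different research conditions. She notes that Roof’s alleged search term did not lead to FBI statistics showing that violent crime in the US is primarily intra-racial rather than inter-racial. Presumably this is because white nationalist sites are more willing to pay Google, and put more effort into SEO, than the FBI. Noble concludes that search engines ‘oversimplify complex phenomena’ and that ‘algorithms that rank and prioritize for profits compromise our ability to engage with complicated ideas’ (118).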

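The book has three further chapters: ‘searching for protections from search engines’, ‘the future of knowledge in the public’ and ‘the future of information culture’. Towards the end of the middle chapter is an impactful statement: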

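Search does not merely present pages but structures knowledge, and the results retrieved in a commercial search engine create their own particular material reality. Ranking is itself information that also reflects the political, social, and cultural values of the society that search engines operate within… (148)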

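The screen is not a mirror: technology and the internet do not simply inform us or reflect society back to us perfectly. They change how we understand ourselves, our cultures and our societies. Noble succinctly expresses a particularly complex idea: ‘we are the product that Google sells to advertisers’ (162). Also, as we interact with technology and the internet, they change us, our surroundings and our online networks. This applies to journalism too; although there is plenty of room to criticise journalism for ‘fake news’ and bias, journalists are still accountable to codes of ethics. Those who write algorithms, develop apps or produce software and hardware are bound by no equivalent code.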

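As Noble emphasises, Google is so massive that it is hard to comprehend. In 2015 Google set up its parent company, Alphabet, partly to ‘improve transparency’. Alphabet is growing rapidly. At the end of 2017 it employed 80,110 people; one year later it had 98,771 staff. Its revenue in 2018 was $136.8 billion. Its users account for around half of the world’s internet users. Google’s stated aim is to ‘organize the world’s information’.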

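To me at least, Alphabet’s recent expansion into drone technology and behavioural surveillance technologies such as Nest and Google Glass seems a little sinister. The organisation of information to which Google aspires appears to lack any ethical or moral dimension.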

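The BBC recently reported that online platforms use algorithms that reward anything that goes viral, which means that the more outrageous the content, the more revenue it generates. For example, I recently saw in several places on social media a link to a website selling a woman’s skirt printed with an image of the gas chambers at Auschwitz, which the mainstream media later reported. This is the kind of phenomenon we invite when we allow monopolies to be driven by commercial rather than public interests.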

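Noble does not try to offer an exhaustive account of all the ways in which the internet affects inequalities. That would certainly require a much longer book and would probably be impossible. She does, however, address a number of power imbalances that intersect with her core focus, such as antisemitism, poverty, unemployment, social class and radicalisation. She writes that her ‘goal is […] to uncover new ways of thinking about search results and the power that such results have on our ways of knowing and relating’ (71). I would judge that she has achieved her aim in this important and timely book.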