Research metrics are currently being debated across the UK. With last week’s 1AM conference discussing alternative metrics and this week’s “In metrics we trust?” event, part of the Independent Review of the Role of Metrics in Research Assessment, the uses and misuses of metrics are under close scrutiny. Cameron Neylon reports back from last week’s altmetrics conference and looks at the main motivations for, and applications of, new data sources in building a better scholarly communication system.

Last week, along with a number of PLOS folks, I attended the 1AM Meeting (for “First (UK) Altmetrics Conference”) in London. The meeting was very interesting, with a lot of technical progress on display and real interest from potential users of the various metrics and indicators that are emerging. Bubbling along underneath all this, however, was an outstanding question that surfaced in different contexts but remains unanswered: what do these various indicators mean? Or, perhaps more sharply, what are they useful for?

This question was probably most sharply raised in the online discussion by Professor David Colquhoun. David is a consistent and trenchant critic of all research assessment metrics. I agree with many of his criticisms of the use and analysis of all sorts of research metrics, while disagreeing with his overall position of rejecting all measures, all the time. Primarily, this comes down to our being interested in different questions. David’s challenge can perhaps be summed up in his tweet: “I’d appreciate an example of a question that CAN be answered by metrics”. Here I will give some examples of questions that can (or could in the future) be answered, with a focus on non-traditional indicators.

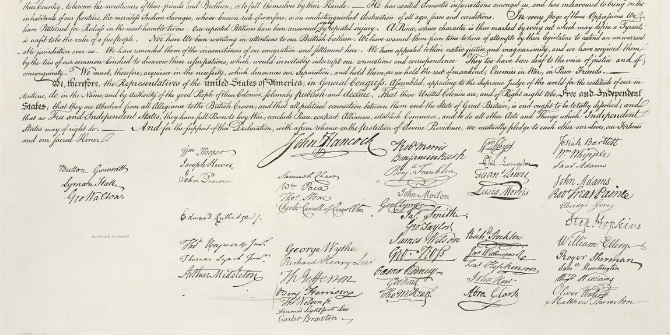

Image credit: Cambodia4kids.org Beth (Flickr, CC BY)

Provide evidence that…

Much of the data we have is sparse. That is, the absence of an indicator cannot reliably be taken to mean an absence of activity. For example, a lack of Mendeley bookmarks may not mean that a paper is not being saved by researchers, just that those who save it are not using Mendeley to do so. A lack of tweets about an article does not mean it is not being discussed. But we can use the data that does exist to show that some activity is occurring. Some examples might include:

- Provide evidence that relevant communities are aware of a specific paper. While using Altmetric.com to look for UCT papers with South African Twitter activity, I found that this paper was being mentioned by crisis centres, sexual health organisations and discrimination support groups in South Africa.

- Provide evidence that this relatively under-cited paper is having a research impact. There is a certain kind of research article, often a method description or a position paper, that is influential without being (apparently) heavily cited. For instance, this article has a respectable 14,000 views and 116 Mendeley bookmarks but, for that number of views, relatively few Web of Science (WoS) citations (19), compared with, say, this article, which is similar in age and number of views but has many more citations.

- Provide evidence of public interest in… A lot of the very top articles by views or social media mentions are of ephemeral (or prurient) interest: the usual trilogy of sex, drugs, and rock and roll. However, dig a little deeper and a wide range of articles surface, often not highly cited but clearly of wider interest. This article, for instance, has high page views and Facebook activity among papers with a Harvard affiliation but is about neither sex, drugs nor rock and roll. Unfortunately, because this is Facebook data, we can’t see who is talking about it, which limits our ability to say which publics are engaging with it. That could be quite interesting.
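The phrase “relatively (for the number of views)” in the under-cited example is a normalisation: counts only become comparable once they are scaled by how often the article was read. A minimal sketch in Python, using only the three figures quoted above for that article; the helper name `per_thousand_views` is hypothetical, and no figures are given for the comparison article, so it is left out rather than invented.

```python
def per_thousand_views(count: int, views: int) -> float:
    """Hypothetical helper: events (citations, bookmarks, ...) per 1,000 views."""
    if views <= 0:
        raise ValueError("views must be positive")
    return 1000 * count / views


# Figures quoted in the text for the under-cited article.
views = 14_000
mendeley_bookmarks = 116
wos_citations = 19

bookmark_rate = per_thousand_views(mendeley_bookmarks, views)
citation_rate = per_thousand_views(wos_citations, views)

print(f"Mendeley bookmarks per 1,000 views: {bookmark_rate:.1f}")
print(f"WoS citations per 1,000 views: {citation_rate:.1f}")
```

On these numbers the article picks up roughly 8.3 Mendeley bookmarks but only about 1.4 WoS citations per 1,000 views. Running the same calculation on the comparison article would show how much higher its citation rate is. Because the underlying data are sparse, such a rate is a floor on activity in that channel, not a full measure of it.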

Compare…

Comparisons using social media or download statistics need real care. As noted above, the data are sparse, so it is important that comparisons are fair. Comparisons also need to rest on something the data can actually tell you (“which article is discussed more by this online community”, not “which article is discussed more”).

- Compare the extent to which these articles are discussed by this online patient group or, more generally, by specific online communities. Here the online community might be a proxy for a broader community, or there might be a specific interest in knowing whether the dissemination strategy reaches that community. It is clear that in the longer term social media will be a substantial pathway for research to reach a wide range of audiences; understanding which communities are discussing what research will help us to optimise that communication.

- Compare the readership of these articles in these countries. Most data sources are currently very weak on demographics, but in principle the data is there. Are articles that deal with diseases of specific regions actually being viewed by readers in those regions? If not, why not? Do those readers have internet access? Could lay summaries improve dissemination? Are they going to secondary online sources instead?

- Compare the communities discussing these articles online. Is most conversation driven by science communicators or by researchers? Are policy makers, or those who influence them, involved? What about practitioner communities? These comparisons require care, and simple counting rarely provides useful information. But understanding which people within which networks are driving conversations can give insight into who is aware of the work and whether it is reaching target audiences.

What flavour is it…

Priem, Piwowar and Hemminger (2012), in what remains in my mind one of the most thoughtful analyses of the PLOS Article Level Metrics dataset, used principal component analysis to define different “flavours of impact”, based on the way different combinations of signals seemed to point to different kinds of interest. Many of the use cases above are variants on this theme: what kind of article is this? Is it a policy piece, or of public interest? Is it of interest to a niche research community, or does it have wider public implications? Is it being used in education or in health practice? And to what extent are these different kinds of use independent of each other?
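The idea of a “flavour” can be illustrated with a generic principal component analysis: given a matrix of article-level counts, PCA finds combinations of indicators that tend to move together. The sketch below is not a reproduction of Priem, Piwowar and Hemminger’s analysis. It assumes NumPy, a handful of hypothetical indicator columns, and toy numbers invented for illustration (not drawn from the PLOS dataset); the log transform and per-column standardisation are assumed preprocessing choices, common for heavily skewed count data.

```python
import numpy as np


def principal_components(signals: np.ndarray):
    """Generic PCA on an (articles x indicators) count matrix.

    Counts are log1p-transformed because altmetric counts are heavily skewed,
    then each column is standardised so no indicator dominates by scale alone.
    Returns (explained_variance_ratio, loadings), largest component first;
    loadings[:, k] gives the weight of each indicator on component k.
    """
    x = np.log1p(signals.astype(float))
    std = x.std(axis=0, ddof=1)
    std[std == 0] = 1.0  # guard against an indicator that never varies
    z = (x - x.mean(axis=0)) / std
    cov = np.cov(z, rowvar=False)  # indicators are columns
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]  # re-sort, largest variance first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    return eigvals / eigvals.sum(), eigvecs


# Hypothetical indicators and toy counts, invented purely for illustration.
indicators = ["views", "mendeley", "citations", "tweets", "facebook"]
signals = np.array([
    [12000, 150, 60, 2, 5],
    [9000, 120, 45, 1, 3],
    [15000, 90, 30, 40, 120],
    [20000, 30, 8, 150, 400],
    [18000, 25, 5, 200, 350],
    [7000, 80, 35, 0, 1],
    [11000, 60, 20, 20, 60],
    [25000, 40, 10, 300, 600],
])

ratios, loadings = principal_components(signals)
for k in range(2):
    weights = ", ".join(
        f"{name}={w:+.2f}" for name, w in zip(indicators, loadings[:, k])
    )
    print(f"PC{k + 1} ({ratios[k]:.0%} of variance): {weights}")
```

The sign of each component is arbitrary. What matters is which indicators carry large weights together, for example bookmarks and citations on one side and tweets and Facebook shares on the other. PCA is itself built from the correlation structure of the data, so it describes which signals co-vary, not the pathways that produce them.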

I’ve been frustrated for a while with the idea that correlating one set of numbers with another could ever tell us anything useful. It’s important to realise that these data are proxies of things we don’t truly understand. They are signals of the flow of information down paths that we haven’t mapped. To me this is the most exciting possibility, and one we are only just starting to explore. What can these signals tell us about the underlying pathways down which information flows? What can different combinations of signals tell us about who is using that information now, and how they might apply it in the future? Correlation analysis can’t tell us this, but more sophisticated approaches might. And with that information in hand we could truly design scholarly communication systems to maximise their reach, value and efficiency.

Some final thoughts

These applications largely relate to the communication of research to non-traditional audiences, and none of them directly tells us about the “importance” of any given piece of research. The data is also limited and sparse, so comparisons should be viewed with care. But the data is becoming more complete, and patterns are emerging that may let us determine not so much whether one piece of work is “better” than another as what kind of work it is: who is finding it useful, and down what kinds of pathways is the information flowing?

Fundamentally, there is a gulf between the idea of some sort of linear ranking of “quality”, whatever that might mean, and the qualities of a piece of work. “Better” makes no sense at all in isolation. It’s only useful if we say “better at…” or “better for…”. Counting anything in isolation makes no sense, whether it’s citations, tweets or distance from Harvard Yard. Using data to help us understand how work is being, and could be, used does make sense. Building models, critiquing them, checking the data and testing those models to destruction will help us to build better communication systems.

Gathering evidence, to build and improve models, to apply in the real world. It’s what we scholars do after all.

This piece originally appeared as “Altmetrics: What are they good for?” on the PLOS Opens blog and is licensed under a Creative Commons Attribution 4.0 International License.

Note: This article gives the views of the author, and not the position of the Impact of Social Science blog, nor of the London School of Economics. Please review our Comments Policy if you have any concerns on posting a comment below.

Cameron Neylon is a biophysicist and well-known advocate of opening up the process of research. He is Advocacy Director for PLOS and speaks regularly on issues of Open Science, including Open Access publication, Open Data and Open Source, as well as the wider technical and social issues of applying the opportunities the internet brings to the practice of science. He was named a SPARC Innovator in July 2010 and is a proud recipient of the Blue Obelisk for contributions to open data. He writes regularly at his blog, Science in the Open.
