Recent assessments have found that racial and ethnic minorities have less political knowledge compared to Whites. But could this be due to the way that questions are asked rather than a true knowledge gap? Efrén Pérez tests this theory by providing a richer set of political questions to Whites and Latinos, which include Latino-themed questions. He finds that when unbiased questions are used, the knowledge gap between the two groups disappears.

Imagine a White student and a Latino student taking a test that evaluates intelligence. One question asks them to complete the analogy “Runner is to marathon as”: a) Envoy is to embassy; b) Martyr is to massacre; or c) Oarsman is to regatta. The White student correctly answers “c.” The Latino student does not. Is the latter pupil less smart? Only if regattas (boat races) are equally prevalent in the communities where Whites and Latinos typically reside. Latinos often live in lower-income, segregated neighborhoods, hardly the stuff of regattas.

This example prompted me to study whether something similar is afoot in assessments of Americans’ political knowledge. People’s level of political information is deemed a workhorse variable in public opinion research. Possessing more political knowledge makes people more interested and active in politics. It enables them to pinpoint and follow their political interests. And it endows them with information to counter-argue. Many scholars believe political knowledge is reflected in the political facts individuals possess, so this trait is often measured by posing quiz-like questions to people, e.g., “Whose responsibility is it to determine if a law is constitutional or not?” These measures often indicate that Whites hold much more political knowledge than non-Whites. Is this evidence that minorities are politically inattentive and under-engaged?

Only if the equivalents of regattas are absent from researchers’ inventory of political knowledge questions. I highly doubt this, though. Scholars have generally validated these questions in surveys where White adults predominate. Yet the US is rapidly diversifying, and the bank of questions that perform impartially in heterogeneous contexts has not kept pace. Many regattas likely dot the fleet of items available to capture knowledge, thereby distorting the conclusions we draw about political information levels among racial/ethnic minorities.

For example, scales of quiz-like items suggest Latinos are between 15 and 30 percent less politically informed than Whites. This yawning gap is probably inflated by subtle biases. Because the universe of what people could know about politics is vast and unknown, scholars have delimited knowledge to facts about people, procedures, and happenings in politics. This has yielded factual items that scale nicely: a person’s response to one question is robustly correlated with their responses to other similar questions. Yet such items might also tap aspects of politics that some groups attend to more.

Consider the question “How much of a majority is required for the US Senate and House to override a presidential veto?” On average, this item will be within reach of a White person with at least a high school education, since it broaches a civics fact often covered in US secondary schools. Yet this item will be harder to answer for an equally educated Latino who was partially or completely schooled outside the US, a likely prospect given the large number of foreign-born Latinos. This item will therefore make Latinos seem less informed than they actually are.

The solution here is to field richer question sets while determining which items perform impartially across groups. The goal is not to swap knowledge items that are “stacked against” one group for items that are “stacked in favor” of another. It is to design items that adhere to a common formulation of political knowledge and perform equally across groups. Hence, I asked a nationally representative sample of White and Latino adults eight knowledge questions in randomized order. Five of these were traditional civics items: 1) Which is the more conservative political party? 2) Whose responsibility is it to determine a law’s constitutionality? 3) What majority is required to override a presidential veto? 4) What office does John Roberts hold? 5) What job does John Boehner hold? The remaining three items were Latino-themed: 1) What job does Marco Rubio hold? 2) What office does Sonia Sotomayor occupy? 3) What government institution implemented the 2012 stay of deportation for undocumented immigrants?
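Randomizing item order, as described above, keeps any single question’s position in the sequence from systematically shaping answers (a question-order effect). The Python sketch below shows one way to give each respondent an independent, reproducible ordering. The item labels, the `item_order` helper, and the seeding scheme are hypothetical illustrations, not the survey’s actual implementation.

```python
import random

# Hypothetical short labels for the eight items described above.
TRADITIONAL = ["conservative_party", "constitutionality", "veto_override",
               "john_roberts", "john_boehner"]
LATINO_THEMED = ["marco_rubio", "sonia_sotomayor", "deportation_stay"]
ITEMS = TRADITIONAL + LATINO_THEMED


def item_order(respondent_id: int, base_seed: str = "knowledge-module") -> list[str]:
    """Return a fresh random ordering of all eight items for one respondent.

    Seeding a private Random instance with the respondent ID makes each
    ordering reproducible without touching the global random state.
    """
    rng = random.Random(f"{base_seed}-{respondent_id}")
    order = ITEMS.copy()  # shuffle a copy, never the master list
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    for rid in range(3):
        print(rid, item_order(rid))
```

Using a private `random.Random` per respondent, rather than the module-level functions, means the orderings can be regenerated later for auditing without depending on the order in which respondents were processed.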

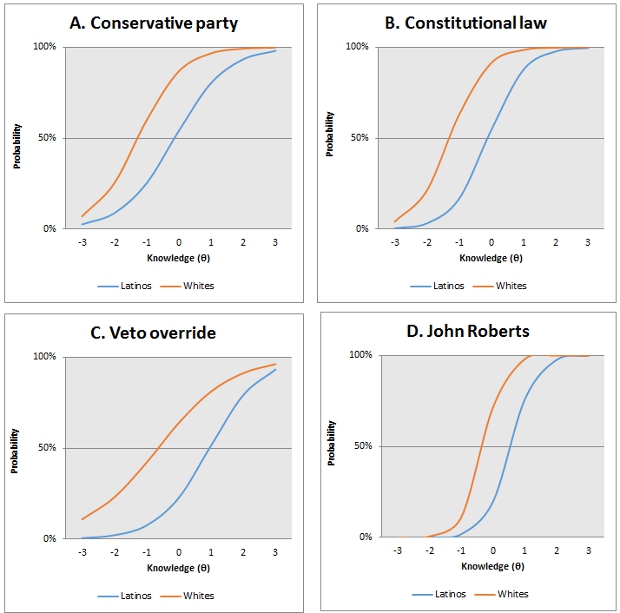

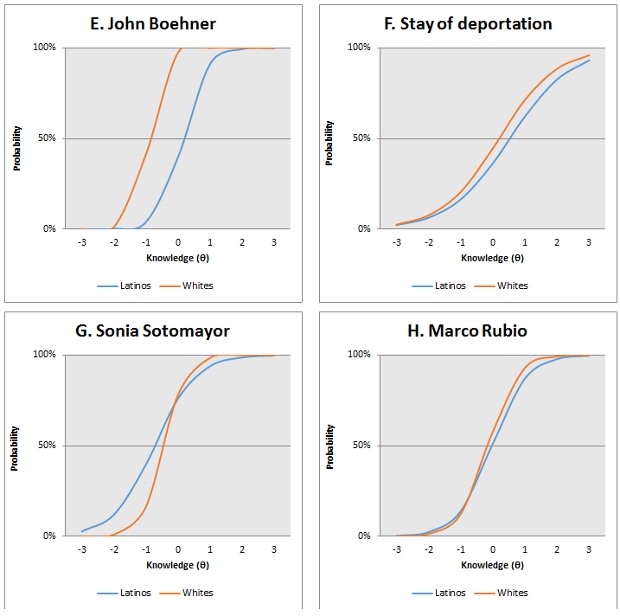

All these items hew to political knowledge’s usual conceptualization. But are any of them regattas? The item characteristic curves (ICCs) in figures 1a through 1h provide some clues. Each figure’s y-axis displays the probability of correctly answering an item, and its x-axis displays a person’s level of political knowledge, denoted here by θ. A curve’s position along the x-axis reveals how difficult an item is to answer, and its steepness reveals how well the item distinguishes between people near that difficulty level. Thresholds with positive (negative) values indicate harder (easier) items, as it takes more (less) knowledge to answer them. Loadings with higher positive values indicate that an item crisply distinguishes between individuals at a given threshold, as evidenced by an ICC’s steeper slope.

Figures 1A – 1H. Item Characteristic Curves for Political Knowledge Items: Latinos and Whites
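To make the ICC logic concrete, here is a minimal Python sketch. It assumes a probit (normal-ogive) item response function, P(correct | θ) = Φ(λθ - τ), where λ is the item’s loading and τ its threshold; the post does not state which link function was used, and every parameter value below is hypothetical. Under this form a curve crosses 50 percent at θ = τ/λ, so a positive threshold marks a harder item, and a larger loading makes the curve steeper around that point.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal CDF, computed with math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def icc(theta: float, loading: float, threshold: float) -> float:
    """P(correct | theta) under an assumed probit ICC: Phi(loading * theta - threshold)."""
    return normal_cdf(loading * theta - threshold)


def midpoint(loading: float, threshold: float) -> float:
    """Knowledge level where the ICC crosses 0.5 (requires a positive loading)."""
    return threshold / loading


# Difficulty: equal (hypothetical) loadings, opposite-signed thresholds.
EASY = (1.0, -0.5)  # (loading, threshold)
HARD = (1.0, 0.5)
for theta in (-1.0, 0.0, 1.0):
    print(f"theta={theta:+.1f}  easy={icc(theta, *EASY):.2f}  hard={icc(theta, *HARD):.2f}")

# Discrimination: threshold 0 puts both midpoints at theta = 0, but the
# higher loading produces a much bigger rise between theta = -0.5 and +0.5.
for loading in (0.5, 2.0):
    rise = icc(0.5, loading, 0.0) - icc(-0.5, loading, 0.0)
    print(f"loading={loading}: P(correct) rises by {rise:.2f}")
```

A logistic link would change the exact probabilities but not the qualitative picture: thresholds shift curves left or right, and loadings control their slope.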

The ICCs for Latinos generally sit to the right of those for Whites, suggesting these questions are harder for Latinos to answer correctly. Consider the John Roberts item. Among Latinos, this question yields a threshold of .547, a relatively difficult item. But among Whites, the same item produces a threshold of -.311, a relatively easy one. Comparable patterns emerge for other questions. However, none of the Latino-themed questions is appreciably harder for Whites than for Latinos. Indeed, statistical tests show that the three Latino-themed items, plus the conservative-party question, operate impartially.
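Under the same assumed probit form, the John Roberts thresholds imply that a Latino respondent has a lower chance of answering correctly than a White respondent with exactly the same latent knowledge θ. Unequal performance at equal θ is what survey researchers call differential item functioning. The thresholds below come from the text; the post does not report loadings, so this sketch uses a hypothetical loading of 1.0 for both groups.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal CDF, computed with math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def p_correct(theta: float, threshold: float, loading: float = 1.0) -> float:
    """Assumed probit ICC: Phi(loading * theta - threshold).

    The default loading of 1.0 is a hypothetical placeholder; the post reports
    thresholds for this item but not loadings.
    """
    return normal_cdf(loading * theta - threshold)


# Thresholds for the John Roberts item, as reported in the text.
ROBERTS_THRESHOLDS = {"Latinos": 0.547, "Whites": -0.311}

# Compare respondents who hold the SAME latent knowledge level theta.
for theta in (-1.0, 0.0, 1.0):
    probs = {group: p_correct(theta, tau) for group, tau in ROBERTS_THRESHOLDS.items()}
    cells = "  ".join(f"{group}={p:.2f}" for group, p in probs.items())
    print(f"theta={theta:+.1f}  {cells}")
```

At θ = 0, for instance, the sketch gives roughly a 29 percent chance for the Latino respondent versus roughly 62 percent for the White respondent. These figures depend on the assumed loading and link, so treat them as illustrative only.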

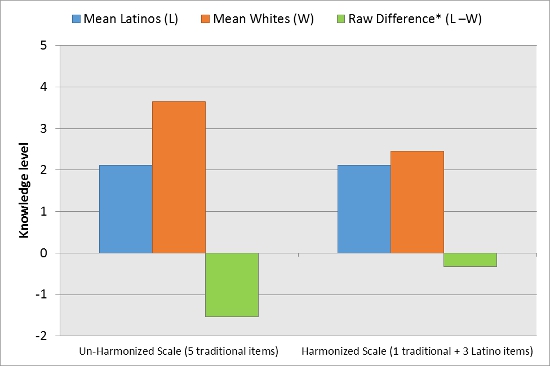

Figure 2 shows why this matters. The un-harmonized scale leaves item bias unaddressed. As an index of the five traditional items, it reflects the usual approach to measuring knowledge. On this scale, Latinos correctly answer 2.12 items on average, while Whites correctly answer 3.65 items on average, producing a 31 percent knowledge gap. The harmonized scale instead uses the four unbiased items: the three Latino-themed questions plus the conservative-party question. On this scale, Latinos correctly answer 2.11 questions on average, while Whites correctly answer 2.45 questions on average, yielding an 8 percent knowledge gap. In fact, when I statistically control for individual differences in education, age, sense of efficacy, and other factors, this smaller knowledge gap vanishes.
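The percentage gaps can be reproduced from the reported means if each gap is taken as the difference in means divided by the number of items on the scale. That formula is an inference from the arithmetic; the post does not spell it out. The Python sketch below applies it; the scale labels are descriptive only.

```python
# Reported means (number of items answered correctly), taken from the text.
SCALES = {
    # scale: (number of items, Latino mean, White mean)
    "un-harmonized (5 traditional items)": (5, 2.12, 3.65),
    "harmonized (4 unbiased items)": (4, 2.11, 2.45),
}


def gap_pct_of_range(n_items: int, latino_mean: float, white_mean: float) -> float:
    """White-minus-Latino mean difference as a percentage of the scale's maximum.

    Assumption: inferred from the arithmetic, not stated in the post.
    """
    return 100.0 * (white_mean - latino_mean) / n_items


for name, (n, latino, white) in SCALES.items():
    diff = white - latino
    pct = gap_pct_of_range(n, latino, white)
    print(f"{name}: difference = {diff:.2f} items, gap = {pct:.1f}% of the scale range")
```

This gives about 30.6 percent for the un-harmonized scale (reported as 31) and 8.5 percent for the harmonized scale, close to the reported 8 percent; the small discrepancy likely reflects rounding in the published means.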

Figure 2 – Raw Knowledge Gaps between Latinos and Whites

Note: The un-harmonized scale runs from 0 to 5 items. The harmonized scale runs from 0 to 4 items. Means and differences-in-means reflect the number of knowledge questions answered correctly. *Reliable difference at the 5% level or better (two-tailed).

Now, I mentioned earlier that political knowledge is a workhorse variable. Often freighted onto it are normatively wholesome behaviors. For example: greater knowledge promotes more enlightened views on racial issues. I tested this by analyzing opinions toward illegal immigration, measured here by asking my survey respondents to express their degree of agreement with the statement “Lawmakers in our nation’s capital should make it harder for illegal immigrants to become US citizens.” I find that when knowledge levels among Whites and Latinos are assessed by my un-harmonized scale, greater political information makes Whites less supportive of this immigration proposal, but Latinos a smidgeon more supportive—more supportive?!?! In contrast, when knowledge is measured with my harmonized scale, holding more political information makes Whites and Latinos less supportive of this harsh policy, just as prior work suggests.

Three lessons emerge from all this evidence. First, the questions researchers ask structure the answers we get about political knowledge in a diverse polity. Second, remedying such biases is important if we wish to accurately characterize how politically engaged minorities are. Finally, developing unbiased test questions involves designing and testing items that perform equivalently across heterogeneous groups. This is laborious, but it is the kind of effort needed to draw the right conclusions about how much the American people know about politics and the kind of political behavior this knowledge produces.

This article is based on the paper ‘Mind the Gap: Why Large Group Deficits in Political Knowledge Emerge—And What To Do About Them’ in Political Behavior.

Featured image credit: Marcus Ramberg (Flickr, CC-BY-NC-2.0)

Note: This article gives the views of the author, and not the position of USAPP – American Politics and Policy, nor the London School of Economics.

_________________________________

Efrén Pérez – Vanderbilt University

Efrén Pérez is Assistant Professor of Political Science and Sociology (by courtesy) at Vanderbilt University. He is also Co-Director of its Research on Individuals, Politics, and Society (RIPS) experimental lab. He conducts research on group identity, language and political choice, and implicit political cognition. He is the author of Unspoken Politics: Implicit Attitudes and Political Thinking, which is forthcoming at Cambridge University Press.