Literature reviews are a core part of academic research, loathed by some and loved by others. The LSE Impact Blog recently presented two proposals on how to deal with the issues they raise: Richard P. Phelps argues that, because of their numerous flaws, we should simply abolish them as a requirement in scholarly articles. In contrast, Arnaud Vaganay proposes that, despite their flaws, they can be saved through standardization that would make them more robust. Here, I put forward an alternative that strikes a balance between the two: let's build databases that help systematize academic research. Examples of such databases already exist in evidence-based healthcare, so why not replicate them more widely?

The idea behind my proposal to build dynamic knowledge maps in the social sciences and humanities was planted in 2014, when I attended a talk within Oxford's evidence-based healthcare programme. Jon Brassey, the event's main speaker and the founder of the TRIP database, explained his life goal: making systematic reviews and meta-analyses in healthcare research redundant! His argument was that a database containing all available research on the treatment of a symptom, migraine for instance, could summarize and display meta-effects within seconds, whereas a thorough meta-analysis would take a conventional research team weeks, if not months.

Although still imperfect, TRIP has made significant progress in realizing this vision. The most recent addition to the database is "evidence maps", which visualize what we know about effective treatments. Evidence maps compare alternative treatments based on all available studies. They indicate the effectiveness of each treatment, the "size" of the evidence underpinning the claim, and the risk of bias in the underlying studies. The example shown below is based on 943 studies (at the time of writing) on effective treatments for migraine, and indicates aggregated study size and risk of bias.

There have been heated debates about the value and relevance of academic research (proposals have centred on intensifying research on global challenges or harnessing data for policy impact), its rigour (for example, reproducibility), and the speed of knowledge production, including the "glacial pace of academic publishing". For the reasons laid out by Phelps and Vaganay, literature reviews suffer from imperfections that make them time-consuming, potentially incomplete or misleading, erratic, selective, and ultimately blurry rather than insightful. As a result, conducting literature reviews is arguably not an effective use of research time and only adds to wider inefficiencies in research.

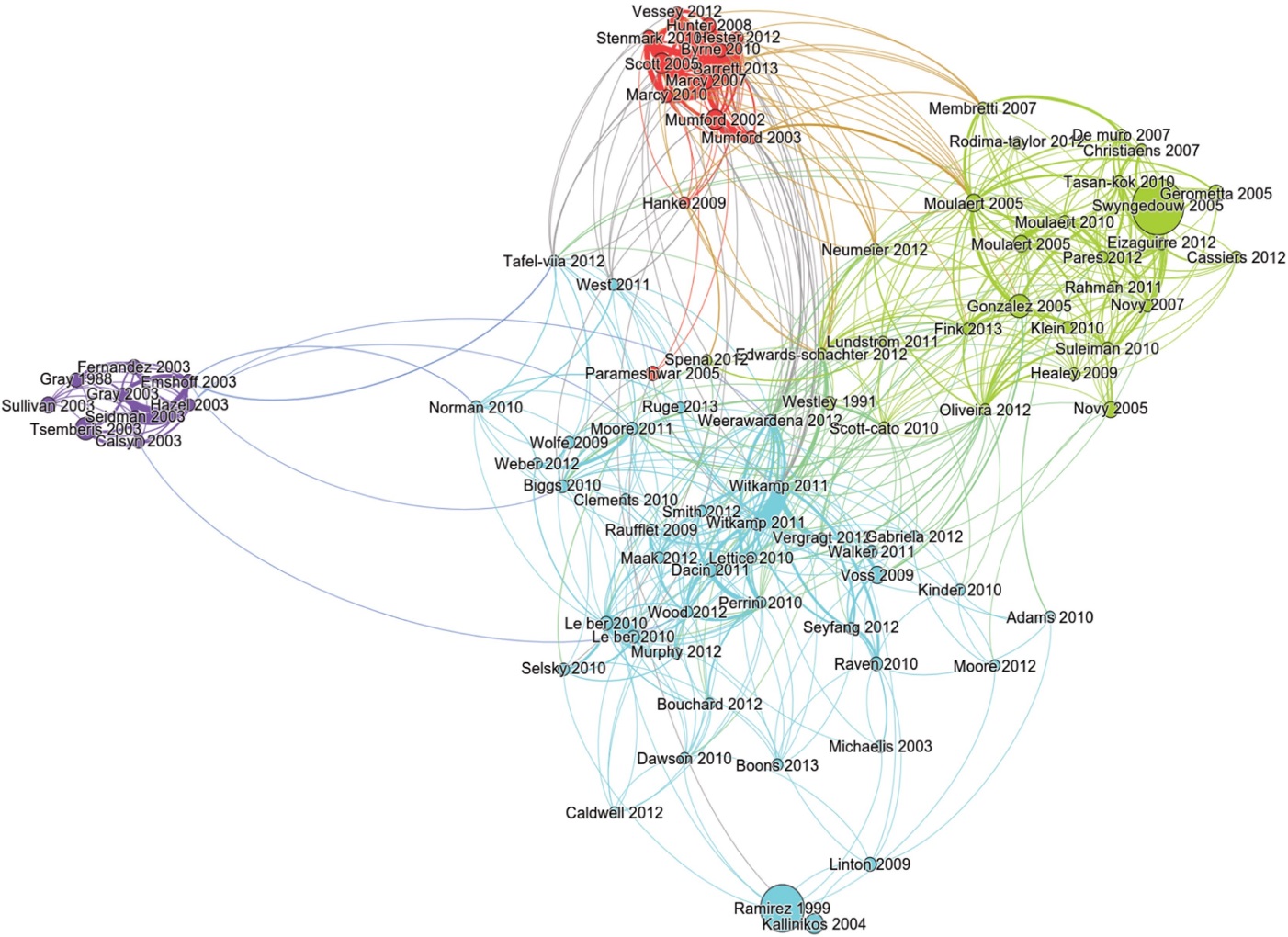

We can of course stress the positive sides of reviews: they help a research community to emerge, establish a level playing field for new studies, and serve to identify important research gaps. However, most would probably agree that they present only a partial overview of a research field. In this respect, Robert P. van der Have and Luis Rubalcaba provide a good example in Research Policy of how different literatures on the same subject (social innovation) "do not speak to each other", thereby limiting collaboration and slowing progress in understanding new phenomena.

Source: van der Have & Rubalcaba (2016).


Our ambition as researchers should be to advance knowledge, and one way to achieve this would be to let anyone easily obtain a high-level summary of the research literature, much as we have just seen the TRIP database do. I see no reason why these principles could not be applied to the social sciences and the humanities.

However, research in these fields poses unique challenges. For instance, unlike in healthcare research, the large share of qualitative research renders the notion of effect sizes partly irrelevant. Such measures would have to be complemented by features that allow "research depth" to be mapped. Two-dimensional graphs cannot capture this depth. Instead, we would need tree-like structures that let us drill down from broad research themes, such as organizational stigma or innovation ecosystems, into more specific ones.

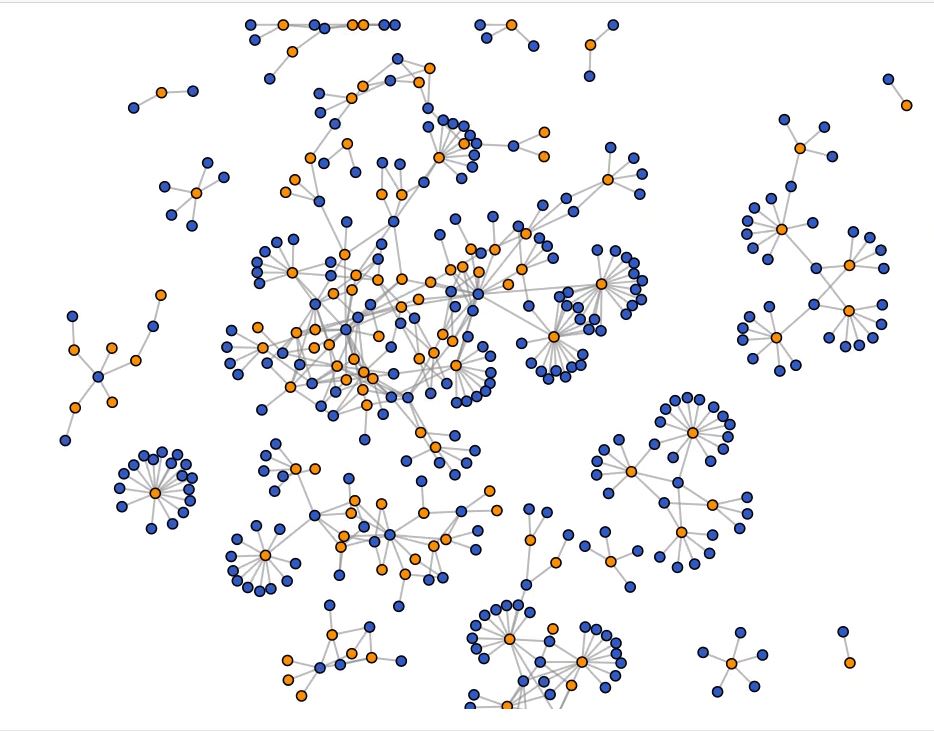

Phenomena can also be looked at from very different angles in the social sciences and humanities. There is less of an objective "truth", or rather, such truth has to emerge from combining analytic angles, methods and research traditions. This would require the analytic view displayed by the evidence map to be more adaptable and dynamic. But this is not unheard of either: dynamic visualizations are already used to display and track collaboration networks. Why not use them to systematize our knowledge?

Network of all publications by the Oxford Protein Informatics Group. By Florian Klimm, available on GitHub.


Obviously, many issues remain to be resolved. For example: Who decides how particular analytical frames are mapped? How can we produce maps across disciplinary borders, research communities or "conversations"? And what role would journals play in developing and administering the maps? There might be a role here for learned and professional societies, or for scholarly self-organization, as in the open science movement. So the vision is still far from clear-cut.

But if we choose to invest in building dynamic knowledge maps, we will fully meet Vaganay's call for greater standardization, while equally meeting Phelps's call not to waste time and resources presenting the same thing over and over, each team of authors in its own light. Instead, we will be able to invest the time and energy saved in furthering original thought and pushing the boundaries of our knowledge.

Lead image credit: TRIP database

Disclaimer: This post is opinion-based and does not reflect the views of the London School of Economics and Political Science or any of its constituent departments and divisions.

Note: This post was first published on the LSE Impact Blog on 14 May, 2019 and we are grateful for their permission to reproduce it here.