The mainstreaming of Generative AI technologies over the past year has led to a fundamental re-evaluation of many long-held academic institutions and the purpose of higher education and learning. This review brings together a selection of posts exploring this change featured on the LSE Impact Blog over the past year. Want to find even more? You can read a selection of posts on AI, Data and Society via the links and all of our annual reviews here.

Can artificial intelligence assess the quality of academic journal articles in the next REF?

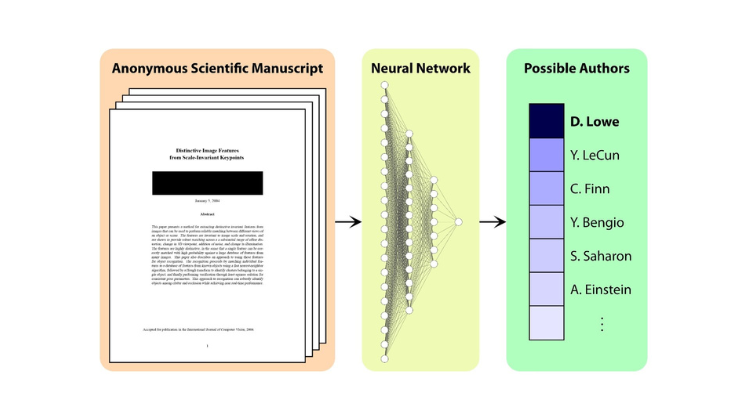

In this blog post, Mike Thelwall, Kayvan Kousha, Paul Wilson, Mahshid Abdoli, Meiko Makita, Emma Stuart and Jonathan Levitt discuss the results of a recent project for UKRI that made recommendations about whether artificial intelligence (AI) could be used as part of the Research Excellence Framework (REF). The project assessed whether AI could support or replace the decisions of REF sub-panel members in scoring journal articles. It developed an AI system to predict REF scores and discussed the results with members of sub-panels from most Units of Assessment (UoAs) in REF2021. The team was also given temporary access to provisional REF2021 scores to help develop and test this system.

Generative AI should mark the end of a failed war on student academic misconduct

Higher education institutions have long been locked in a Sisyphean struggle with students prepared to cheat on tests and assignments. Considering how generative AI reveals the limitations of current assessment regimes, Utkarsh Leo argues educators and employers should consider how an obsessive focus on grades has distorted teaching and learning in universities.

The value of generative AI is often dismissed after it fails to produce coherent academic responses to single prompts. Mark Carrigan argues that such careless use of generative AI overlooks the more interactive ways in which it can be used to supplement academic work.

Who is the better forecaster: humans or generative AI?

The ability to forecast future events with a degree of accuracy is central to many professional occupations. Drawing on a prediction competition between human and AI forecasters, Philipp Schoenegger and Peter S. Park assess their relative accuracy and draw out implications for future AI-society relations.

Can generative AI add anything to academic peer review?

Generative AI applications promise efficiency and could benefit the peer review process. But given their shortcomings and our limited knowledge of their inner workings, Mohammad Hosseini and Serge P.J.M. Horbach argue they should be used neither independently nor indiscriminately across all settings. Focusing on recent developments, they suggest that grant peer review is among the contexts in which generative AI should be used very carefully, if at all.

A social science agenda for studying the impacts of algorithmic decision-making

AI and algorithmic decision-making tools already influence many aspects of our lives and are likely to become increasingly embedded within businesses and governments. Drawing on recent research and examples from across the social sciences, Frederic Gerdon and Frauke Kreuter outline where and how social science is vital to the ethical use of algorithmic decision-making systems.

Whilst the ability of generative AI to produce text in English has been widely covered, the implications of its ability to translate into English, and in doing so act as a cultural broker, have received less attention. Considering use contexts in education and research, Dimitrinka Atanasova suggests higher education policymakers should adopt a cultural lens when developing policy responses to generative AI.

The gap between AI practitioners and ethics is widening – it doesn’t need to be this way

The application of AI technologies to social issues, and the need for new regulatory frameworks to govern it, are major global concerns. Drawing on a recent survey of practitioner attitudes towards regulation, Marie Oldfield discusses the challenges of implementing ethical standards at the outset of model design and how a new Institute for Science and Technology training and accreditation scheme may help standardise practice and address these issues.

What the deep history of deepfakes tells us about trust in images

The ability to manipulate and generate images with new technologies presents challenges to traditional media reporting and scholarly communication. However, as Joshua Habgood-Coote discusses, the history of fake images shows that rather than heralding a mass breakdown in trust, technological innovations have fed into ongoing social problems around the production of knowledge.

The content generated on this blog is for information purposes only. This Article gives the views and opinions of the authors and does not reflect the views and opinions of the Impact of Social Science blog (the blog), nor of the London School of Economics and Political Science.

Image credit: LSE Impact Blog via Canva.