LSE’s Emma Goodman discusses the risks of anti-vaccination content on social media, and how the tech companies are addressing it. This article is a response to a workshop organised earlier this month by the Royal Society for Public Health (RSPH) on ‘Vaccines, information and social media’.

The problem

It’s not the only health issue to be subject to misinformation online, but the prevalence of anti-vaccination content on social media is causing great concern among public health officials, politicians and others. The UK government’s White Paper on online harms mentions vaccination misinformation under “Threats to our way of life,” specifying that “the spread of inaccurate anti-vaccination messaging online poses a risk to public health.”

The public health consequences of not vaccinating are evident. Many countries that had been close to eliminating measles have seen a significant increase in cases, mainly among growing unvaccinated populations. According to the World Health Organisation (WHO), vaccination currently prevents 2-3 million deaths a year, and a further 1.5 million could be avoided if global vaccination coverage improved. But despite efforts by public health bodies to increase coverage against preventable diseases such as measles, vaccination rates have been falling.

The reasons are complex and not entirely clear. The WHO has ranked ‘vaccine hesitancy’ as one of the biggest threats to global health. Hesitancy isn’t always the reason people don’t vaccinate themselves or their children (often it’s down to convenience or other pragmatic considerations rather than ideology), but there does seem to be a growing problem with trust in vaccination. The Wellcome Global Monitor 2018 found that 79% of people worldwide agree that vaccines are safe, but this drops to 59% in Western Europe and 40% in Eastern Europe.

The role of social media

Although the evidence so far links the rise in online misinformation to the fall in vaccination levels only by correlation, not causation, many worry that the two are deeply entwined.

The UK-focused 2019 RSPH report ‘Moving the Needle’ found that 41% of parents surveyed had been exposed to negative messages about vaccination on social media, rising to 50% of parents of under-5s (as a parent of young children myself, I am honestly surprised these figures aren’t higher). The risk, the report argues, is that repeated information is often mistaken for accurate information. It cites a 2015 study showing that even participants armed with prior knowledge could succumb to the ‘illusory truth’ effect.

Even if only a small percentage of the population is opposed to vaccination, those who are tend to be very passionate, and social media has given them a loud voice. In the ‘attention economy,’ social media tends to favour the emotive and sensational over the factual, the negative over the positive, the outrageous over the mundane. Recommendation engines are expert at sending users down paths that expose them to more of the same views, which are likely to become increasingly extreme.

Social media platforms can also suffer from the kind of harmful ‘data void’ described by Microsoft researchers in 2018, whereby the lack of a steady stream of quality, authoritative information on a topic leaves space for manipulation by those promoting misinformation.

So what are social media companies doing about anti-vaccination material on their platforms?

Tech companies are under pressure from governments, civil society and the public, but there is no obvious path forward. They can’t just ignore this content, but removing it would limit their users’ freedom of expression and put the companies themselves in an ‘arbiter of truth’ role, which neither they nor most others believe they should take on. The main players are therefore all trying slightly different approaches:

Facebook

As it does for other misinformation, Facebook works with third-party fact-checkers who analyse flagged stories. If a story is found to be misleading, the platform reduces the attention it receives by lowering its ranking in Facebook’s News Feed and search. Distribution usually falls by around 80% after a story is flagged as misleading, the company says.

When page or group admins spread vaccine hoaxes that have been publicly debunked by authoritative organisations such as WHO, the company goes a step further, reducing the distribution not just of the post but also of the page or group that posted it. Such pages and groups are not included in recommendations or in predictions when a user is typing in Search.

Ads with vaccine misinformation are rejected, and you can no longer target people based on options like “vaccine controversies.”

In March, Facebook said that vaccine misinformation would not be shown or recommended in Instagram’s Explore, hashtag and search pages. In May, Instagram said that it would hide hashtags that include a ‘high percentage’ of inaccurate information relating to vaccines (that is, information at odds with facts established by organisations such as WHO).

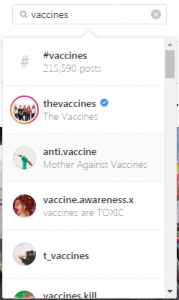

However, as this screenshot shows, although the only hashtag to come up prominently in a search for ‘vaccines’ is #vaccines, which has a mix of pro- and anti-vaccination content, several of the top accounts that immediately appear have a pretty clear anti-vaccination agenda.

Twitter

Twitter announced in May 2019 that it had launched a new search tool that directs users to credible public health resources when they search for certain vaccine-related keywords, such as the Centers for Disease Control and Prevention (CDC), an agency of the US Department of Health and Human Services. In the UK, searching for ‘vaccinations’ brings up a tweet from the NHS as the first result.

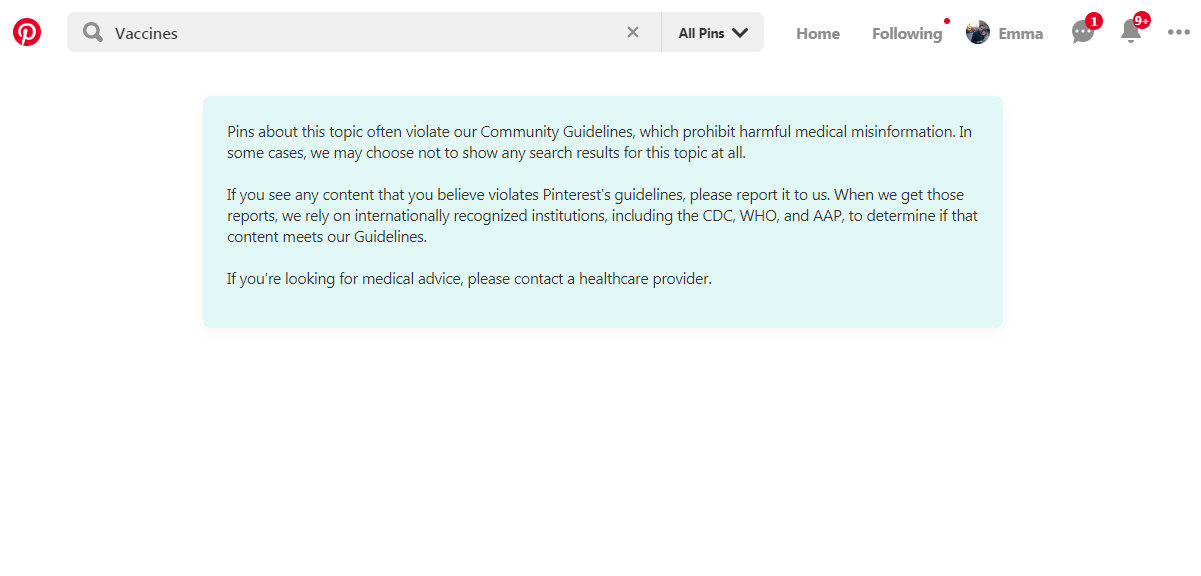

Pinterest

If you search for ‘vaccines’ on Pinterest you get no results: the company has simply decided to shut off these discussions entirely.

It is also impossible to ‘pin’ pages from certain URLs that are known to carry problematic content such as health misinformation, Pinterest told the Guardian.

YouTube

YouTube announced in February that it was ‘demonetising’ anti-vaccination content, in other words removing the ability to advertise alongside it. According to BuzzFeed, the move followed complaints from several large advertisers who did not want their ads appearing alongside videos promoting anti-vaccine views. It is similar to the approach the company takes to hateful or violent content, and one that a Guardian editorial described as a ‘halfway house between complete freedom and extinction.’

It also provides information panels on videos detected as having an anti-vaccine message, linking to a Wikipedia page on vaccine hesitancy. The company’s announcement in January that it was working to reduce recommendations of ‘borderline’ content could also apply to vaccine-related misinformation.

Will such approaches be effective?

The acknowledgement that free speech is not the same as free reach, and that platforms bear responsibility for the recommendations they make, is significant, especially given that 70% of viewing on YouTube comes through recommendations. Providing easy access to reliable, evidence-based information is also a welcome step forward.

However, there are limits to the benefits that these ad hoc moves can deliver.

- Lack of information isn’t the problem. There is a huge amount of evidence for the benefits of immunisation, but presenting this evidence to those who feel otherwise won’t necessarily change their minds. A social media redirect to an authoritative source is unlikely to be enough to have an impact on those with deeply entrenched views.

- It is also important to remember that social media are not the only channel through which anti-vaccine misinformation spreads: spurious, sensationalist claims about vaccines also appear in traditional media.

- There are indications that anti-vaccination views are a sign of deeper mistrust in society, so this isn’t going to be an easy fix. For example, a study published earlier this year found a highly significant positive association between the percentage of people in a country who vote for populist parties and the percentage who believe that vaccines are unimportant and ineffective. According to its author, Jonathan Kennedy, “populism and vaccine skepticism are driven by similar feelings: distrust and animosity toward elites and experts.”

What can be done?

- As UN special rapporteur on freedom of expression David Kaye argued in a letter to Facebook CEO Mark Zuckerberg in May, the “ad-hoc development” of policies addressing issues such as vaccine hesitancy “may be susceptible to criticisms of bias and arbitrariness.” Kaye advises that “aligning these measures with human rights standards, however, can place them on a more principled footing.”

- It was suggested at the RSPH workshop that campaigns against vaccine misinformation could be more effective if they focused on the dangers of the disease in question rather than the safety of vaccines, given that people often seem to agree to vaccinate when confronted with an outbreak. Involving the target populations in building campaigns was also thought to be very helpful.

- Improving media and digital literacy so that the public is more easily able to identify reliable sources of information is always beneficial, but requires sustained spending and a significant, coordinated effort.

As we argued in the Truth, Trust and Technology Commission (T3) report, any attempt to truly tackle the information crisis and its effects should take a holistic, systems-based approach, rather than focusing simply on taking down or hiding problematic content after it appears. The T3 report identified confusion, cynicism, fragmentation, irresponsibility of tech companies, and apathy as the five ‘evils’ of the information crisis, and any response should take into account how these are perpetuated by today’s information system and the business models on which it thrives.

This post represents the views of the author, and not the position of the LSE Media Policy Project, nor of the London School of Economics and Political Science.