As part of the ongoing revision of the Children’s Online Privacy Protection Rule, known as the COPPA Rule, the U.S. Federal Trade Commission (FTC) opened a public consultation to gather stakeholder input on the future development of this fundamental piece of regulation. LSE doctoral researcher Eleonora Maria Mazzoli and Professor Sonia Livingstone responded to the consultation, providing evidence on the limitations of the current COPPA Rule’s implementation and on the need for further scrutiny of data collection and data processing practices linked to children. The full response is available here and will soon be published at Regulations.gov.

The FTC is conducting its ten-year review of the Rule earlier than scheduled because of public pressure and concerns about the Rule’s implementation. The review is also needed because of rapid technological developments affecting the services children use every day. One month after the FTC’s public consultation on COPPA closed, the debate among industry stakeholders, civil society and policy makers is still heated, as everyone waits with trepidation for the FTC’s decision.

It is important to recognise that although COPPA is part of the U.S. regulatory system, it has become the de facto global standard for children’s access to many internet sites and social media services around the world. This is why, for instance, the minimum age on social media apps like Facebook, Instagram and TikTok is 13. COPPA’s framework, enforcement actions and future developments therefore matter globally.

In a digital age in which many everyday actions generate data – whether given by digital actors, observable from digital traces, or inferred by others, human or algorithmic – addressing the relation between privacy and data online is urgent, especially when children’s rights are at stake. On the one hand, digital technologies and online services are increasingly vital for children to exercise their civil rights and freedoms, including much-needed access to education, socialising, participation, wellbeing and entertainment. On the other hand, as technologies become more sophisticated, networked and commercially viable, children’s privacy is threatened by new forms of data collection and surveillance. This is why our research examines children’s understanding of the digital environment and their digital skills and literacies, with the aim of supporting ways in which their full range of rights can be considered in the design of services, regulation and policy.

Existing legal and regulatory frameworks like the COPPA Rule have laid the groundwork for greater protection of children online. However, full implementation and compliance are still far from achieved. Enforcement challenges are highlighted by recent, widespread violations of children’s privacy, including by companies such as Alphabet’s YouTube and the social networking app TikTok (formerly known as Musical.ly), which are used globally by an increasing number of minors. While the FTC’s interventions have brought changes to online services directed at children and apparent improvements in their data collection strategies, stakeholders are still not satisfied. Developers are angrily criticising the FTC for its sanctions, while consumer and civil society groups are calling for stronger measures, and both are countered by fierce industry lobbying.

We urge a holistic approach to children’s ‘best interests’ to balance protection and participation online so that efforts to protect children do not have the consequence, intended or otherwise, of restricting children’s opportunities. How can this be achieved? Based on the evidence we provided in our consultation reply, we offer these key recommendations:

- Alongside legal clarification of the scope and definition of the services covered by the COPPA Rule, the FTC should undertake an investigation to better understand children’s data privacy and to examine business practices regarding digital content, advertising structures and data collection, including the use of recently developed metrics (such as the “brand safety” targeting applications now used by leading marketers and key platforms like YouTube and Facebook). Such an investigation is widely supported by numerous advocacy organisations, including the Center for Digital Democracy and the Campaign for a Commercial-Free Childhood.

- Any data collection and data processing linked to children, whether deliberate or otherwise, must be in the best interests of the child. The child’s best interests should be prioritised over commercial interests, and care should be taken in framing the regulation to ensure that compliance with the law does not undermine children’s best interests or come at the cost of children’s privacy and data protection.

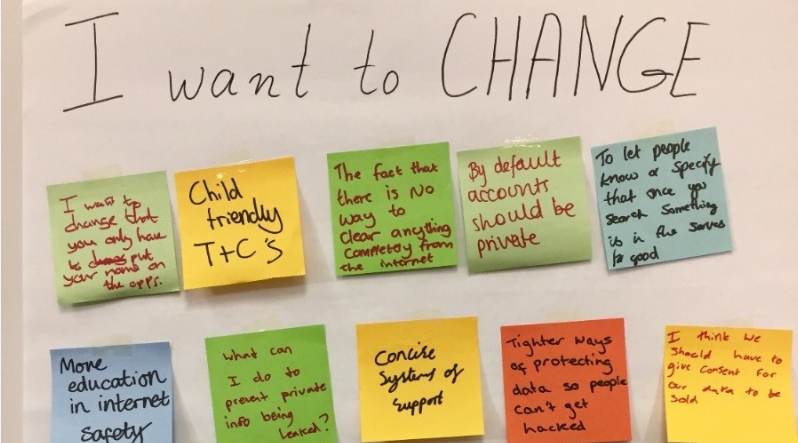

- A forward-looking regulatory framework should include privacy-by-design and privacy-by-default requirements for child-friendly, age-appropriate mechanisms for privacy protection, complaint and remedy. This intervention should be complemented by greater transparency about data collection practices, easier navigation of privacy controls, and granular control over privacy settings, to match (and counter) the industry’s elaborate data-harvesting techniques and to create better industry standards that genuinely empower users.

Overall, all of children’s civil rights and freedoms, including their privacy rights, are fundamental, and the COPPA Rule should ensure that young people can fully exercise their rights while receiving adequate data and privacy protection. Although greater data literacy is much needed to empower children, children cannot bear sole responsibility for navigating the complex privacy environment. Nor should the responsibility for their protection fall too heavily on parents. Responsibility should be shared among all relevant stakeholders, including the commercial companies that provide platform services used by children. We look to the relevant regulators to make this happen. While we await the FTC’s next steps, it is noteworthy that in the UK, the Information Commissioner’s Office has just published its Age-Appropriate Design Code, which represents an innovative advance in children’s data protection.

This article represents the views of the authors, and not the position of the Media@LSE blog, nor of the London School of Economics and Political Science.

Featured image: Photo by Igor Starkov on Unsplash