At present, most discussions surrounding the elimination of objectionable content from end-to-end encrypted (E2EE) services approach it as a debate about breaking E2EE technology to allow enforcement agencies access to secure communications. In this post, Akshata Singh and Nitish Chandan of the CyberPeace Foundation in India, who recently published a report assessing E2EE platforms as a new mode of Child Sexual Abuse Material (CSAM) distribution, argue that there are other levers that can be turned on to regulate the transmission of CSAM on E2EE platforms without infringing user privacy.

A report on online child sexual abuse, released by the Rajya Sabha (upper house) of the Indian Parliament in February 2020, makes exhaustive technological and legislative recommendations, including mandatory reporting of CSAM by intermediaries to Indian authorities and legal backdoors to E2EE.

While the Information Technology Act 2000 (IT Act) in India bestows sweeping powers on authorities for tracking and intercepting data, its provisions do not expressly authorise breaking E2EE. We argue that a legal backdoor to encryption, coupled with sweeping powers of data interception, would have disastrous effects.

First, on a global level, without commensurate data protection, backdoors would enable arbitrary data interception. This would slow the beleaguered global economy further by negating the operational and economic synergies that seamless, confidential communication over the internet makes possible. Second, governments worldwide have demonstrated a tendency to use policy concerns to coerce, or collude with, intermediaries to needlessly intercept and censor content. Russia’s demand that Telegram store encryption keys and give access to the Russian Surveillance System, and China’s encryption licensing regime, coupled with the Chinese Government’s access to deleted messages on a private messaging service, are harbingers of what could follow if encryption backdoors were allowed in other countries.

As an alternative, we suggest two levers that can achieve tighter regulation without any infringement on user privacy.

The First Lever: Technology

Governments in different parts of the world look to censor and tackle different types of online content, but a global consensus exists on checking the distribution of CSAM. Several technology measures employed to check online content on the surface web have not been adapted to E2EE platforms. For instance, automated filters which work fairly well on the surface web are either not deployed or not deployable on E2EE services. In-app reporting, although a major feature of E2EE platforms, is virtually inconsequential, due to a prolonged response time. Despite the industry admitting to the need for expedited action on CSAM over other types of content (as detailed on p. 37 of this report), no distinction exists between reporting CSAM and other content. These factors make stopping CSAM circulation and preventing re-victimisation difficult on E2EE platforms. Consequently, the distribution and consumption of CSAM on these platforms is particularly high.

The actual problem with stopping the spread of CSAM is inaction on the part of E2EE platforms and ineffective reporting mechanisms. A report that used primary data from publicly accessible adult pornography groups on E2EE platforms found continual CSAM proliferation in an observation period of just two weeks. Because much CSAM on E2EE platforms circulates in closed groups and private communication channels, this data does not remotely reflect the true volume of demand or the extent of CSAM proliferation on these platforms. Accordingly, it is crucial to institutionalise effective end-user reporting mechanisms that enable intermediaries to take expedited, prioritised action against CSAM.

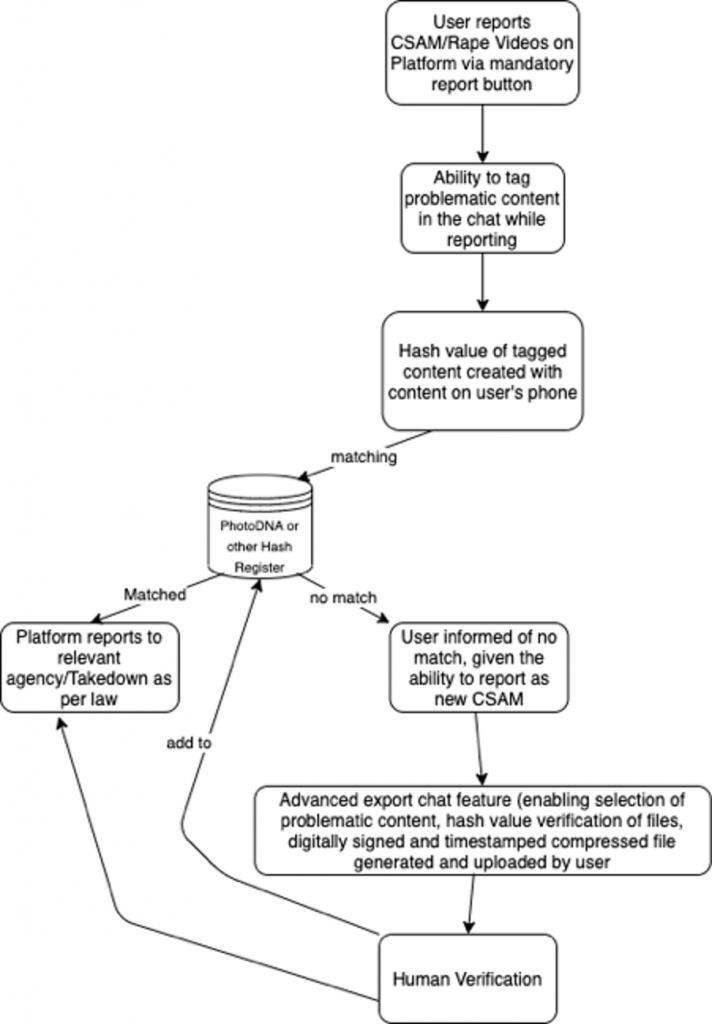

A possible technology design intervention could bridge this gap, as Princeton’s Jonathan Mayer argued in a 2019 paper. This mechanism relies on users to report CSAM in two steps:

(i) At first, for a user to tag content as CSAM, the E2EE platform must provide a ‘Report CSAM’ option. This option enables user reporting on E2EE platforms by sharing the hash value (a numeric value assigned to the tagged content) generated from the unencrypted content downloaded on the user’s device. The E2EE platform will check this hash value against an existing CSAM database (similar to Microsoft PhotoDNA). If the check returns a match, the incident will be reported to authorities.

(ii) At the next stage, if there is no match within the database, the user will be notified and given the option of reporting ‘new CSAM’. On selecting this option, the user can access an advanced chat export feature, enabling export of the whole or parts of the chat relating to CSAM transmission. This exported file can be affixed with another hash value or supplemented by digital authentication modes to ensure data integrity. The submitted multimedia files will undergo both automated and human review. If CSAM is found, it will be reported to authorities, and its hash value will be stored in the CSAM hash register. See the diagram depicting the reporting process.
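The two steps above can be sketched roughly in code. This is only an illustration under loud assumptions, not the design from the paper or from any platform: the `Platform` class, its `hash_register` and `review_queue`, the shared `key`, and all function names are hypothetical. SHA-256 stands in for a perceptual hash such as PhotoDNA, so it would only match exact copies, and an HMAC tag stands in for the ‘digital authentication modes’ mentioned in step (ii). For simplicity, the exported file is treated as the raw bytes of the reported item.

```python
import hashlib
import hmac
from dataclasses import dataclass, field


def content_hash(data: bytes) -> str:
    """Stand-in for a perceptual hash; SHA-256 only matches exact copies."""
    return hashlib.sha256(data).hexdigest()


def sign_export(export: bytes, key: bytes) -> str:
    """Client side: compute an integrity tag for the exported file."""
    return hmac.new(key, export, hashlib.sha256).hexdigest()


@dataclass
class Platform:
    # Hypothetical register of hashes of already-verified material.
    hash_register: set = field(default_factory=set)
    # Hypothetical queue of exports awaiting automated and human review.
    review_queue: list = field(default_factory=list)

    def report_hash(self, digest: str) -> str:
        """Step (i): the client shares only the hash of the tagged content."""
        if digest in self.hash_register:
            return "match: report to authorities"
        return "no match: user may report as new"

    def report_new(self, export: bytes, tag: str, key: bytes) -> bool:
        """Step (ii): queue an export only if its integrity tag verifies."""
        expected = hmac.new(key, export, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, tag):
            return False  # export was altered or the tag is wrong
        self.review_queue.append(export)
        return True

    def confirm_after_review(self, export: bytes) -> None:
        """Once review confirms the material, store its hash in the register."""
        self.hash_register.add(content_hash(export))


if __name__ == "__main__":
    platform = Platform()
    key = b"demo-shared-key"          # assumption: key agreed out of band
    item = b"placeholder bytes"       # harmless stand-in for reported content
    digest = content_hash(item)

    print(platform.report_hash(digest))
    print(platform.report_new(item, sign_export(item, key), key))
    platform.confirm_after_review(item)
    print(platform.report_hash(digest))
```

In this sketch the platform never sees message content in step (i), only a hash, and plaintext leaves the device in step (ii) only when the user chooses to export it. A real deployment would need a perceptual hash, a proper key or signature scheme, and safeguards around who may write to the register.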

The proposed mechanism carries potential risks as well. Specifically, the collection and aggregation of tagged CSAM is open to abuse: hash values of non-CSAM content could be added to the CSAM hash register, which would then identify non-CSAM or neutral content as CSAM. Accordingly, it is essential that the proposed reporting mechanism is implemented transparently, with minimal government control over the CSAM database. Despite these risks, major industry coalitions and international organisations that work against CSAM have identified reporting as a precursor to any effective strategy to combat it (UNICEF Guidelines for Industry on Child Online Protection, and WeProtect Voluntary Principles).

The Second Lever: Policy Change

Policy change can be used to make intermediaries accountable for blatant inaction. In India, the government is set to introduce the Information Technology (Intermediaries Guidelines (Amendment)) Rules, which propose blanket provisions making intermediaries directly liable for not aiding law enforcement agencies in investigations. The Rajya Sabha committee has proposed legislative amendments along similar lines. These winds of change are not unprompted: in 2019, India gained the shameful distinction of recording the maximum number of CSAM reports in the world.

Another change worth considering is dilution of the safe-harbour provision. The safe-harbour provision was introduced by way of section 79 of the IT Act to protect intermediaries from legal liability for any unlawful content posted or disseminated through their platform, so long as intermediaries employ due diligence measures and do not play an active role in the dissemination of content. In 2015, the Supreme Court of India, in Shreya Singhal v Union of India, diluted the due diligence requirements for intermediaries by exculpating them from liability for unlawful content on their platform, except in cases where the government or a court orders the intermediary to take down unlawful content. While the judgment did not explicitly whittle down the due diligence requirement under S.79(2)(c), the lack of culpability, except when the intermediary refuses to comply with a court or government order, made intermediaries complacent about their due diligence obligations. Intensifying due diligence requirements and enforcing them through penalties can fill this gap.

Global alliances are the key

E2EE platforms seem to participate in global alliances against child abuse that focus on the importance of reporting. However, the primary findings from research by CyberPeace Foundation show no concrete measures by E2EE platforms to ensure a robust and effective reporting mechanism that acts on child sexual abuse as a priority. It is precisely for this reason that the pressure to mandate backdoors is mounting globally, most recently evidenced by the call for backdoors by India and Japan, along with the Five Eyes (FVEY) countries.

This changing global political climate gives the tech industry an incentive to implement technological solutions such as the CSAM reporting mechanism, in order to dissuade other countries from passing legislation such as the EARN IT Act in the US, which disables the safe-harbour privilege of intermediaries for civil and criminal claims relating to CSAM.

Hence, we argue that the solution lies in turning the levers of policy and technology to curb CSAM on E2EE platforms while safeguarding user privacy. Issue-based solutions, such as the CSAM reporting mechanism, are arguably resource-heavy, but industry players are willing to expedite action against CSAM owing to the threat it poses to the moral and social fibre of any society. If we implement an effective reporting mechanism against CSAM on E2EE platforms and intensify intermediary liability to help avert backdoors, then, simply put, we do not need to burn the house down to roast a pig.

This article gives the views of the authors, and does not represent the position of the Media@LSE blog, nor of the London School of Economics and Political Science.