As the way the media operates becomes more and more complicated and harder to study, LSE PhD researcher Hossein Derakhshan explains why research techniques such as breaching experiments can be useful for studying the effect of platforms and algorithms on people’s everyday lives.

Studying media was much easier before platforms and their artificial intelligence-powered algorithms dominated our everyday lives. The mass media era meant millions of people woke up with the same radio shows, read the same newspapers on the tube, watched the same sitcoms, evening news and late-night talk shows. Magazines, books, and music records enjoyed more variety, but each was still consumed by massive numbers of people at any given time, and it was easy to ask people what they made of a television or radio network, a newspaper or music label, or of a specific show or article or record.

Platforms are changing all this. There are now as many Twitter timelines, Instagram feeds, or YouTube playlists as internet users in any given society. What people experience in every one of these endless streams of words, sounds, and images is different, not only in their selection or order, but thanks to generative AI technologies, even in their very composition. This is already happening with advertising, where the same product or service is represented in numerous distinct ways to different users. What can be called mass personalization is emerging as the dominant mode of consumption of media products and beyond.

If media have traditionally been studied in three aspects – production, media text (or content), and reception – algorithms have now become the de facto equivalent of the media text in the age of mass personalization. It is therefore no wonder that many scholars have focused on algorithms in the past decade. In this article, however, I intend to highlight the problems of studying platforms through algorithms and propose a conceptual model to study what platforms do rather than what they are.

Against the algorithm-centred approach

There are at least three features of algorithms that complicate researching them:

1) Hyper-modulation: Unlike older objects of media studies such as films, news, songs, photographs, novels, TV shows, etc., algorithms do not have a fixed textuality. Thus, most empirical attempts at studying them face validity issues. For example, members of a film’s audience may interpret it differently, but they all view the same film, even if at different times and in different places. An Instagram feed, by contrast, is almost unique to each user at a specific time and place. No user or researcher can experience the same algorithmic flow twice, and individual experiences of algorithms are by and large singular.

2) Invisibility: Algorithms are invisible to platform users. Like electricity and running water, platforms and their embedded algorithms have grown so crucial in every aspect of our everyday lives that we hardly notice them. It is not an exaggeration to view them as infrastructures of urban sociality, because like most infrastructures, they are invisible in everyday life until they break down.

Such invisibility makes algorithms very difficult objects of empirical research. People may be able to speak of their experiences with their toaster, television set, or hairdryer, but not of their underlying enabler, electricity. Even when it is disrupted, they may still talk about their frustration at being unable to use many of their electric devices rather than about the electricity itself.

3) Inextricability: Algorithms are now tightly interwoven with one another, with platforms’ core codes, and with the user data; they cannot be experienced on their own. With such inextricability, it will not be easy, for instance, for people to separate their experience of their feed from their overall perception of an app. As an analogy: people watch films as a whole; they do not experience directing, script, cinematography, set and costume design, and acting separately. In a similar way, users experience platforms as a whole, not as the sum of isolated aspects of encryption, content servers, personalized feeds, graphical interface, etc.

A new conceptual model for platforms

The challenge at hand is to acknowledge how different platforms are from traditional media. Their media text (or content) is inaccessible to researchers, and attempts at treating algorithms as if they were the text of platforms have not helped. Therefore, I offer a conceptual framework that focuses on what platforms do instead of what they are.

Platform figuration

Seeing platforms as social figurations will help us identify and understand the interdependent, intertwined, and continuous processes embedded in them.

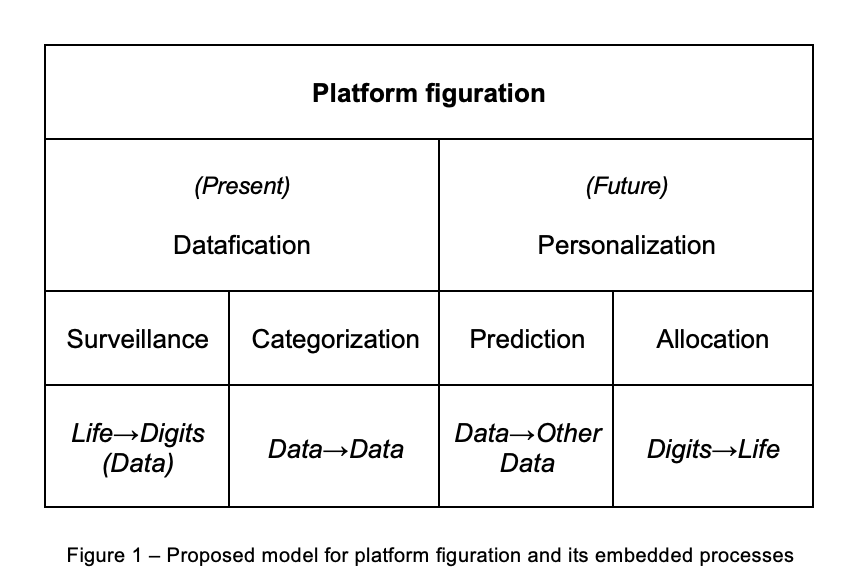

I propose that every platform, as a social figuration, has two processes at its core: datafication and personalization. While the former has been extensively discussed in the past few years, the latter has rarely been seen as an essential core process within all platforms.

Datafication consists of surveillance and categorization, and it is mainly oriented to the present time. Surveillance links human life to digits, resulting in an ever-modulating relation which we call data, but which can also be termed life-digits so as to reveal its relationality. Categorization links these life-digits (data) to each other in the present time.

For example, when you open Instagram on your phone and start scrolling down your feed, hundreds of qualitative and material aspects of your life such as your name, gender, age, location, emotions, mood, interests, beliefs, etc. are measured and quantified through your profile information and your behaviour (such as speed of scrolling, pausing, liking, zooming in, etc.).

When these data relations (or data points) such as ‘47 years of age’, ‘received dozens of “likes” from friends and even strangers’, ‘25th of the month’, ‘visited several clothing shops last week’, ‘paused on a post about a conference next month’, ‘has friends who live in Italy’, ‘North London location’, ‘checked profiles of those who liked the conference’, ‘3:30pm’, ‘connecting via iPhone’ are linked to each other, you have thousands of categories: ‘men between 30-45’, ‘happy person at 3:30pm’, ‘conference-going man interested in shirts’, ‘iPhone user on 25th of the month’, etc.

There are infinite numbers of categories which are endlessly constructed from these life-digits or data relations by your phone’s OS and the apps you use. Most of them may have no value to anyone now within the process of datafication, but every single one of them will be useful when it comes to the other core platform process of personalization.
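To make the idea of categorization more tangible, here is a minimal Python sketch. It is only an illustration of the concept, not a description of how any real platform works: the `life_digits` dictionary, the `categorize` function and the `max_size` parameter are all hypothetical names invented for this example. It treats every combination of data points as a potential category.

```python
from itertools import combinations

# Hypothetical life-digits for one user at one moment (illustrative values only).
life_digits = {
    "age": 47,
    "location": "North London",
    "time": "3:30pm",
    "device": "iPhone",
}


def categorize(digits, max_size=2):
    """Link data points to each other.

    Every combination of up to `max_size` data points is treated as one category.
    """
    items = sorted(digits.items())  # sorted by key, for a stable order
    categories = []
    for size in range(1, max_size + 1):
        for combo in combinations(items, size):
            categories.append(frozenset(combo))
    return categories


categories = categorize(life_digits)
print(len(categories))  # 4 single points + 6 pairs = 10
```

Even in this toy version, four data points yield ten categories when linked at most in pairs, and fifteen when any number of points may be combined. With hundreds of data points the number of possible categories grows far beyond anything a person could inspect.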

Personalization, a less discussed core process of platforms that is oriented to the near future, consists of two sub-processes of prediction and allocation. Prediction is nothing but a re-categorization toward the future; it is simply a speculative reconfiguration of the links between life-digits, or data relations based on the existing categories. Allocation, on the other hand, is a future-oriented reversal of surveillance, a process in which predictions (which are themselves relations between data relations) are disentangled down toward life qualities and materialities.

To follow up on the earlier example, predictions are categories such as these: ‘47-year-old men who are going to a conference within the next few weeks’, ‘happy people who will shop in the last week of the month’, ‘people who will be traveling soon’, ‘male North Londoners who spend around 20 minutes shopping between 3-5pm on the day they get their salary’, ‘men who will most likely shop from Italian brands’, etc. The last sub-process is allocation, and it basically entails applying these predictions to the everyday life of individual users. This is when you see a gorgeous men’s shirt from an Italian brand on sale in your newsfeed and you cannot resist buying it immediately: a real, material shirt that will be delivered to your door in a couple of days and that you will take with you to the conference.
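The two sub-processes of personalization can be sketched in the same spirit. The following Python example is a deliberately simplified illustration under stated assumptions: `PREDICTION_RULES`, `CATALOGUE`, `predict` and `allocate` are hypothetical names, and the hand-written rules stand in for whatever statistical models a real platform might use. Prediction re-links existing categories toward the near future; allocation turns a prediction back into something concrete in a user's feed.

```python
# Hypothetical categories that datafication has produced for one user.
user_categories = {
    "man",
    "North London",
    "paused on conference post",
    "has friends in Italy",
    "visited clothing shops",
}

# Prediction: a speculative re-linking of existing categories toward the future.
# Each illustrative rule maps a set of required categories to a predicted one.
PREDICTION_RULES = [
    ({"paused on conference post"}, "will attend a conference soon"),
    ({"visited clothing shops", "has friends in Italy"},
     "likely to buy from Italian brands"),
    ({"booked flights"}, "will be travelling soon"),
]

# Allocation: predictions are brought back down to concrete items in a feed.
CATALOGUE = {
    "likely to buy from Italian brands": "Italian men's shirt, on sale",
    "will be travelling soon": "Discounted travel insurance",
}


def predict(categories):
    """Return every predicted category whose required categories are all present."""
    return [label for required, label in PREDICTION_RULES if required <= categories]


def allocate(predictions):
    """Return the feed items attached to the given predictions, if any."""
    return [CATALOGUE[p] for p in predictions if p in CATALOGUE]


predictions = predict(user_categories)
feed_items = allocate(predictions)
print(predictions)
print(feed_items)
```

In this sketch the user ends up with two predictions but only one allocated item, the shirt, because the hypothetical catalogue has nothing attached to conference attendance. The point is only to show the direction of travel: from categories, to speculative categories about the future, and back to a material object in someone's life.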

This description of the model may be seen as a cycle which starts from abstracting life into digits and ends with turning digits back into life. However, as in any figuration, datafication and personalization and their sub-processes all happen almost at the same time. This understanding of platforms as interdependent and continuous processes opens up possibilities for different research methods.

Renewing an eclipsed methodology: ethnomethodology

The consequence of accepting two pervasive contemporary media theories, mediatization and platform society, is to acknowledge that platforms have become infrastructures of everyday life in many parts of the world.

Since infrastructures are by most definitions invisible, most people are not always aware of the role of platforms in their everyday lives. Even when we are aware of them, it is difficult to feel their foundational impact except when they are disrupted. This is where two key sociologists come to our assistance: Alfred Schutz, a sociologist of knowledge who was concerned with the symbolic infrastructures of everyday social life, and Harold Garfinkel, who was influenced by Schutz and interested in the practical foundations of stable everyday social interactions with others.

In the 1960s, Garfinkel developed an approach that he called ‘ethnomethodology’, which focuses on micro-level social practices that stabilize everyday life at home, at work, or in public places. He devised a number of empirical methods to enable researchers to study these practices. One of the best-known methods was ‘breaching experiments’, which entailed various kinds of disruptions to generate ‘disorganized interaction’ in order to know, in Garfinkel’s words, ‘how the structures of everyday activities are ordinarily and routinely produced and maintained.’

These were not experiments in the scientific sense, where a causal relation or ‘effect’ is pursued, but ‘demonstrations’ of how the ‘unnoticed seens’ of everyday life, or what Schutz calls the ‘world known in common and taken for granted’, can be rediscovered: ‘reflections through which the strangeness of an obstinately familiar world can be detected’.

Examples of breaching experiments included standing too close to someone in an elevator, thereby violating personal space norms; negotiating the price of a non-negotiable item in a supermarket; responding to casual questions (like “How are you?”) with detailed, serious answers; and using formal language in an informal setting, or vice versa.

Regardless of the ethical challenges these breaching experiments now face and how we can tackle them, the study of everyday user experience of platforms, especially the process of personalization, can be greatly enhanced by using various forms of breaching experiments.

For example, in my own research about the reconfiguration of collectivity through mass personalized listening, I’m interviewing my participants in two phases: during and after a breaching experiment, which consists of using anonymous user accounts that have been trained by others.

When people use someone else’s Spotify account, they see a very different home page. They cannot find their usual playlists in their usual place. Their daily or weekly auto-curated playlists are very different, and they encounter a different taste in music and podcasts as well as different sets of habits. They must find ways to re-domesticate the new account, and the process is rich and insightful. People also imagine the person whose account they are using and often have interesting ideas about them: they may even be keen to know that person and ask them questions.

Another disruption happens when people get their own accounts back after a few weeks. They face things they had forgotten, or things they no longer like in their accounts. Their personalized playlists and graphical interface have a new meaning now, and they have interesting interpretations of this transition to share.

To conclude, it is fair to say that researching digital platforms poses new challenges compared to most other media forms because of their distinct ontology. Algorithms cannot be the main object of platform studies because of their hyper-modulated, invisible, and inextricable nature. This, in addition to platforms’ deep embedding in our everyday lives, calls for novel theories and innovative research methods.

This post represents the views of the author and not the position of the Media@LSE blog nor of the London School of Economics and Political Science.