Following a special workshop convened by the Media Policy Project on ‘Automation, Prediction and Digital Inequalities’, Seeta Peña Gangadharan, Acting Director of the LSE Media Policy Project, connects current concerns about automated, predictive technologies with the goals of digital inclusion, arguing that broadband adoption policies and programmes need to better prepare members of marginalised communities for a new era of intelligent machines.

We’ve reached a new frontier in digital inequalities.

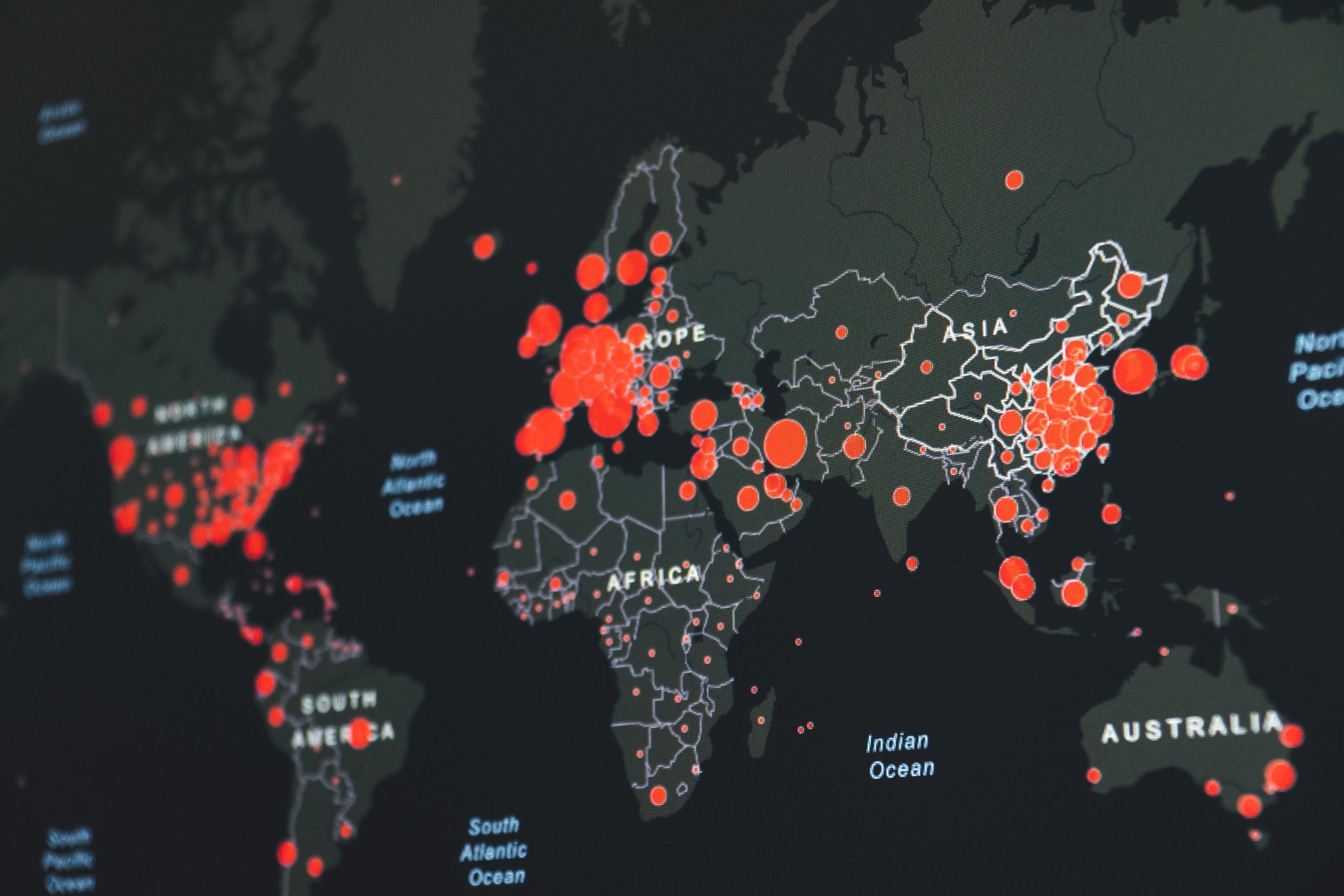

For years, researchers have argued that access to broadband corresponds with economic opportunity, health and well-being, civic participation, and more. In the UK, for example, the UK Digital Heatmap showed the compound effects of lack of access: areas with low levels of education, economic growth, and health also have low levels of broadband adoption.

To tackle this problem, policymakers frequently pursue a dual strategy: build out broadband infrastructure and broaden support for digital skills programmes. The latter efforts introduce members of underserved communities to basic digital literacy training focused on everyday skills that ordinary consumers and citizens need to get by.

But in an age of intelligent technologies such as driverless cars, tablet-based table service, drone delivery, and integrated surveillance systems for smart cities, people coming online face new and complex threats to their privacy and security, not to mention to their ability to make a better life for themselves. In fact, traditional approaches to digital inclusion are in need of radical rethinking: these policies and programmes must be adjusted to address both the data protection needs of target populations and social and economic justice for them. Without this, members of marginalised communities are headed for a digital dead end.

Take digital inclusion’s focus on broadband adoption and employment opportunities. Most digital literacy programmes offer students a mixture of technical, information-gathering, and communication skills. Learn to use a keyboard, search online job databases, and create a LinkedIn profile, and you can land yourself a job, for example, doing data entry for a company that runs its business online.

But these skills may become less important once automated, predictive technologies come into play, because artificial intelligence is particularly likely to constrain labour options for the poor. In an analysis of nearly 700 occupations classified by the U.S. Department of Labor, economists Carl Benedikt Frey and Michael A. Osborne estimated that 47 percent of total US employment was at high risk of computerisation, with low-wage, low-skill workers the most vulnerable. In service, sales, and transportation and logistics, autonomous, intelligent systems will replace human workers, or automate and surveil them in the workplace.

Suppose an individual returns to the workforce after serving a twenty-year prison sentence. She faces the very real prospect of competing with a robot for work waiting tables in a restaurant, stocking shelves in a supermarket, or driving for a delivery service. Some indicators already point to this trend. Just last week, Lyft announced it would road-test driverless taxis within the year. Meanwhile, Whole Foods has begun to roll out teaBot, an automated system that can make you a custom cup of loose-leaf tea.

And even when workers use computers rather than being replaced by them, digital service employees don’t enter the workplace as empowered agents of the so-called “knowledge economy.” For example, employers now deploy workplace monitoring technologies that reduce human productivity to algorithmic metrics, penalising workers and pushing them to dangerous limits. These practices have serious health impacts at odds with the narrative of the benefits of digital inclusion.

Many commentators view this Luddite fear of the computerisation of work as solvable: teach people how to code, and they will be better prepared for competition between humans and computers for low-wage work. Already, several initiatives concentrate on coding literacy, helping individuals to ‘skill up’ and confront the job market effectively. Other commentators have suggested that coding literacy which focuses less on how to code computers and more on why computational thinking matters in today’s society will also have a transformative effect on members of marginalised communities.

But the replacement-by-robot scenario is not the only facet of cumulative disadvantage. There is also an infrastructural problem that could disadvantage poorer broadband users: Internet Service Providers (ISPs) run the business of data flows, and increasingly they want to (and do) monetise the contents of those data flows, i.e., broadband usage data. According to ISPs such as the major US telecoms company Verizon, the sale of broadband usage data can help grow and diversify the market for online advertisers. Put more bluntly, usage data can generate large returns for ISPs.

For the marginalised, though, that kind of monetisation heightens risks. About a quarter of Web traffic still travels over unencrypted HTTP rather than HTTPS, which makes it easy for ISPs to analyse (in the aggregate), sell, and use for targeting. For better and for worse, such targeting has the power to influence how people make decisions, including ones essential to basic survival. A New America report speculated, for example, that an ISP could analyse patterns in usage times, or hire a third-party analytics firm to do so, and infer whether certain customers had recently lost their job (e.g., spikes in residential broadband use during normally dormant daytime hours, combined with traffic patterns pointing towards job search sites).

The sharing of that information down the data supply chain, to data brokers, lead generators, predatory financial services, or other problematic actors, has huge implications for data privacy and social justice. From a data privacy perspective, the value of broadband usage data to payday loan providers, for example, would never be evident to the customer herself. And while some might advocate transparency, it’s not clear that disclosure by an ISP would give customers adequate warning of the harmful scenarios that could later arise from analysis of broadband usage data, or from targeting based on it.

From a social justice perspective, while the possibility of being taken for a ride by predatory actors might be an equal risk for everyone, the dangers of data profiling differ for rich and poor people. Members of historically marginalised communities face a vicious circle: aggressively targeted by companies that prey on their vulnerability, they may fall victim to the just-in-time nudges of a short-term loan provider and face the very real prospect of getting into debt. Furthermore, they have fewer support systems or resources to help them climb out. Data profiling in this instance isn’t a mere nuisance, like an annoying ad for shoes that follows you from device to device. It shapes possibilities and life chances.

The conundrum raises the question of whether particular kinds of customers, for example low-income broadband users in underserved communities, should be exempted from analysis of broadband usage data altogether. Beyond the kinds of data analysis necessary to maintain standards of network performance, ISPs might elect, as a matter of public interest, to curtail other forms of first-party analysis of broadband usage data as well as the sharing of such data with third parties. By doing so, ISPs would create a safer environment and help customers in underserved communities feel more welcome and more trusting in online settings.

None of this suggests that digital literacy is obsolete. In a data-driven world, there’s still a place for learning not only computer and Internet skills but also broader knowledge about the nature of an always-on, networked world, including both its harms and benefits.

But the burden should not rest on users alone. Some privacy and data literacy programmes, especially those that educate the staff who work with underserved communities, offer models for how to increase know-how without expecting the individual user to do it all on her own. Even more promising are policies that focus on the ethics of design in automated, predictive systems and that support the development of tools to catch discriminatory data profiling. Before it’s too late to undo harmful data-driven path dependencies, let’s build out and expand these approaches.

This blog gives the views of the author and does not represent the position of the LSE Media Policy Project blog, nor of the London School of Economics and Political Science.

This post was published to coincide with a workshop held in April 2016 by the Media Policy Project, ‘Automation, Prediction and Digital Inequalities’. This was the third of a series of workshops organised throughout 2015 and 2016 by the Media Policy Project as part of a grant from the LSE’s Higher Education Innovation Fund (HEIF5).

How touching that you play the ancient game of I am a window cleaner, a robot will never take my job. You don’t get it do you ? Coding ? You think humans can find refuge in coding ? Robots can’t do coding ? Oh that’s a funny one. In two hundred years time the tech is so vast there is no job a human can do, that a robot an’t. You think you can do 200 zillionaires, and seven billion unemployed ? The humans of this age are cocaine addict hooked on employment. Let the morons with a work ethic work, I will sit by the swimming pool. Robot productivity is utopian. You put stuff on the ration system. Only idiots work for living. In the end eventhe ration system is academic, food and clothes become free. With 3d copiers, who would dream of buying stuff from a corporation. Evetbody gets Kodack-ed.