How to tackle ‘fake news’ and the societal implications of misinformation are questions under consideration by policy makers, civil society, the tech industry and others. In this post, Cambridge University’s Sander van der Linden and Jon Roozenbeek present their answer: Bad News, a game based on the mechanics of fake news that applies insights from behavioural science to develop resistance amongst players to fake news.

Journalists, politicians, academics, and governments all agree that the problem of news manipulation needs to be addressed, even if no one seems to be able to agree on what to call it. The terms ‘fake news’, ‘misinformation’, ‘disinformation’, and ‘propaganda’ are all used interchangeably. But none of this should distract from the fact that we are dealing with a serious problem that’s getting bigger. We know from scientific research that fake news spreads farther, faster and deeper on social media than any other type of news, even (real) stories about natural disasters, terror attacks and the Kardashians. And that’s just the internet; fake news can have real-world consequences as well. In 2018, more than twenty people were killed in India after rumours went viral on WhatsApp alleging the presence of child abductors in several villages across the country. Many readers will also remember the PizzaGate affair from 2016, when a man entered a Washington, D.C. restaurant and fired a rifle, after he was duped by a false story about Hillary Clinton running a child trafficking ring there.

Sustainable solutions to the fake news problem aren’t easy to find, although not for lack of trying. Proposed solutions range from legislating news media, to tweaking algorithms and moderating content, to “softer” approaches such as fact-checking, debunking, and media literacy education. All of these approaches have their pros and cons. Lawmakers are often reluctant to introduce new laws to halt the flow of misinformation, as this quickly runs into issues with freedom of the press, and may provide an excuse for authoritarian states to crack down on dissenting opinions. Deleting, demonetising or disincentivising content that is deemed problematic, as is being done by social media companies like Facebook, YouTube and Twitter, can backfire: algorithms aren’t 100% accurate in deciding what counts as problematic content, and mishandled grey zone cases can lead to lots of problems. Next up are fact-checking organisations like Snopes that debunk viral fake news stories. Although this is useful, it runs up against the continued influence of misinformation: once people have been exposed to a falsehood they often continue to believe in it even after a correction has been issued. Lastly, media literacy initiatives are set up all over the world to teach children not to fall for misleading stories. The sad thing is that those who aren’t currently in education (meaning: the vast majority of the population) aren’t benefitting from such efforts.

Being behavioural scientists, we started to think about what else could be done. Enter inoculation theory, a classic theory from social psychology that posits that it’s possible to confer psychological resistance against persuasion by pre-emptively exposing people to a weakened version of a deceptive argument, much like a real vaccine confers resistance against a pathogen by exposing the body to a severely weakened dose of the “virus”. After an initial paper which showed that it’s possible to ‘inoculate’ people against a specific piece of misinformation about climate change, we decided to up the ante a little bit. Rather than ‘inoculating’ people against specific falsehoods, we theorised that it should also be possible to help people spot common strategies used in the production of most fake news. The big advantage of this, at least in theory, is that people develop something like a Spidey Sense for manipulation, and can spot fake or misleading content by teasing out manipulation strategies.

Long story short, we built a video game. Together with partners at DROG, a Dutch anti-misinformation platform, we created Bad News, a multiple award-nominated free-to-play online browser game in which players start out as anonymous Twitter users and grow to become fake news tycoons by making use of various common manipulation strategies to gain followers whilst preserving online credibility. In total, there are six badges to be earned in the game, each representing one such strategy: impersonating people online, using emotional language, polarising audiences, spreading conspiracy theories, discrediting opponents, and trolling.

So why play the ‘bad guy’? Think of it this way: the first time you go to a magic show, you’re likely to be duped by the trick. But once the magician explains the trick to you, you won’t be fooled by it again. However, just sitting back and letting other people tell you what the facts are isn’t very fun. Bad News inoculates people against deception by letting people do the trick themselves in a simulated environment. After all, experience is a powerful teacher.

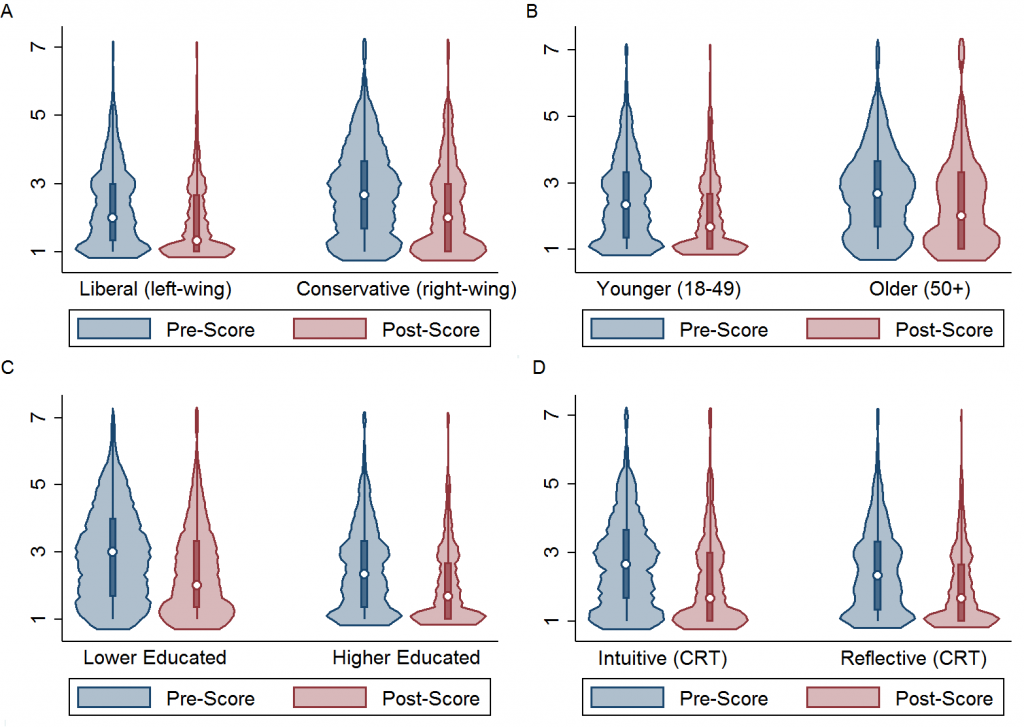

So did it work? Short answer: yes. Since its launch in early 2018, the game has been played more than half a million times. And, importantly, about 15,000 people responded to our in-game survey, the results of which we published recently in the journal Palgrave Communications. We wanted to test if players become better at spotting deception techniques in Twitter (news) posts that we showed them before and after playing the game. To do so, we designed a number of headlines that were fictitious but based on real instances of fake news. Why? Well, one major issue in the literature on fake news is memory confounds: people may know a headline is fake or real simply because they remember it. The results of our study showed that people significantly downgraded the reliability of fake (but not real!) news items after playing, and these effects were robust across different demographics like age, gender, education level and political affiliation.

Figure: Violin plots showing the kernel density distribution of pre-reliability and post-reliability judgements with median box plots (horizontal bars). Note: political ideology (panel a), age (panel b), education (panel c), and cognitive reflection (panel d). Source: Fake news game confers psychological resistance against online misinformation.

There were some limitations, of course. For one, our sample was self-selected and we did not have a randomised control group. However, we’re currently running trials that are confirming our prior findings, even over time. Together with the UK Foreign Office we have also translated the game into 14 new languages (including German, Czech, Polish, Greek, Esperanto, Swedish and Serbian), which will allow us to do large-scale cross-cultural comparisons. We’re also working with WhatsApp to combat rumours spreading on direct messaging apps by designing a new version of the game. Ultimately, although we think that a cure for the post-truth era will require a multi-layered defence system, one thing is clear: the science of prebunking has only just begun.

This post was first published on the LSE’s Impact Blog and is republished with thanks. It draws on the authors’ co-authored article, Fake news game confers psychological resistance against online misinformation, published in Palgrave Communications. This post represents the views of the authors, and not the position of the LSE Media Policy Project, nor of the London School of Economics and Political Science.

(Featured images sourced from Bad News.)