As the UK prepares to host its AI Safety Summit, Sebastián Lehuedé, a researcher at the University of Cambridge’s Centre of Governance and Human Rights, argues that far from being a ‘global’ event, Rishi Sunak’s Summit will only appeal to a narrow set of countries, while in contrast, China is already engaging with developing and non-Western countries on AI governance.

This week, the British Prime Minister Rishi Sunak is hosting the first AI Safety Summit. While the UK government has billed the event as a pioneering ‘global’ governance forum, in practice the actors and concerns at stake do not reflect the truly global character of AI.

Last week, Sunak affirmed: ‘My vision, and our ultimate goal, should be to work towards a more international approach to safety’. Along those lines, he said he expects that this summit will set up a ‘truly global’ expert panel on the matter.

This ambition contrasts with the decision to invite only a few countries to the summit. As the government states: ‘The summit itself will bring together a small number of countries, academics, civil society representatives and companies who have already done thinking in this area to begin this critical global conversation’. Thus, representation was never a goal underlying this summit.

When it comes to the summit’s themes, only ‘misuse’ and ‘loss of control’ risks will be addressed. Such a focus on hypothetical and apocalyptic scenarios, which echoes the vested interests of the AI industry, does not speak to countries already facing concrete social and environmental harms engendered by AI.

The narrow set of actors and themes of the AI Safety Summit ignores the fact that AI relies on a vast value chain of labour and natural resources that spans the world. Let me provide an example stemming from my research. Last year, I spoke to residents of the working-class area of Cerrillos in Santiago, Chile’s capital city, who are fighting the establishment of a Google data centre project. Data centres host the computers that process the vast amounts of data that make AI work. Data centres are water- and energy-intensive since data processing servers need to be constantly cooled. The Cerrillos community mobilised against Google’s project as it was expected to use 169 litres of water per second in an area facing severe drought. There is currently no clarity on whether this project will ever see the light of day. The Chilean case speaks to a broader issue since, as research indicates, data centres’ water consumption will go from 4.2 to 6.6 billion cubic metres in 2027 due to the rise of AI. This is equivalent to half the UK’s water consumption.

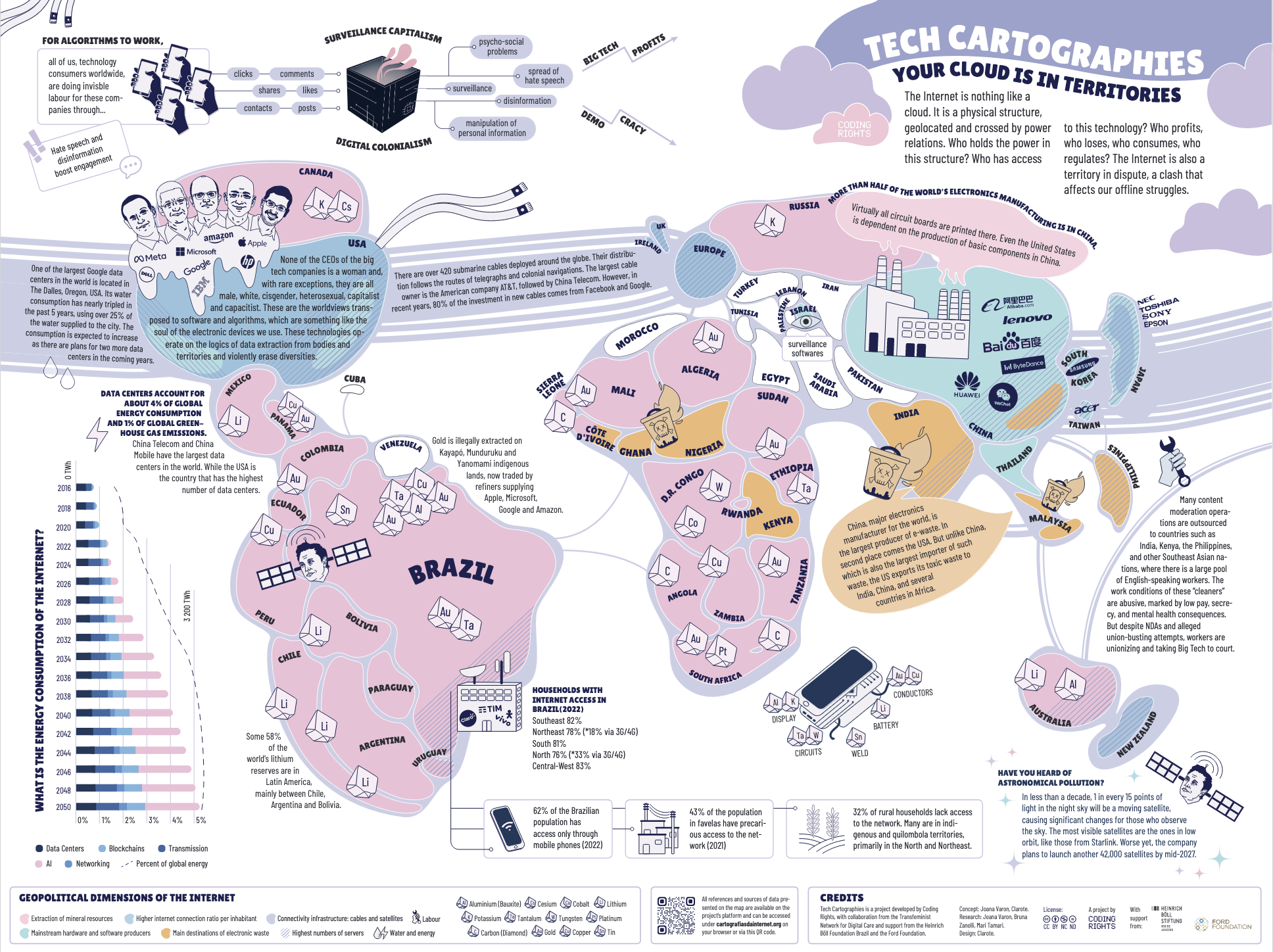

‘Tech Cartographies: Your Cloud is in Territories’ by Coding Rights. As this graphic shows, the cloud (and AI) relies on a global network of labour and natural resources.

Along with data centres’ energy and water consumption, other relevant global concerns will be excluded from Sunak’s summit. In Kenya, workers labelling the data used to train AI algorithms were found to be paid less than US$2 per hour and exposed to mentally distressing content. In that same region, the dumping and re-selling of intelligent devices is releasing hazardous substances that threaten the lives of the local population and contribute to global warming. In South America, indigenous communities are witnessing the decline of flamingo colonies and tree species due to the water-intensive extraction of lithium, a mineral used to build intelligent devices such as voice assistants and smartphones.

Sunak’s focus on ‘AI Safety’ will also exclude original research being conducted in different regions of the world. For example, I am affiliated to the state-funded Future of Artificial Intelligence Research (FAIR) interdisciplinary group involving three prestigious universities in Chile. Not coincidentally, no researcher in this group is investigating hypothetical ‘rogue robot’ scenarios. Instead, almost half of us are looking at AI-related environmental issues such as data centres and resource extraction.

Unlike the UK, so-called ‘AI superpower’ China is engaging with developing and non-Western countries in a much more decisive way. In August, BRICS announced the setting up of a study group aimed at building ‘more secure, reliable, controllable and equitable’ AI. Two weeks ago, China launched the Global AI Governance Initiative that, besides addressing a broader range of themes, proactively encourages participation from developing countries. Regional blocs are also emerging. For example, last week 20 Latin American and Caribbean countries signed an agreement to ensure a common voice in global AI governance.

These examples show that Sunak is not only failing to pioneer global AI governance but also excluding key actors in the field. Hopefully, this insular approach will be rectified in the future so that the UK does not drift towards isolation.

This post represents the views of the author and not the position of the Media@LSE blog, nor of the London School of Economics and Political Science.

Featured image by Number 10 on flickr, used under the CC BY-NC-ND 2.0 Deed license