![By Image signed by T.Belack, artist name lost to history, 118 years old. [Public domain], via Wikimedia Commons](https://i0.wp.com/upload.wikimedia.org/wikipedia/commons/8/87/Le_Petit_Journal_-_6_August_1894.jpg?resize=147%2C217)

Thomson Reuters have released the annual round of updates to their ranked list of journals by journal impact factor (JIF) in yesterday’s Journal Citation Reports. Impact Factors have come under increasing scrutiny in recent years for their lack of transparency and for their use in misleading attempts at research assessment. Last year the San Francisco Declaration on Research Assessment (DORA) took a groundbreaking stance by explicitly disavowing the use of impact factors in assessment. This document has since drawn support worldwide and across the academic community. But what exactly are Journal Impact Factors, and why are they the cause of so much concern? Here is a reading list that highlights some helpful pieces we have featured on the Impact blog over the last few years.

What are Journal Impact Factors?

According to Wikipedia:

“The impact factor (IF) of an academic journal is a measure reflecting the average number of citations to recent articles published in the journal. It is frequently used as a proxy for the relative importance of a journal within its field, with journals with higher impact factors deemed to be more important than those with lower ones. The impact factor was devised by Eugene Garfield, the founder of the Institute for Scientific Information (ISI). [ISI was later acquired by Thomson Reuters.] Impact factors are calculated yearly starting from 1975 for those journals that are indexed in Thomson Reuters’ Journal Citation Reports.”

You can also read how Thomson Reuters, which provides the Web of Science citation indexing service, describes the measure itself:

The JCR provides quantitative tools for ranking, evaluating, categorizing, and comparing journals. The impact factor is one of these; it is a measure of the frequency with which the “average article” in a journal has been cited in a particular year or period. The annual JCR impact factor is a ratio between citations and recent citable items published. Thus, the impact factor of a journal is calculated by dividing the number of current year citations to the source items published in that journal during the previous two years
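The quoted definition stops before naming the denominator. Under the standard two-year definition, a journal's impact factor for year Y is the number of citations received in Y by items the journal published in Y-1 and Y-2, divided by the number of citable items it published in those same two years. A minimal Python sketch of that arithmetic follows; the function name, parameter names and all figures are hypothetical, chosen only for illustration.

```python
from typing import Mapping

def two_year_jif(year: int,
                 citations_in_year: Mapping[int, int],
                 citable_items: Mapping[int, int]) -> float:
    """Two-year impact factor of a journal for `year` (illustrative sketch).

    citations_in_year: publication year -> citations received during `year`
        by items the journal published in that publication year.
    citable_items: publication year -> number of citable items the journal
        published in that year.
    """
    window = (year - 1, year - 2)  # the two preceding publication years
    numerator = sum(citations_in_year.get(y, 0) for y in window)
    denominator = sum(citable_items.get(y, 0) for y in window)
    if denominator == 0:
        raise ValueError("no citable items in the two-year window")
    return numerator / denominator

# Hypothetical journal; these figures are invented, not taken from the JCR.
cites_2013 = {2011: 300, 2012: 200}  # citations made in 2013
items = {2011: 120, 2012: 130}       # citable items published
print(two_year_jif(2013, cites_2013, items))  # 500 / 250 -> 2.0
```

One simplification is worth flagging: in the JCR the numerator counts citations to everything the journal published in the window, whereas the denominator counts only items classed as citable (such as research articles and reviews). That asymmetry is one source of the transparency concerns raised in the introduction.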

The under-representation of developing world research in Thomson Reuters’ Web of Science (WoS)

The under-representation of developing world research in Thomson Reuters’ Web of Science (WoS)

Why are Impact Factors so problematic?

The demise of the Impact Factor: The strength of the relationship between citation rates and IF is down to levels last seen 40 years ago

The demise of the Impact Factor: The strength of the relationship between citation rates and IF is down to levels last seen 40 years ago

Jobs, grants, prestige and career advancement are all partially based on an admittedly flawed concept: the journal Impact Factor. Impact factors have become increasingly meaningless since 1991, writes George Lozano, who finds that the variance of papers’ citation rates around their journals’ IF has been rising steadily.

Impact factors declared unfit for duty

Impact factors declared unfit for duty

The San Francisco Declaration on Research Assessment aims to address the research community’s problems with evaluating individual outputs, a welcome announcement for those concerned with the misuse of journal impact factors. Stephen Curry commends the Declaration’s recommendations, but also highlights some remaining difficulties in refusing to participate in an institutional culture still beholden to the impact factor.

High-impact journals: where newsworthiness trumps methodology

High-impact journals: where newsworthiness trumps methodology

Criticism continues to mount against high impact factor journals, with a new study suggesting that a preference for publishing front-page, “sexy” science has come at the expense of methodological rigour. Dorothy Bishop confirms these findings in her assessment of a recent paper on dyslexia and fears that if the primary goal of some journals is media coverage, science will suffer. More attention should be paid to whether a study has adopted an appropriate methodology.

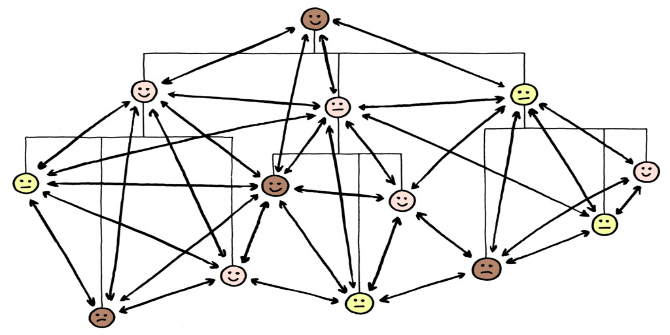

Alternative metrics offer the opportunity to redirect incentive structures towards problems that contribute to development, or at least to local priorities, argues Juan Pablo Alperin. But the altmetrics community needs to actively engage with scholars from developing regions to ensure the new metrics do not continue to cater to well-known and well-established networks.

How journals manipulate the importance of research and one way to fix it

How journals manipulate the importance of research and one way to fix it

Our methods of rewarding research foster an incentive for journal editors to ‘game’ the system, and one in five researchers report being pressured to include citations from the prospective journal before their work is published. Curt Rice outlines how we can put an end to coercive citations.

High impact factors are meant to represent strong citation rates, but these journal impact factors are more effective at predicting a paper’s retraction rate.

High impact factors are meant to represent strong citation rates, but these journal impact factors are more effective at predicting a paper’s retraction rate.

Journal ranking schemes may seem useful, but Björn Brembs discusses how the Thomson Reuters Impact Factor appears to predict retractions more reliably than it predicts the number of citations a given paper will receive. Should academics think twice about the benefits of publishing in a ‘high impact’ journal?

How can the situation for research assessment be improved?

Now that the REF submission window has closed, a small panel of academics are tasked with rating thousands of academic submissions, which will result in university departments being ranked and public money being distributed. Given the enormity of the task and the scarcity of the resources devoted to it, Daniel Sgroi discusses a straightforward procedure that might help, based on the Bayesian methods that academic economists study and teach when considering the problem of decision-making under uncertainty.

Leading or following: Data and rankings must inform strategic decision making, not drive them

Leading or following: Data and rankings must inform strategic decision making, not drive them

At the Future of Impact conference, Cameron Neylon argued that universities must ask how their research is being re-used, and choose to become the most skilled in using available data to inform strategic decision making. It’s time to put down the Impact voodoo doll and stop using rankings blindly.

Impact Round-Up on the cure for impact factor mania.

Impact Round-Up on the cure for impact factor mania.

Arturo Casadevall and Ferric C. Fang present their findings on Causes for the Persistence of Impact Factor Mania in the recent issue of mBio. The comprehensive piece outlines the many problems caused by the impact factor in the scientific process and also clarifies what scientists can do to break with this destructive behaviour.

Jonathan Harle and Sioux Cumming discuss how to strengthen research networks in developing countries. There is still a huge body of Southern research which simply never gets counted. Research that is undertaken and published in the South needs to be valued, and this will only happen when Southern universities value it in their reward and promotion systems and when research funders recognise it in the grant applications they receive.

Impact Factors are a godsend for overworked and distracted individuals, and while Google Scholar goes some way towards using multiple measures to determine a researcher’s impact, Jonathan Becker argues that we can go one better. He writes that engagement is the next metric that academics must conquer.

The Web of Science and its corresponding Journal Impact Factor are inadequate for an understanding of the impact of scholarly work from developing regions

The Web of Science and its corresponding Journal Impact Factor are inadequate for an understanding of the impact of scholarly work from developing regions A Bayesian approach to the REF: finding the right data on journal articles and citations to inform decision-making.

A Bayesian approach to the REF: finding the right data on journal articles and citations to inform decision-making.

Geographies of knowledge: practical ways to boost the visibility of research undertaken and published in the South.

Geographies of knowledge: practical ways to boost the visibility of research undertaken and published in the South. We can do much better than rely on the self-fulfilling impact factor: Academics must harness ideas of engagement to illustrate their impact

We can do much better than rely on the self-fulfilling impact factor: Academics must harness ideas of engagement to illustrate their impact

Just a quick correction: it’s “Thomson Reuters”, not “Thompson Reuters” as Wikipedia would appear to spell it.

Thanks, Neil! Have fixed the error now.

-Sierra

An article on what its title calls “impact factor fetishism”, by Christian Fleck in the European Journal of Sociology, discusses some of the points raised here. http://dx.doi.org/10.1017/S0003975613000167

Very useful post. However, the cartogram in the post is misleading, because one would not expect the number of WoS articles to be related to the surface area of countries in the first place. As it stands, the impression the image gives is distorted by population density: countries with above-average numbers of WoS articles but below-average population density (such as Canada and Australia) shrink instead of growing. If the makers want to use this type of anamorphosis, they should have depicted the number of articles per inhabitant. Only then would you see the real effect of wealth, education and research investment, language, etc.